Apache Kafka® is a distributed streaming platform. What exactly does that mean?

We think of a streaming platform as having three key capabilities:

1. It lets you publish and subscribe to streams of records. In this respect it is similar to a message queue or enterprise messaging system.

2. It lets you store streams of records in a fault-tolerant way.

3. It lets you process streams of records as they occur.

What is Kafka good for?

It gets used for two broad classes of application:

1. Building real-time streaming data pipelines that reliably get data between systems or applications

2. Building real-time streaming applications that transform or react to the streams of data

To understand how Kafka does these things, let's dive in and explore Kafka's capabilities from the bottom up.

First a few concepts:

* Kafka is run as a cluster on one or more servers.

* The Kafka cluster stores streams of records in categories called topics.

* Each record consists of a key, a value, and a timestamp.
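
The three concepts above can be modeled in a few lines. The sketch below is a toy, in-memory Python model and not the Kafka client API. The names `Record`, `Topic`, `publish` and the topic name `page-views` are hypothetical and chosen for illustration. Partitions are deliberately left out of this first model.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Record:
    """A record as described above: a key, a value, and a timestamp (toy model)."""
    key: Optional[bytes]  # a record may be published without a key
    value: bytes
    timestamp_ms: int     # milliseconds since the Unix epoch


@dataclass
class Topic:
    """A named category that records are published to (partitions omitted here)."""
    name: str
    records: List[Record] = field(default_factory=list)

    def publish(self, key: Optional[bytes], value: bytes) -> Record:
        record = Record(key, value, int(time.time() * 1000))
        self.records.append(record)
        return record


# A toy "cluster": topic name -> topic (hypothetical topic name).
cluster: Dict[str, Topic] = {"page-views": Topic("page-views")}
cluster["page-views"].publish(b"user-42", b"/index.html")
print(cluster["page-views"].records[0].key)  # → b'user-42'
```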

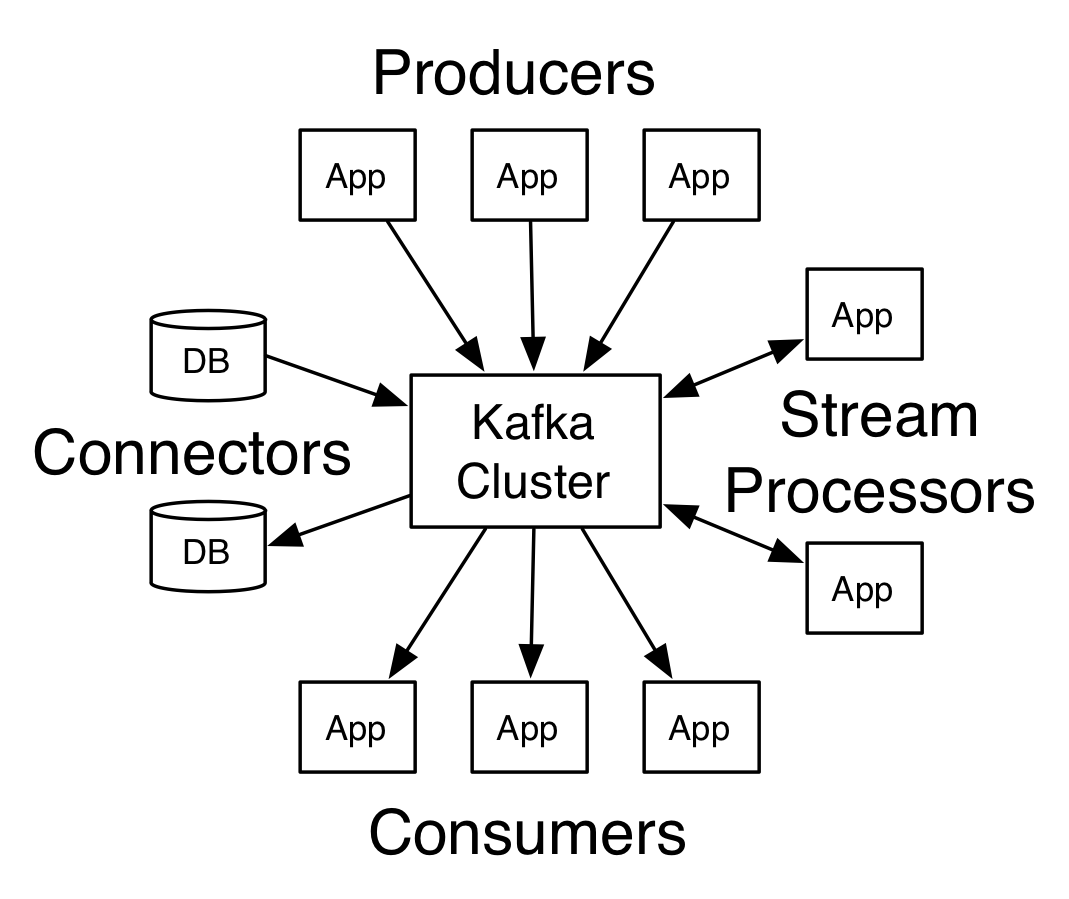

Kafka has four core APIs:

* The Producer API allows an application to publish a stream of records to one or more Kafka topics.

* The Consumer API allows an application to subscribe to one or more topics and process the stream of records produced to them.

* The Streams API allows an application to act as a stream processor, consuming an input stream from one or more topics and producing an output stream to one or more output topics, effectively transforming the input streams to output streams.

* The Connector API allows building and running reusable producers or consumers that connect Kafka topics to existing applications or data systems. For example, a connector to a relational database might capture every change to a table.

In Kafka the communication between the clients and the servers is done with a simple, high-performance, language-agnostic TCP protocol. This protocol is versioned and maintains backwards compatibility with older versions. We provide a Java client for Kafka, but clients are available in many languages.
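
To show what a "simple, versioned" binary protocol looks like on the wire, the sketch below frames a request in the layout described in the Kafka protocol guide:

- a 4-byte big-endian size prefix;
- a request header with the API key, the API version, a correlation id, and a length-prefixed client id string;
- the request body.

It builds an ApiVersions request (API key 18, version 0, empty body) and does not open a socket. Treat the layout as a simplified reading of the guide, not a complete client: newer, "flexible" header versions add tagged fields. The helper names are hypothetical.

```python
import struct

API_VERSIONS_KEY = 18  # ApiVersions request key, per the Kafka protocol guide


def encode_request(api_key: int, api_version: int, correlation_id: int,
                   client_id: str, body: bytes = b"") -> bytes:
    """Frame a request: int32 size, then header (int16, int16, int32, string) and body."""
    cid = client_id.encode("utf-8")
    # ">" = big-endian, no padding; the client id is prefixed by its int16 length.
    header = struct.pack(">hhih", api_key, api_version, correlation_id, len(cid)) + cid
    payload = header + body
    return struct.pack(">i", len(payload)) + payload


def decode_header(frame: bytes):
    """Read back the size prefix and fixed header fields (used here for checking)."""
    (size,) = struct.unpack_from(">i", frame, 0)
    api_key, api_version, correlation_id, cid_len = struct.unpack_from(">hhih", frame, 4)
    client_id = frame[14:14 + cid_len].decode("utf-8")  # header fields end at byte 14
    return size, api_key, api_version, correlation_id, client_id


frame = encode_request(API_VERSIONS_KEY, 0, correlation_id=1, client_id="demo")
print(len(frame), decode_header(frame))  # → 18 (14, 18, 0, 1, 'demo')
```

The broker echoes the correlation id in its response, which lets a client match responses to the requests it sent over one connection. The explicit version number in every request is what allows newer brokers to keep serving older clients.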

Topics and Logs

Let's first dive into the core abstraction Kafka provides for a stream of records—the topic.

A topic is a category or feed name to which records are published. Topics in Kafka are always multi-subscriber; that is, a topic can have zero, one, or many consumers that subscribe to the data written to it.

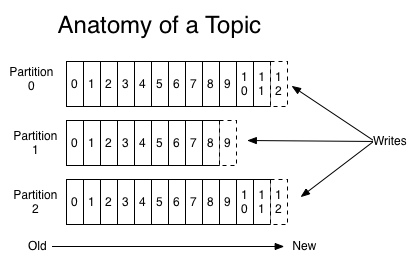

For each topic, the Kafka cluster maintains a partitioned log that looks like this:

Each partition is an ordered, immutable sequence of records that is continually appended to—a structured commit log. The records in the partitions are each assigned a sequential id number called the offset that uniquely identifies each record within the partition.
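
The contract of a single partition fits in a small class:

- records are only ever appended;
- the order of records is fixed;
- offsets are assigned sequentially.

This is a hypothetical in-memory sketch. A real partition is stored as files on disk, and `PartitionLog` is not a Kafka class.

```python
from typing import List, Tuple


class PartitionLog:
    """Toy partition: an append-only, ordered sequence of records with offsets."""

    def __init__(self) -> None:
        self._records: List[Tuple[str, str]] = []  # (key, value) pairs

    def append(self, key: str, value: str) -> int:
        """Append a record at the end and return the offset assigned to it."""
        self._records.append((key, value))
        return len(self._records) - 1  # offsets are sequential: 0, 1, 2, ...

    def read(self, offset: int) -> Tuple[str, str]:
        """Reading never modifies or removes a record."""
        return self._records[offset]

    def end_offset(self) -> int:
        """The offset the next appended record will receive."""
        return len(self._records)


log = PartitionLog()
offsets = [log.append("k", v) for v in ("a", "b", "c")]
print(offsets, log.read(1))  # → [0, 1, 2] ('k', 'b')
```

Offsets are only unique within one partition. A second `PartitionLog` would also start at offset 0, which is why a record's position is identified by topic, partition, and offset together.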

The Kafka cluster retains all published records, whether or not they have been consumed, using a configurable retention period. For example, if the retention policy is set to two days, then for the two days after a record is published, it is available for consumption, after which it will be discarded to free up space. Kafka's performance is effectively constant with respect to data size, so storing data for a long time is not a problem.
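
The retention rule can be sketched as follows, assuming a time-based policy. For simplicity this toy drops individual records. Kafka itself deletes whole log segments, controlled by topic settings such as `retention.ms`. `RetainedLog` is a hypothetical name. Offsets are never reused: when old records are discarded, the oldest available offset simply moves forward.

```python
from collections import deque
from typing import Deque, List, Tuple

DAY_MS = 24 * 60 * 60 * 1000


class RetainedLog:
    """Toy log that keeps records for a fixed period, whether consumed or not."""

    def __init__(self, retention_ms: int) -> None:
        self.retention_ms = retention_ms
        self.start_offset = 0  # offset of the oldest record still retained
        self._entries: Deque[Tuple[int, str]] = deque()  # (timestamp_ms, value)

    def append(self, timestamp_ms: int, value: str) -> int:
        self._entries.append((timestamp_ms, value))
        return self.start_offset + len(self._entries) - 1

    def enforce_retention(self, now_ms: int) -> None:
        """Discard records older than the retention period from the front of the log."""
        while self._entries and now_ms - self._entries[0][0] > self.retention_ms:
            self._entries.popleft()
            self.start_offset += 1  # later records keep their original offsets

    def available(self) -> List[Tuple[int, str]]:
        """(offset, value) pairs still available for consumption."""
        return [(self.start_offset + i, v) for i, (_, v) in enumerate(self._entries)]


log = RetainedLog(retention_ms=2 * DAY_MS)  # "two days", as in the text
log.append(0, "old")
log.append(3 * DAY_MS, "new")
log.enforce_retention(now_ms=3 * DAY_MS)
print(log.available())  # → [(1, 'new')]
```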

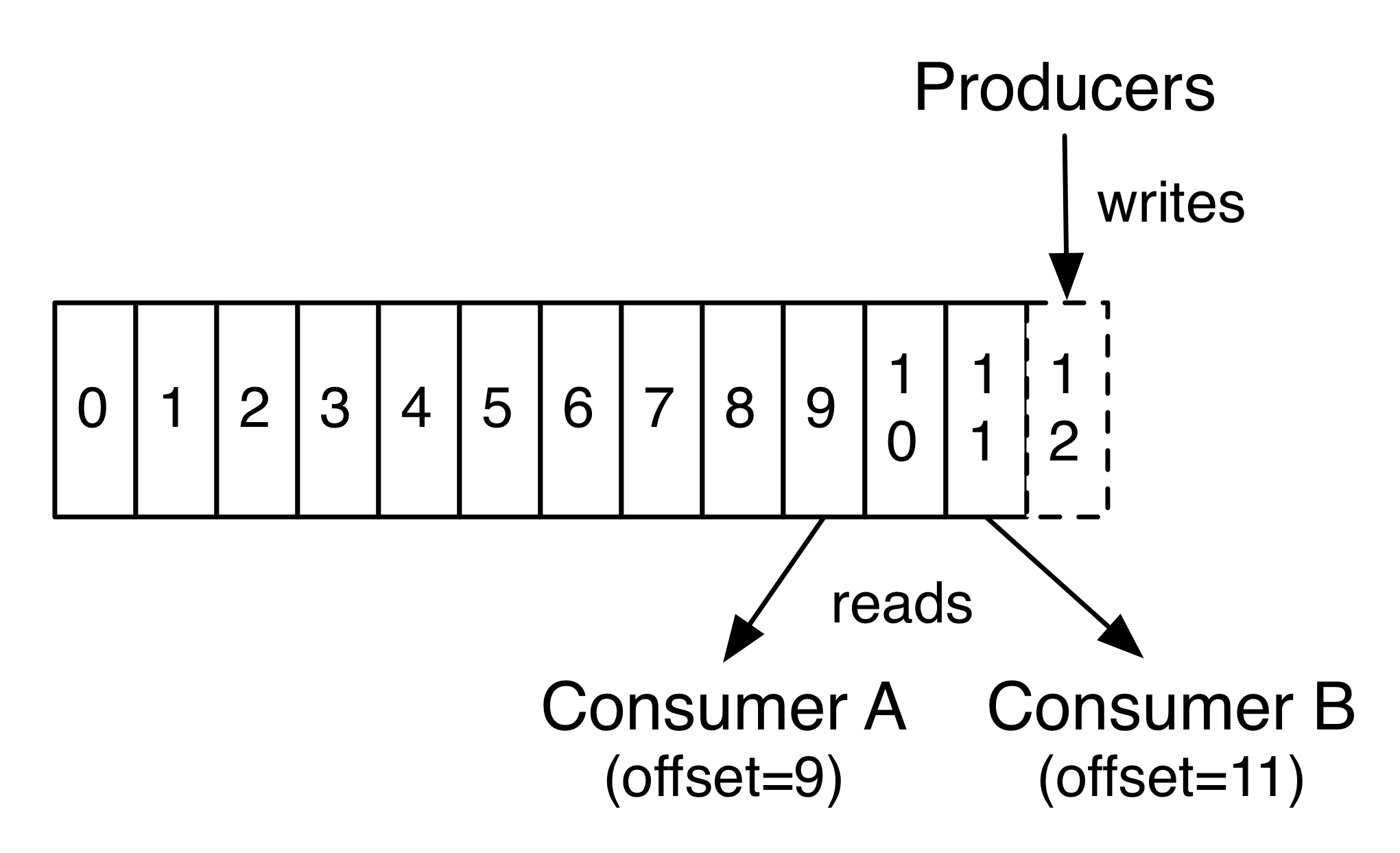

In fact, the only metadata retained on a per-consumer basis is the offset or position of that consumer in the log. This offset is controlled by the consumer: normally a consumer will advance its offset linearly as it reads records, but since the position is controlled by the consumer, it can in fact consume records in any order it likes. For example, a consumer can reset to an older offset to reprocess data from the past or skip ahead to the most recent record and start consuming from "now".
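
Because a consumer's only state is its position, rewinding and skipping ahead amount to changing one integer. The toy below assumes a single partition held in a Python list. The Java `KafkaConsumer` offers analogous operations (`seek`, `seekToBeginning`, `seekToEnd`), but `ToyConsumer` and its methods are hypothetical. The example also shows that two consumers reading the same partition do not affect each other.

```python
from typing import List


class ToyConsumer:
    """Consumer whose only per-consumer state is its position in one partition."""

    def __init__(self, log: List[str]) -> None:
        self.log = log      # shared view of one partition; the consumer never mutates it
        self.position = 0   # next offset this consumer will read

    def poll(self, max_records: int = 10) -> List[str]:
        batch = self.log[self.position:self.position + max_records]
        self.position += len(batch)  # advance linearly as records are read
        return batch

    def seek(self, offset: int) -> None:
        """Jump to any offset, e.g. back to an old one to reprocess data."""
        self.position = offset

    def seek_to_end(self) -> None:
        """Skip ahead so that only records appended from now on are read."""
        self.position = len(self.log)


partition = ["r0", "r1", "r2", "r3"]
a, b = ToyConsumer(partition), ToyConsumer(partition)
print(a.poll(2))  # → ['r0', 'r1']
a.seek(0)         # rewind to reprocess history
print(a.poll())   # → ['r0', 'r1', 'r2', 'r3']
b.seek_to_end()   # b starts consuming from "now"
partition.append("r4")
print(b.poll())   # → ['r4']
```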

This combination of features means that Kafka consumers are very cheap—they can come and go without much impact on the cluster or on other consumers. For example, you can use our command line tools to "tail" the contents of any topic without changing what is consumed by any existing consumers.

The partitions in the log serve several purposes. First, they allow the log to scale beyond a size that will fit on a single server. Each individual partition must fit on the servers that host it, but a topic may have many partitions, so it can handle an arbitrary amount of data. Second, they act as the unit of *parallelism*; more on that in a bit.

Distribution

The partitions of the log are distributed over the servers in the Kafka cluster with each server handling data and requests for a share of the partitions. Each partition is replicated across a configurable number of servers for fault tolerance.

Each partition has one server which acts as the "leader" and zero or more servers which act as "followers". The leader handles all read and write requests for the partition while the followers passively replicate the leader. If the leader fails, one of the followers will automatically become the new leader. Each server acts as a leader for some of its partitions and a follower for others so load is well balanced within the cluster.
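
The leader/follower layout can be sketched as a placement table. The sketch assumes a simple round-robin placement in which the first replica listed for a partition is its leader. Kafka's actual assignment and leader election are more involved: a new leader is chosen from the in-sync replicas. All function and broker names here are hypothetical, and the toy does not handle the case where every replica of a partition has failed.

```python
from typing import Dict, List


def assign_replicas(num_partitions: int, brokers: List[str], rf: int) -> Dict[int, List[str]]:
    """Place rf replicas per partition round-robin; the first replica is the leader."""
    if rf > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    return {
        p: [brokers[(p + i) % len(brokers)] for i in range(rf)]
        for p in range(num_partitions)
    }


def fail_over(assignment: Dict[int, List[str]], dead: str) -> Dict[int, List[str]]:
    """Remove a failed broker; where it was leader, the next replica becomes leader."""
    return {p: [b for b in replicas if b != dead] for p, replicas in assignment.items()}


brokers = ["broker-1", "broker-2", "broker-3"]
plan = assign_replicas(num_partitions=3, brokers=brokers, rf=2)
leaders = {p: replicas[0] for p, replicas in plan.items()}
print(leaders)  # → {0: 'broker-1', 1: 'broker-2', 2: 'broker-3'}
after = fail_over(plan, "broker-1")
print({p: r[0] for p, r in after.items()})  # → {0: 'broker-2', 1: 'broker-2', 2: 'broker-3'}
```

Each broker leads one partition and follows another, which is the load-spreading effect the paragraph describes. After `broker-1` fails, its follower `broker-2` takes over partition 0.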

Producers

Producers publish data to the topics of their choice. The producer is responsible for choosing which record to assign to which partition within the topic. This can be done in a round-robin fashion simply to balance load or it can be done according to some semantic partition function (say based on some key in the record). More on the use of partitioning in a second!
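
Both partitioning strategies fit in one method. In this sketch, keyless records are spread round-robin and keyed records are hashed, so a given key always lands in the same partition. `zlib.crc32` is a stand-in: Kafka's Java client hashes keys with murmur2, and newer client versions may group keyless records onto one partition at a time rather than strictly rotating. `ToyPartitioner` is a hypothetical name.

```python
import itertools
import zlib
from typing import Optional


class ToyPartitioner:
    """Picks a partition per record: key hash if a key is present, else round-robin."""

    def __init__(self, num_partitions: int) -> None:
        self.num_partitions = num_partitions
        self._counter = itertools.count()  # round-robin state for keyless records

    def partition(self, key: Optional[bytes]) -> int:
        if key is None:
            return next(self._counter) % self.num_partitions  # balance load evenly
        # Semantic partitioning: the same key always maps to the same partition.
        return zlib.crc32(key) % self.num_partitions


p = ToyPartitioner(num_partitions=4)
print([p.partition(None) for _ in range(5)])              # → [0, 1, 2, 3, 0]
print(p.partition(b"user-42") == p.partition(b"user-42"))  # → True
```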

Consumers

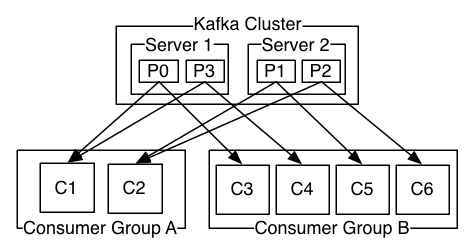

Consumers label themselves with a consumer group name, and each record published to a topic is delivered to one consumer instance within each subscribing consumer group. Consumer instances can be in separate processes or on separate machines.

If all the consumer instances have the same consumer group, then the records will effectively be load balanced over the consumer instances.

In the figure, for example, the two instances of consumer group A (C1 and C2) each consume two of the four partitions.

If all the consumer instances have different consumer groups, then each record will be broadcast to all the consumer processes.

In the figure, for example, C1 and C3 belong to different groups (A and B), so records from partition P0 are delivered to both of them.

A two-server Kafka cluster hosting four partitions (P0-P3) with two consumer groups. Consumer group A has two consumer instances and group B has four.
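
The figure's setup can be reproduced with a small simulation. Within each group, every partition has exactly one owner (load balancing). Every group sees every partition (broadcast). The round-robin assignment below is one possible assignment, assumed for illustration, and may not match the exact pairing drawn in the figure.

```python
from typing import Dict, List

PARTITIONS = ["P0", "P1", "P2", "P3"]
GROUPS = {"A": ["C1", "C2"], "B": ["C3", "C4", "C5", "C6"]}


def assign(partitions: List[str], members: List[str]) -> Dict[str, List[str]]:
    """Round-robin: each partition goes to exactly one member of the group."""
    owners: Dict[str, List[str]] = {m: [] for m in members}
    for i, p in enumerate(partitions):
        owners[members[i % len(members)]].append(p)
    return owners


def deliver(partition: str, assignments: Dict[str, Dict[str, List[str]]]) -> List[str]:
    """A record in `partition` reaches exactly one consumer instance in every group."""
    return [m for owners in assignments.values()
            for m, ps in owners.items() if partition in ps]


assignments = {g: assign(PARTITIONS, members) for g, members in GROUPS.items()}
print(assignments["A"])            # → {'C1': ['P0', 'P2'], 'C2': ['P1', 'P3']}
print(deliver("P0", assignments))  # → ['C1', 'C3']
```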

More commonly, however, we have found that topics have a small number of consumer groups, one for each "logical subscriber". Each group is composed of many consumer instances for scalability and fault tolerance. This is nothing more than publish-subscribe *semantics* where the subscriber is a cluster of consumers instead of a single process.

The way consumption is implemented in Kafka is by dividing up the partitions in the log over the consumer instances so that each instance is the exclusive consumer of a "fair share" of partitions at any point in time. This process of maintaining membership in the group is handled by the Kafka protocol dynamically. If new instances join the group they will take over some partitions from other members of the group; if an instance dies, its partitions will be distributed to the remaining instances.
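
Rebalancing can be sketched as recomputing each member's fair share whenever group membership changes. This toy splits the partitions into contiguous ranges, loosely in the style of range assignment. In Kafka the membership protocol is coordinated by a broker, and `rebalance` is a hypothetical helper. The same arithmetic shows why a group with more members than partitions leaves some members idle.

```python
from typing import Dict, List


def rebalance(partitions: List[int], members: List[str]) -> Dict[str, List[int]]:
    """Give each live member a contiguous, near-equal share of the partitions."""
    if not members:
        return {}
    ordered = sorted(members)
    share, extra = divmod(len(partitions), len(ordered))
    owners: Dict[str, List[int]] = {}
    start = 0
    for i, member in enumerate(ordered):
        size = share + (1 if i < extra else 0)  # the first `extra` members get one more
        owners[member] = partitions[start:start + size]
        start += size
    return owners


parts = list(range(6))
print(rebalance(parts, ["c1", "c2"]))        # → {'c1': [0, 1, 2], 'c2': [3, 4, 5]}
print(rebalance(parts, ["c1", "c2", "c3"]))  # c3 joins: {'c1': [0, 1], 'c2': [2, 3], 'c3': [4, 5]}
print(rebalance(parts, ["c1", "c3"]))        # c2 dies: {'c1': [0, 1, 2], 'c3': [3, 4, 5]}
print(rebalance([0, 1], ["a", "b", "c"]))    # → {'a': [0], 'b': [1], 'c': []}
```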

Kafka only provides a total order over records within a partition, not between different partitions in a topic. Per-partition ordering combined with the ability to partition data by key is sufficient for most applications. However, if you require a total order over records this can be achieved with a topic that has only one partition, though this will mean only one consumer process per consumer group.
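
The difference between per-partition order and total order can be checked with a small simulation. It assumes key-hash routing, with `zlib.crc32` standing in for Kafka's hash, and a consumer that reads the partitions one after another. The helper names are hypothetical. The order of events for each key survives with three partitions, and with a single partition the order of every event survives.

```python
import zlib
from typing import Dict, List, Tuple

Event = Tuple[str, int]  # (key, sequence number within that key)


def produce_then_consume(events: List[Event], num_partitions: int) -> List[Event]:
    """Route events by key hash, then read the partitions one after another."""
    partitions: Dict[int, List[Event]] = {p: [] for p in range(num_partitions)}
    for key, seq in events:
        partitions[zlib.crc32(key.encode()) % num_partitions].append((key, seq))
    # Each partition is read in order; partitions are not ordered relative to each other.
    return [e for p in range(num_partitions) for e in partitions[p]]


def per_key(events: List[Event]) -> Dict[str, List[int]]:
    """Group sequence numbers by key, preserving the order they were seen in."""
    out: Dict[str, List[int]] = {}
    for key, seq in events:
        out.setdefault(key, []).append(seq)
    return out


events = [("alice", 1), ("bob", 1), ("alice", 2), ("carol", 1), ("bob", 2), ("alice", 3)]
print(per_key(produce_then_consume(events, 3)) == per_key(events))  # → True
print(produce_then_consume(events, 1) == events)  # → True: one partition = total order
```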

Guarantees

At a high-level Kafka gives the following guarantees:

* Messages sent by a producer to a particular topic partition will be appended in the order they are sent. That is, if a record M1 is sent by the same producer as a record M2, and M1 is sent first, then M1 will have a lower offset than M2 and appear earlier in the log.

* A consumer instance sees records in the order they are stored in the log.

* For a topic with replication factor N, we will tolerate up to N-1 server failures without losing any records committed to the log.

More details on these guarantees are given in the design section of the documentation.

Kafka as a Messaging System

How does Kafka's notion of streams compare to a traditional enterprise messaging system?

Messaging traditionally has two models: queuing and publish-subscribe. In a queue, a pool of consumers may read from a server and each record goes to one of them; in publish-subscribe the record is broadcast to all consumers. Each of these two models has a strength and a weakness. The strength of queuing is that it allows you to divide up the processing of data over multiple consumer instances, which lets you scale your processing. Unfortunately, queues aren't multi-subscriber: once one process reads the data, it's gone. Publish-subscribe allows you to broadcast data to multiple processes, but has no way of scaling processing since every message goes to every subscriber.

The consumer group concept in Kafka generalizes these two concepts. As with a queue the consumer group allows you to divide up processing over a collection of processes (the members of the consumer group). As with publish-subscribe, Kafka allows you to broadcast messages to multiple consumer groups.

The advantage of Kafka's model is that every topic has both these properties—it can scale processing and is also multi-subscriber—there is no need to choose one or the other.

Kafka has stronger ordering guarantees than a traditional messaging system, too.

A traditional queue retains records in-order on the server, and if multiple consumers consume from the queue then the server hands out records in the order they are stored. However, although the server hands out records in order, the records are delivered asynchronously to consumers, so they may arrive out of order on different consumers. This effectively means the ordering of the records is lost in the presence of *parallel consumption*. Messaging systems often work around this by having a notion of "exclusive consumer" that allows only one process to consume from a queue, but of course this means that there is no parallelism in processing.

Kafka does it better. By having a notion of parallelism—the partition—within the topics, Kafka is able to provide both ordering guarantees and load balancing over a pool of consumer processes. This is achieved by assigning the partitions in the topic to the consumers in the consumer group so that each partition is consumed by exactly one consumer in the group. By doing this we ensure that the consumer is the only reader of that partition and consumes the data in order. Since there are many partitions this still balances the load over many consumer instances. Note however that there cannot be more consumer instances in a consumer group than partitions.

Kafka as a Storage System

Any message queue that allows publishing messages decoupled from consuming them is effectively acting as a storage system for the *in-flight* messages. What is different about Kafka is that it is a very good storage system.

Data written to Kafka is written to disk and replicated for fault-tolerance. Kafka allows producers to wait on acknowledgement so that a write isn't considered complete until it is fully replicated and guaranteed to persist even if the server written to fails.
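
Waiting for acknowledgement can be sketched as follows, assuming a write counts as committed once every replica holds it. In the Java producer this behavior is selected with the `acks` setting (`acks=all`). Real Kafka tracks a set of in-sync replicas rather than all replicas, so this toy is a simplification, and `ReplicatedPartition` is a hypothetical name. Because a committed record sits on all N replicas, it survives N-1 broker failures, matching the guarantee stated in the Guarantees section.

```python
from typing import Dict, List


class ReplicatedPartition:
    """Toy leader plus followers; a write is committed once every replica has it."""

    def __init__(self, replicas: List[str]) -> None:
        self.logs: Dict[str, List[str]] = {r: [] for r in replicas}
        self.leader = replicas[0]

    def produce(self, value: str) -> int:
        self.logs[self.leader].append(value)  # the leader appends first
        return len(self.logs[self.leader]) - 1

    def replicate(self, follower: str) -> None:
        """A follower copies whatever it is missing from the leader's log."""
        leader_log = self.logs[self.leader]
        self.logs[follower].extend(leader_log[len(self.logs[follower]):])

    def committed_up_to(self) -> int:
        """Number of records present on every replica (a simplified high watermark)."""
        return min(len(log) for log in self.logs.values())


part = ReplicatedPartition(["b1", "b2", "b3"])
offset = part.produce("order-1001")
print(offset < part.committed_up_to())  # → False: only the leader has it so far
for follower in ("b2", "b3"):
    part.replicate(follower)
print(offset < part.committed_up_to())  # → True: a producer waiting on all replicas may proceed
```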

The disk structures Kafka uses scale well—Kafka will perform the same whether you have 50 KB or 50 TB of persistent data on the server.

As a result of taking storage seriously and allowing the clients to control their read position, you can think of Kafka as a kind of special purpose distributed filesystem dedicated to high-performance, low-latency commit log storage, replication, and propagation.

For details about Kafka's commit log storage and replication design, please read [this](https://kafka.apache.org/documentation/#design) page.

Kafka for Stream Processing

It isn't enough to just read, write, and store streams of data; the purpose is to enable real-time processing of streams.

In Kafka a stream processor is anything that takes continual streams of data from input topics, performs some processing on this input, and produces continual streams of data to output topics.

For example, a retail application might take in input streams of sales and shipments, and output a stream of reorders and price adjustments computed off this data.
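
The retail example can be sketched as a tiny stream processor that consumes two input streams and emits events to an output stream. Several details are assumptions made for illustration:

- the topic names (`sales`, `shipments`, and a `reorders` output);
- the event shape;
- the threshold of 5 units.

In the Streams API the running stock level would live in a fault-tolerant state store rather than a Python dict.

```python
from typing import Dict, Iterable, Iterator, Tuple

REORDER_THRESHOLD = 5  # hypothetical business rule: reorder below 5 units
Event = Tuple[str, str, int]  # (input topic, SKU, quantity)


def reorder_processor(events: Iterable[Event]) -> Iterator[Tuple[str, int]]:
    """Consume sales and shipments; emit (sku, stock) when stock falls below the threshold."""
    stock: Dict[str, int] = {}  # running state, one entry per SKU
    for topic, sku, qty in events:
        before = stock.get(sku, 0)
        if topic == "shipments":
            stock[sku] = before + qty
        elif topic == "sales":
            stock[sku] = before - qty
            # Emit only when a sale crosses the threshold, not on every later sale.
            if before >= REORDER_THRESHOLD > stock[sku]:
                yield (sku, stock[sku])  # would be written to a "reorders" output topic


events = [("shipments", "sku-1", 10), ("sales", "sku-1", 3),
          ("sales", "sku-1", 4), ("sales", "sku-1", 1)]
print(list(reorder_processor(events)))  # → [('sku-1', 3)]
```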

It is possible to do simple processing directly using the producer and consumer APIs. However, for more complex transformations Kafka provides a fully integrated Streams API. This allows building applications that do non-trivial processing that *compute aggregations off of streams or join streams together*.
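
Joining a stream against a continuously updated table can be sketched in plain Python. This is a hypothetical illustration of the idea, not Streams API code. Each price update overwrites the latest value for its key, and each order is joined against the price known at that moment.

```python
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[str, str, float]  # (topic, sku, number): a price or an order quantity


def join_orders_with_prices(events: Iterable[Event]) -> Iterator[Tuple[str, float]]:
    """Stream-table join: match each order against the latest known price for its SKU."""
    latest_price: Dict[str, float] = {}  # the "table": last value seen per key
    for topic, sku, number in events:
        if topic == "prices":
            latest_price[sku] = number                 # update the table
        elif topic == "orders" and sku in latest_price:
            yield (sku, number * latest_price[sku])    # emit the joined result
        # orders for SKUs without a known price are dropped (inner-join semantics)


stream = [("orders", "sku-1", 2), ("prices", "sku-1", 9.5), ("orders", "sku-1", 2),
          ("prices", "sku-1", 8.0), ("orders", "sku-1", 1)]
print(list(join_orders_with_prices(stream)))  # → [('sku-1', 19.0), ('sku-1', 8.0)]
```

The first order is dropped because its price has not arrived yet. Handling such out-of-order data correctly is one of the hard problems the Streams API is meant to address.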

This facility helps solve the hard problems this type of application faces: handling out-of-order data, reprocessing input as code changes, performing stateful computations, etc.

The Streams API builds on the core *primitives* Kafka provides: it uses the producer and consumer APIs for input, uses Kafka for stateful storage, and uses the same group mechanism for fault tolerance among the stream processor instances.

Putting the Pieces Together

This combination of messaging, storage, and stream processing may seem unusual but it is essential to Kafka's role as a streaming platform.

A distributed file system like HDFS allows storing static files for batch processing. Effectively a system like this allows storing and processing historical data from the past.

A traditional enterprise messaging system allows processing future messages that will arrive after you subscribe. Applications built in this way process future data as it arrives.

Kafka combines both of these capabilities, and the combination is critical both for Kafka usage as a platform for streaming applications as well as for streaming data pipelines.

By combining storage and low-latency subscriptions, streaming applications can treat both past and future data the same way. That is, a single application can process historical, stored data, but rather than ending when it reaches the last record, it can keep processing as future data arrives. This is a generalized notion of stream processing that subsumes batch processing as well as message-driven applications.
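
The idea of treating past and future data the same way can be sketched as a single loop. It processes records from a saved offset up to the current end of the log and is simply called again when new records arrive. The list-backed log and the `process_from` helper are hypothetical; a real application would poll a consumer instead.

```python
from typing import Callable, List


def process_from(log: List[str], start_offset: int, handle: Callable[[str], None]) -> int:
    """Handle every record from start_offset to the current end; return the next offset.

    The same function replays history and later picks up newly arrived records,
    so stored and live data go through one code path.
    """
    offset = start_offset
    while offset < len(log):
        handle(log[offset])
        offset += 1
    return offset


log = ["sale-1", "sale-2"]                    # historical, stored data
seen: List[str] = []
position = process_from(log, 0, seen.append)  # first pass over the past
log.append("sale-3")                          # a new record arrives later
position = process_from(log, position, seen.append)  # continue without restarting
print(position, len(seen))  # → 3 3
```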

Likewise, for streaming data pipelines, the combination of subscription to real-time events makes it possible to use Kafka for very low-latency pipelines; but the ability to store data reliably makes it possible to use it for *critical data* where the delivery of data must be guaranteed, or for integration with offline systems that load data only periodically or may go down for extended periods of time for maintenance. The stream processing facilities make it possible to transform data as it arrives.

For more information on the guarantees, APIs, and capabilities Kafka provides, see the rest of the documentation.

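Translator's Notes

---

This is a rough translation; corrections are welcome so we can all improve together.

For more interesting content, visit [rebey.cn](http://rebey.cn).

Other Translations

---

[*Kafka Chinese Manual*: Quick Start](http://ifeve.com/kafka-getting-started/);

[Kafka Documentation (6): 0.10.1 Introduction, Basic Overview](http://blog.csdn.net/beitiandijun/article/details/53671269);

[Original English text](http://kafka.apache.org/intro);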