## The images on the soxs site are all broken, so this time we try Biquge (筆趣閣)

* **Write an item that passes parameters to the file download pipeline**

It needs two fields: the image URL and the file name.

The `items.py` file:

```
import scrapy


class ImgItem(scrapy.Item):
    src = scrapy.Field()       # image URL
    fileName = scrapy.Field()  # file name to save the image under
```

* **Get the image URL and the book title**

This part is simple, so I won't go into detail.

`spiders/xbiquge.py`

```
import re

import scrapy

from ..items import ImgItem


class xbiqugeSpider(scrapy.Spider):
    name = 'xbiquge'
    allowed_domains = ['www.xbiquge.la']  # only crawl sites listed here
    start_urls = ["https://www.xbiquge.la/"]  # URLs the crawl starts from

    def parse(self, response):  # called with the response once the spider starts
        books = response.xpath("//div[@id='hotcontent']/div/div")
        for book in books:
            url = book.xpath('div/a/img/@src').extract_first()
            name = book.xpath('div/a/img/@alt').extract_first()
            # Take the extension after the last dot of the URL, e.g. "jpg"
            ext = re.search(r"\.([a-zA-Z]+)$", url or "")
            if not name or ext is None:
                continue  # skip entries without a title or a usable image URL
            item = ImgItem()  # instantiate the item
            item['src'] = url
            item['fileName'] = name + "." + ext.group(1)
            print("entering the pipeline...")
            yield item  # hand the item to the pipeline for saving
```
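The file name logic in the spider can also be tried on its own, without running a crawl. The following is a minimal sketch, not the author's method: `build_file_name`, the `default_ext` fallback, and the `example.com` URLs are hypothetical names introduced for illustration. It uses `urllib.parse` so that a query string after the extension does not break the match.

```python
import os
import re
from urllib.parse import urlparse


def build_file_name(title, url, default_ext="jpg"):
    """Return '<title>.<ext>', taking the extension from the URL path."""
    path = urlparse(url).path  # drops any ?query or #fragment
    ext = os.path.splitext(path)[1].lstrip(".")
    if not re.fullmatch(r"[A-Za-z0-9]+", ext):
        ext = default_ext  # hypothetical fallback, not part of the original spider
    # Replace characters that are not allowed in file names on common systems
    safe_title = re.sub(r'[\\/:*?"<>|]', "_", title).strip()
    return f"{safe_title}.{ext}"


print(build_file_name("Book A", "https://example.com/covers/1.jpg?x=1"))  # Book A.jpg
print(build_file_name("a/b", "https://example.com/cover"))                # a_b.jpg
```

Inside `parse`, the result could be assigned to `item['fileName']` in place of the regular expression.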

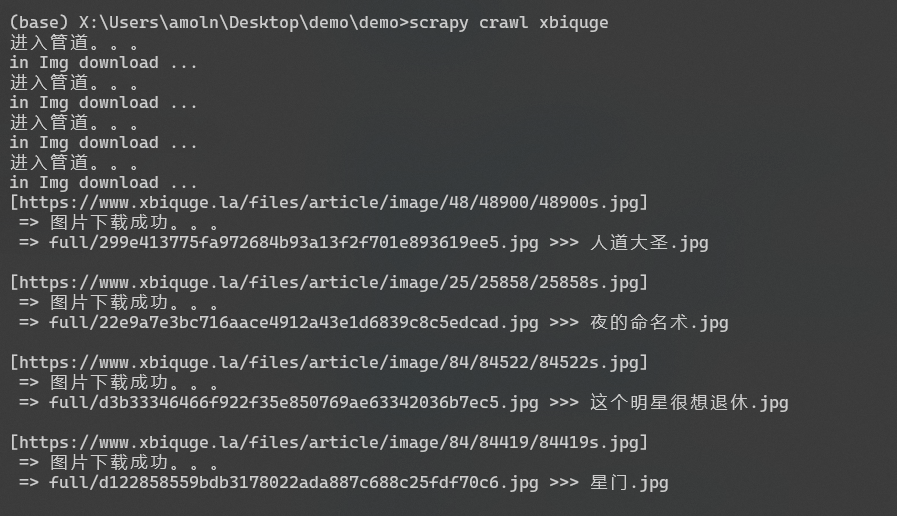

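* **Enable the pipeline**

Add the following configuration to `settings.py`.

Here `demo` is the project name and `ImgImagesPipeline` is the class name; change both to match your own project.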

```
ITEM_PIPELINES = {
    'demo.pipelines.ImgImagesPipeline': 300,
}
```
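Scrapy's `ImagesPipeline` stays disabled unless `IMAGES_STORE` is also set, and it needs Pillow installed. A minimal sketch of that extra setting, assuming `settings.py` sits in the same package directory as `pipelines.py`, so that the path resolves to the same `img` folder that `IMGFILEPATH` in the pipeline points at:

```python
import os

# Directory that ImagesPipeline writes downloaded images into.
# Assumption: settings.py is in the same directory as pipelines.py, so this
# is the same "img" folder that IMGFILEPATH in pipelines.py refers to.
IMAGES_STORE = os.path.join(os.path.dirname(os.path.abspath(__file__)), "img")
```

Downloaded files land in a `full/` subfolder of this directory, named after a hash of the image URL, which is why the pipeline renames them afterwards.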

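* **Write the pipeline**

The pipeline file follows a fixed pattern. Read it closely if you want to understand how it works; otherwise you can copy it and use it as is.

I wrote this class myself, so it may contain bugs.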

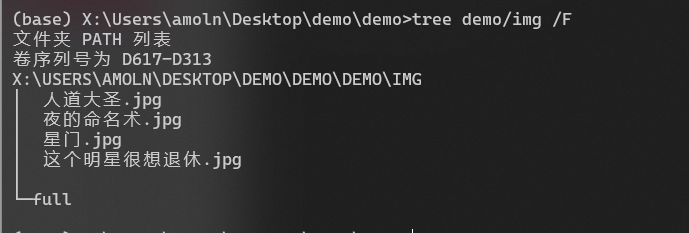

(Note: file names must be unique, otherwise an error is raised; I have not handled that detail.)

```
import os

import scrapy
from scrapy.pipelines.images import ImagesPipeline

# Directory the images are downloaded into. It must be the same directory as
# IMAGES_STORE in settings.py, otherwise the rename below never runs.
IMGFILEPATH = os.path.dirname(os.path.abspath(__file__)) + "/img/"


# Image download pipeline
class ImgImagesPipeline(ImagesPipeline):

    # Yield the download request for the item's image URL
    def get_media_requests(self, item, info):
        print("in Img download ...")
        yield scrapy.Request(url=item['src'])

    # Post-process the file once the download has finished
    def item_completed(self, results, item, info):
        # results is a list of (success, info) tuples, one per request
        if results and results[0][0]:
            image = results[0][1]
            print("[" + image['url'] + "] \n => image downloaded successfully...")
            print(" => " + image['path'] + " >>> " + item['fileName'] + " \n")
            # Check that the downloaded file exists
            if os.path.isfile(IMGFILEPATH + image['path']):
                # Rename it to the book title
                os.rename(IMGFILEPATH + image['path'], IMGFILEPATH + item['fileName'])
                # Custom code for processing the data can go here,
                # e.g. saving the file name and download status to a database
            return item  # return the item so later pipelines still receive it
        print("[" + item['src'] + "] \n => download failed (URL may be unreachable, e.g. 404) ... \n")
        return item
```
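The note before the pipeline says duplicate file names are not handled. With `os.rename`, an existing target raises an error on Windows and is silently overwritten on Linux and macOS. One possible way to avoid both, as a sketch under my own assumptions: `unique_path` is a hypothetical helper, not part of Scrapy or of the original code, that appends a counter until the name is free.

```python
import os
import tempfile


def unique_path(directory, file_name):
    """Return a path inside `directory` that does not exist yet.

    If `file_name` is taken, try `name_1.ext`, `name_2.ext`, and so on.
    """
    stem, ext = os.path.splitext(file_name)
    candidate = os.path.join(directory, file_name)
    counter = 1
    while os.path.exists(candidate):
        candidate = os.path.join(directory, f"{stem}_{counter}{ext}")
        counter += 1
    return candidate


# Small demonstration in a throwaway directory
with tempfile.TemporaryDirectory() as tmp:
    first = unique_path(tmp, "book.jpg")
    open(first, "w").close()  # pretend the first image was already saved
    second = unique_path(tmp, "book.jpg")
    print(os.path.basename(first), os.path.basename(second))  # book.jpg book_1.jpg
```

In the pipeline, the rename target would then become `unique_path(IMGFILEPATH, item['fileName'])` instead of `IMGFILEPATH + item['fileName']`.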