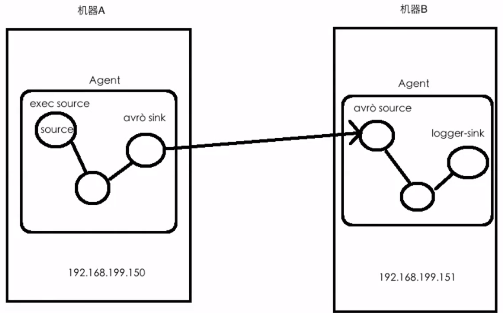

* Technology selection

* Modify the configuration files, starting with machine A.

* In `exec-memory-avro`, the agent, source, sink, and channel have all been renamed.

```
# exec-memory-avro.conf: agent A, tails a log file and forwards events over Avro

# Name the components on this agent
exec-memory-avro.sources = exec-source
exec-memory-avro.sinks = avro-sink
exec-memory-avro.channels = memory-channel

# Describe/configure the source
exec-memory-avro.sources.exec-source.type = exec
# Watch the file for newly appended lines
exec-memory-avro.sources.exec-source.command = tail -F /home/bizzbee/data/data.log
exec-memory-avro.sources.exec-source.shell = /bin/sh -c

# Describe the sink
exec-memory-avro.sinks.avro-sink.type = avro
# Send to this machine (host "spark"); another machine's hostname works too
exec-memory-avro.sinks.avro-sink.hostname = spark
# Port 44444 on that host
exec-memory-avro.sinks.avro-sink.port = 44444

# Use a channel which buffers events in memory
exec-memory-avro.channels.memory-channel.type = memory

# Bind the source and sink to the channel
exec-memory-avro.sources.exec-source.channels = memory-channel
exec-memory-avro.sinks.avro-sink.channel = memory-channel
```

* Create a second configuration file, `avro-memory-logger.conf`, for the Flume agent on machine B (in this experiment, B is actually the same local machine).

```
# Name the components on this agent
avro-memory-logger.sources = avro-source
avro-memory-logger.sinks = logger-sink
avro-memory-logger.channels = memory-channel

# Describe/configure the source
avro-memory-logger.sources.avro-source.type = avro
avro-memory-logger.sources.avro-source.bind = spark
avro-memory-logger.sources.avro-source.port = 44444

# Describe the sink
avro-memory-logger.sinks.logger-sink.type = logger

# Use a channel which buffers events in memory
avro-memory-logger.channels.memory-channel.type = memory

# Bind the source and sink to the channel
avro-memory-logger.sources.avro-source.channels = memory-channel
avro-memory-logger.sinks.logger-sink.channel = memory-channel
```

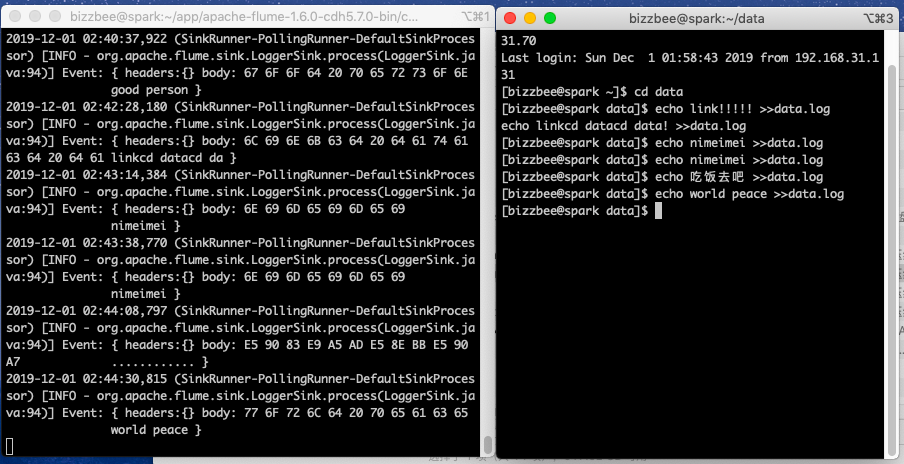

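* Startup: start the Flume agent on machine B first, so that its Avro source is already listening on port 44444 when A's Avro sink tries to connect.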

```
flume-ng agent --name avro-memory-logger --conf $FLUME_HOME/conf --conf-file $FLUME_HOME/conf/avro-memory-logger.conf -Dflume.root.logger=INFO,console
```
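Before starting A, B's Avro source can optionally be checked on its own. The following is a minimal sketch, assuming `flume-ng` is on the `PATH` and using Flume's bundled `avro-client` mode to send the lines of a file as events. The helper name `send_test_file` and the sample file path in the usage line are illustrative and not part of the original setup.

```shell
# Send every line of a file as an Avro event to a running Avro source.
# $1 = host of the Avro source, $2 = its port, $3 = file to send.
send_test_file() {
    host=$1
    port=$2
    file=$3
    flume-ng avro-client -H "$host" -p "$port" -F "$file"
}
```

For example, `send_test_file spark 44444 /tmp/sample.log` should make the lines of `/tmp/sample.log` (a hypothetical file) show up in B's console through the logger sink.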

* Then start the agent on machine A.

```
flume-ng agent --name exec-memory-avro --conf $FLUME_HOME/conf --conf-file $FLUME_HOME/conf/exec-memory-avro.conf -Dflume.root.logger=INFO,console
```

* Then, as before, append content to `data.log`; the new lines show up in B's console output.
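One way to generate input for this last step is a small shell helper that appends numbered lines to the tailed file. This is only a sketch: the function name `append_test_lines` and the `test event N` message format are made up for illustration, while the log path in the usage line is the one configured for the exec source on A.

```shell
# Append a number of test lines to a log file, pausing between writes
# so the events arrive one at a time.
# $1 = log file, $2 = number of lines, $3 = delay in seconds (default 1).
append_test_lines() {
    log_file=$1
    count=$2
    delay=${3:-1}
    i=1
    while [ "$i" -le "$count" ]; do
        printf 'test event %s\n' "$i" >> "$log_file"
        sleep "$delay"
        i=$((i + 1))
    done
}
```

Running `append_test_lines /home/bizzbee/data/data.log 5` writes five lines, one per second. Because A's source runs `tail -F` on that file, each appended line should be forwarded over Avro and logged by B.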