## Scala

* Download the package

```

wget https://downloads.lightbend.com/scala/2.11.8/scala-2.11.8.tgz

```
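
The notes stop at the download step for Scala. Below is a minimal sketch of the extract step, assuming the same `~/app` layout used for Maven and HBase in the following sections. The helper name `extract_to` is hypothetical, not part of any tool.

```shell
# Extract a .tgz/.tar.gz archive into a target directory and print the
# top-level directory it created (handy as the value of a *_HOME variable).
# The body runs in a subshell so its variables do not leak into the caller.
extract_to() (
  archive="$1"
  dest="$2"
  mkdir -p "$dest" || exit 1
  tar -zxf "$archive" -C "$dest" || exit 1
  # The first path component of the first archive entry is the top-level directory.
  top=$(tar -ztf "$archive" | awk -F/ 'NR == 1 { print $1 }')
  printf '%s/%s\n' "$dest" "$top"
)

# Example (assumes the archive is in the current directory):
#   SCALA_HOME=$(extract_to scala-2.11.8.tgz ~/app)
#   export SCALA_HOME PATH="$SCALA_HOME/bin:$PATH"
```

Printing the extracted path avoids hard-coding the directory name in the later `export` lines.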

## Maven

* Extract

```

tar -zxvf apache-maven-3.6.0-bin.tar.gz -C ~/app/

```

* Add environment variables

```

export MAVEN_HOME=/home/bizzbee/app/apache-maven-3.6.0

export PATH=$MAVEN_HOME/bin:$PATH

```
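
The exports above only last for the current shell unless they are written to a profile file. Here is a small sketch for appending them without creating duplicates on repeated runs. The helper name `add_line_once` is hypothetical, and which profile file to use (`~/.bash_profile`, `~/.bashrc`) depends on your shell setup.

```shell
# Append a line to a file only if that exact line is not already present,
# so re-running the setup does not pile up duplicate PATH entries.
add_line_once() (
  file="$1"
  line="$2"
  touch "$file"
  # -x: match whole line, -F: treat the line as a fixed string, not a regex
  grep -qxF -e "$line" "$file" || printf '%s\n' "$line" >> "$file"
)

# Example (profile path is an assumption):
#   add_line_once ~/.bash_profile 'export MAVEN_HOME=/home/bizzbee/app/apache-maven-3.6.0'
#   add_line_once ~/.bash_profile 'export PATH=$MAVEN_HOME/bin:$PATH'
```

After reloading the profile, `mvn -v` should print the Maven version if the path is correct.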

* Aliyun mirror (goes inside the `<mirrors>` element of Maven's `settings.xml`)

```

<mirror>

<id>nexus-aliyun</id>

<mirrorOf>central</mirrorOf>

<name>Nexus aliyun</name>

<url>http://maven.aliyun.com/nexus/content/groups/public</url>

</mirror>

```
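
For context, the mirror entry has to sit inside `<settings><mirrors>`. The sketch below writes a minimal `settings.xml` containing only that mirror. The function name `write_mirror_settings` is hypothetical, and it overwrites the target file, so back up any existing settings first.

```shell
# Write a minimal Maven settings.xml that contains only the Aliyun mirror.
# WARNING: overwrites the target file.
write_mirror_settings() (
  target="$1"
  mkdir -p "$(dirname "$target")" || exit 1
  cat > "$target" <<'EOF'
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0">
  <mirrors>
    <mirror>
      <id>nexus-aliyun</id>
      <mirrorOf>central</mirrorOf>
      <name>Nexus aliyun</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public</url>
    </mirror>
  </mirrors>
</settings>
EOF
)

# Example: per-user settings live in ~/.m2/settings.xml
#   write_mirror_settings ~/.m2/settings.xml
```

If a `settings.xml` already exists, it is safer to paste the `<mirror>` element into its `<mirrors>` section by hand.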

## HBase

* Download

```

wget https://mirrors.tuna.tsinghua.edu.cn/apache/hbase/hbase-1.3.6/hbase-1.3.6-bin.tar.gz

```

* Extract and set environment variables

```

tar -zxvf hbase-1.3.6-bin.tar.gz -C ~/app/

export HBASE_HOME=/home/bizzbee/app/hbase-1.3.6

export PATH=$HBASE_HOME/bin:$PATH

```

* Configuration

* hbase-env.sh

```bash

# The java implementation to use. Java 1.7+ required.

# export JAVA_HOME=/usr/java/jdk1.6.0/

export JAVA_HOME=/home/bizzbee/app/jdk1.8.0_221

# Use our own ZooKeeper instead of the one bundled with HBase.

# Tell HBase whether it should manage it's own instance of Zookeeper or not.

export HBASE_MANAGES_ZK=false

```

* hbase-site.xml (remember to add the hostnames to `/etc/hosts`)

```

<configuration>

<!-- Same HDFS address as in Hadoop's core-site.xml -->

<property>

<name>hbase.rootdir</name>

<value>hdfs://bizzbee:8020/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!-- ZooKeeper address -->

<property>

<name>hbase.zookeeper.quorum</name>

<value>spark:2181</value>

</property>

</configuration>

```
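
The configuration above refers to the hostnames `bizzbee` and `spark`, so both need to resolve on this machine. Here is a rough sketch for checking a hosts file. The helper name `has_host` is hypothetical, and the check only looks at the file, not at DNS.

```shell
# Return 0 if hostname $1 is listed as a name in hosts file $2 (default /etc/hosts).
has_host() {
  awk -v h="$1" '
    /^[[:space:]]*#/ { next }            # skip full-line comments
    {
      for (i = 2; i <= NF; i++) {        # field 1 is the IP address
        if ($i ~ /^#/) break             # stop at a trailing comment
        if ($i == h) found = 1
      }
    }
    END { exit !found }
  ' "${2:-/etc/hosts}"
}

# Report any hostname used in hbase-site.xml that is missing from /etc/hosts.
for h in bizzbee spark; do
  has_host "$h" || echo "missing from /etc/hosts: $h"
done
```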

* Start ZooKeeper

```

[bizzbee@spark zookeeper-3.4.5-cdh5.7.0]$ bin/zkServer.sh start

```

* Start HBase

```

[bizzbee@spark bin]$ ./start-hbase.sh

[bizzbee@spark bin]$ jps

19378 HMaster

19236 QuorumPeerMain

20157 Jps

19806 HRegionServer

```
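
The `jps` listing above can also be checked by script instead of by eye. This sketch reads `jps` output on standard input and prints any expected daemon that is missing. The function name `missing_daemons` is hypothetical, and the expected list assumes ZooKeeper, HMaster and HRegionServer all run on this one host, as in these notes.

```shell
# Read `jps` output ("<pid> <main class>") on stdin and print each expected
# daemon that does not appear in it. Prints nothing when all are running.
missing_daemons() {
  awk -v want="HMaster HRegionServer QuorumPeerMain" '
    { seen[$2] = 1 }
    END {
      n = split(want, w, " ")
      for (i = 1; i <= n; i++)
        if (!(w[i] in seen)) print w[i]
    }
  '
}

# Example:
#   jps | missing_daemons
```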

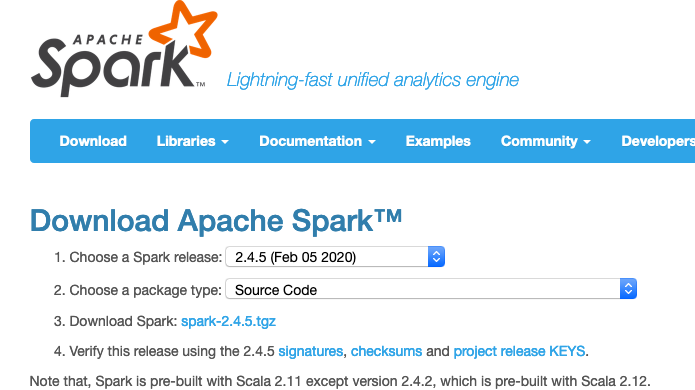

## Spark installation

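* Spark has to be built against the specific Hadoop (HDFS) version in use, so it is best to download the source package from the official site and compile it yourself.

* To save time, I did not compile it myself and used the prebuilt package from the article below, which also describes the build method.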

[Build method](https://blog.csdn.net/u013385925/article/details/81290744)
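
For readers who do want to build from source, the following sketch assembles a build command for Spark 2.x's `dev/make-distribution.sh`. The Hadoop version comes from elsewhere in these notes. The choice of profiles (`-Pyarn`, `-Phadoop-2.6`, `-Phive`, `-Phive-thriftserver`) is an assumption and may not match what the linked article uses. Resolving CDH artifacts may also require adding the Cloudera repository to Spark's own `pom.xml`.

```shell
# Hadoop version used elsewhere in these notes (CDH 5.7.0).
HADOOP_VERSION=2.6.0-cdh5.7.0

# Assemble the command; run the printed line from the root of the Spark source tree.
# --name sets the suffix of the generated tarball, --tgz produces a .tgz package.
BUILD_CMD="./dev/make-distribution.sh --name $HADOOP_VERSION --tgz -Pyarn -Phadoop-2.6 -Phive -Phive-thriftserver -Dhadoop.version=$HADOOP_VERSION"
echo "$BUILD_CMD"
```

Printing the command first makes it easy to review the flags before starting a build that can take a long time.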

* Extract

* Configure environment variables

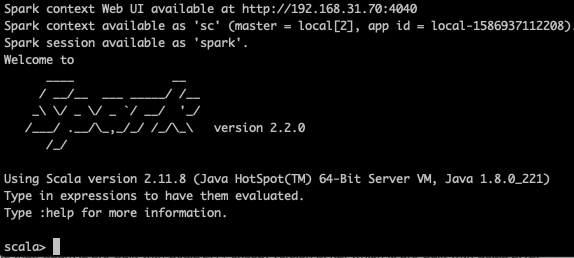

* Start

```

[bizzbee@spark bin]$ ./spark-shell --master local[2]

```

## Spark development environment setup

* pom.xml

~~~

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.bizzbee.spark</groupId>

<artifactId>spark-train</artifactId>

<version>1.0</version>

<inceptionYear>2008</inceptionYear>

<properties>

<scala.version>2.11.8</scala.version>

<kafka.version>0.9.0.0</kafka.version>

<spark.version>2.2.0</spark.version>

<hadoop.version>2.6.0-cdh5.7.0</hadoop.version>

<hbase.version>1.2.0-cdh5.7.0</hbase.version>

</properties>

<!-- Add the Cloudera repository -->

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

</dependency>

<!-- Kafka dependency -->

<!--

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka_2.11</artifactId>

<version>${kafka.version}</version>

</dependency>

-->

<!-- Hadoop dependency -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!-- HBase dependencies -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>${hbase.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>${hbase.version}</version>

</dependency>

<!-- Spark Streaming dependency -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<!-- Spark Streaming and Flume integration dependencies -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-flume_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-flume-sink_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-kafka-0-8_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.5</version>

</dependency>

<!-- Spark SQL dependency -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.module</groupId>

<artifactId>jackson-module-scala_2.11</artifactId>

<version>2.6.5</version>

</dependency>

<dependency>

<groupId>net.jpountz.lz4</groupId>

<artifactId>lz4</artifactId>

<version>1.3.0</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.38</version>

</dependency>

<dependency>

<groupId>org.apache.flume.flume-ng-clients</groupId>

<artifactId>flume-ng-log4jappender</artifactId>

<version>1.6.0</version>

</dependency>

<dependency>

<!-- groupId: the package path of the project the jar belongs to -->

<groupId>org.apache.hadoop</groupId>

<!-- artifactId: the name of the jar -->

<artifactId>hadoop-hdfs</artifactId>

<!-- version: the version of the jar -->

<version>2.6.0-cdh5.7.0</version>

</dependency>

</dependencies>

<build>

<!--

<sourceDirectory>src/main/scala</sourceDirectory>

<testSourceDirectory>src/test/scala</testSourceDirectory>

-->

<plugins>

<plugin>

<groupId>org.scala-tools</groupId>

<artifactId>maven-scala-plugin</artifactId>

<executions>

<execution>

<goals>

<goal>compile</goal>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

<configuration>

<scalaVersion>${scala.version}</scalaVersion>

<args>

<arg>-target:jvm-1.5</arg>

</args>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-eclipse-plugin</artifactId>

<configuration>

<downloadSources>true</downloadSources>

<buildcommands>

<buildcommand>ch.epfl.lamp.sdt.core.scalabuilder</buildcommand>

</buildcommands>

<additionalProjectnatures>

<projectnature>ch.epfl.lamp.sdt.core.scalanature</projectnature>

</additionalProjectnatures>

<classpathContainers>

<classpathContainer>org.eclipse.jdt.launching.JRE_CONTAINER</classpathContainer>

<classpathContainer>ch.epfl.lamp.sdt.launching.SCALA_CONTAINER</classpathContainer>

</classpathContainers>

</configuration>

</plugin>

</plugins>

</build>

<reporting>

<plugins>

<plugin>

<groupId>org.scala-tools</groupId>

<artifactId>maven-scala-plugin</artifactId>

<configuration>

<scalaVersion>${scala.version}</scalaVersion>

</configuration>

</plugin>

</plugins>

</reporting>

</project>

~~~

* The hadoop-hdfs artifact kept failing to download, so I downloaded it manually and added it.

* The method is as follows:

[Manually adding a jar to Maven](https://blog.csdn.net/macwx/article/details/96268231)
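
One common way to do this is Maven's `install:install-file` goal, sketched below. This may differ from the steps in the linked article. The wrapper name `install_local_jar` is hypothetical, and the jar path in the example is a placeholder for wherever the file was downloaded.

```shell
# Install a manually downloaded jar into the local Maven repository
# (~/.m2/repository) under the given coordinates, so that the matching
# <dependency> in pom.xml resolves without a remote download.
# Arguments: <jar file> <groupId> <artifactId> <version>
install_local_jar() {
  mvn install:install-file \
    "-Dfile=$1" \
    "-DgroupId=$2" \
    "-DartifactId=$3" \
    "-Dversion=$4" \
    -Dpackaging=jar
}

# Example (coordinates match the hadoop-hdfs dependency in the pom.xml above):
#   install_local_jar ./hadoop-hdfs-2.6.0-cdh5.7.0.jar org.apache.hadoop hadoop-hdfs 2.6.0-cdh5.7.0
```

The coordinates passed here must match the `<groupId>`, `<artifactId>` and `<version>` of the dependency exactly, otherwise Maven will still try to fetch it remotely.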