*****

之前的代碼中,我們有很大一部分時間在尋找下一頁的URL地址或者內容的URL地址上面,這個過程能更簡單一些嗎?

思路:

1.從response中提取所有的a標簽對應的URL地址

2.自動的構造自己resquests請求,發送給引擎

URL地址:`http://www.circ.gov.cn/web/site0/tab5240`

目標:通過爬蟲了解crawlspider的使用

生成crawlspider的命令:`scrapy genspider -t crawl cf cbrc.gov.cn`

```

# -*- coding: utf-8 -*-

import scrapy

from scrapy.linkextractors import LinkExtractor

from scrapy.spiders import CrawlSpider, Rule

class YgSpider(CrawlSpider):

name = 'yg'

allowed_domains = ['sun0769.com']

start_urls = ['http://wz.sun0769.com/index.php/question/questionType?type=4&page=0']

rules = (

# LinkExtractor 連接提取器,提取URL地址

# callback 提取出來的URL地址的response會交給callback處理

# follow 當前URL地址的響應是夠重新來rules來提取URL地址

Rule(LinkExtractor(allow=r'wz.sun0769.com/html/question/201811/\d+\.shtml'), callback='parse_item'),

Rule(LinkExtractor(allow=r'http:\/\/wz.sun0769.com/index.php/question/questionType\?type=4&page=\d+'), follow=True),

)

def parse_item(self, response):

item = {}

item['content'] = response.xpath('//div[@class="c1 text14_2"]//text()').extract()

print(item)

```

**注意點**

1.用命令創建一個crawlspider的模板:scrapy genspider -t crawl <爬蟲名字> <all_domain>,也可以手動創建

2.CrawlSpider中不能再有以parse為名字的數據提取方法,這個方法被CrawlSpider用來實現基礎URL提取等功能

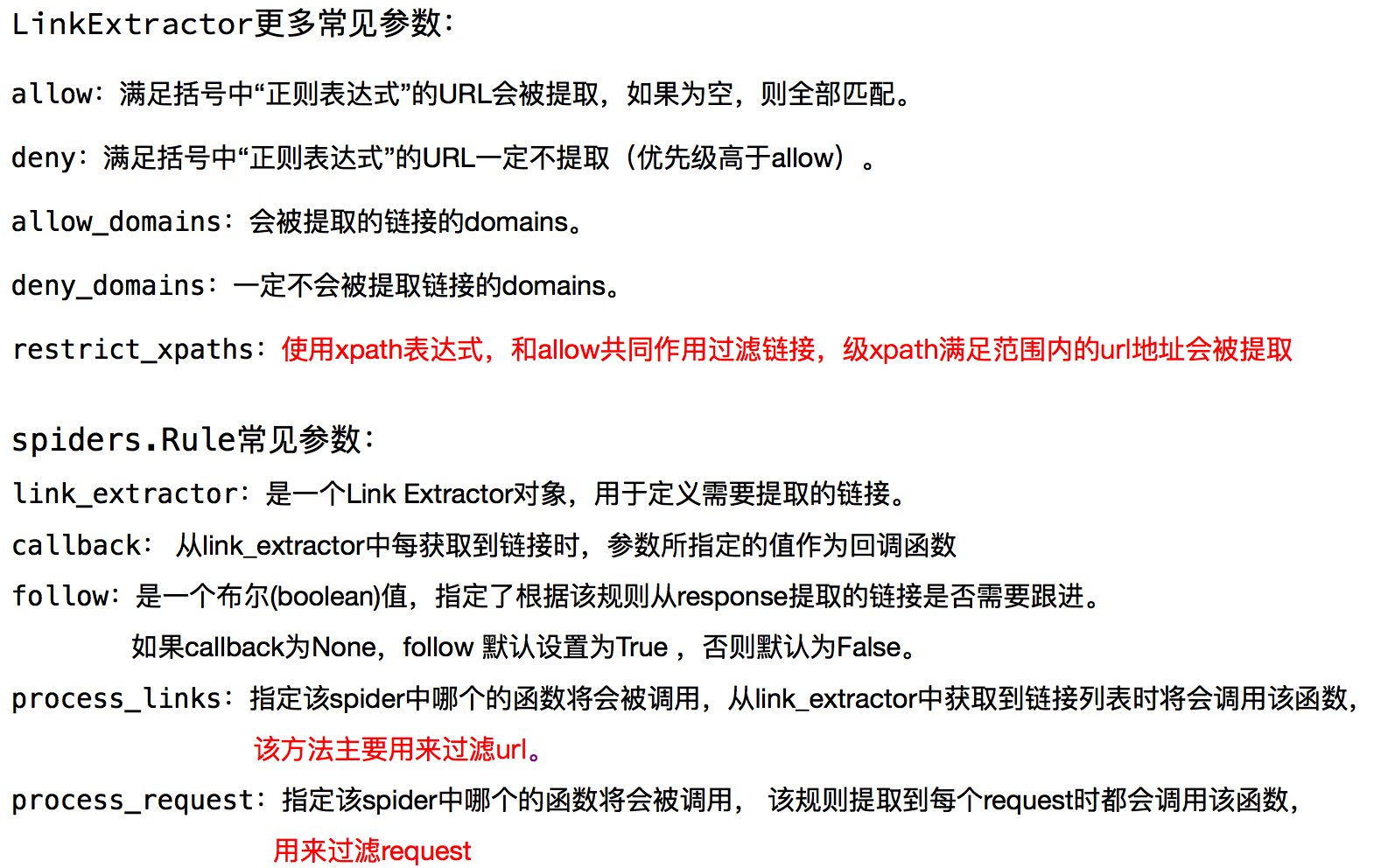

3.一個Rule對象接受很多參數,首先第一個是包含URL規則的LinkExtractor對象,常用的還有callback和follow

- callback:連接提取器提取出來的URL地址對應的響應交給他處理

- follow:連接提取器提取出來的URL地址對應的響應是否繼續被rules來過濾

4.不指定callback函數的請求下,如果follow為True,滿足該rule的URL還會繼續被請求

5.如果多個Rule都滿足某一個URL,會從rules中選擇第一個滿足的進行操作

## CrawlSpider補充(了解)