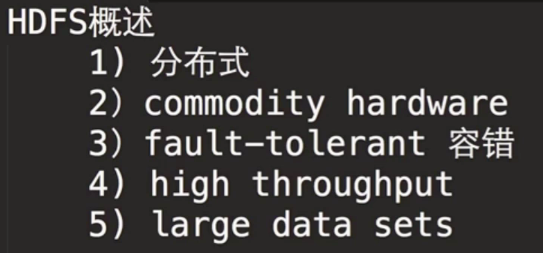

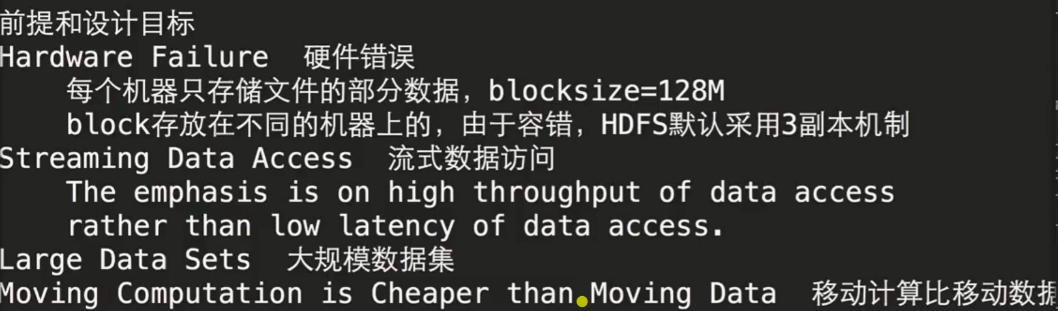

## Overview

> Moving computation is cheaper than moving data.

## HDFS Architecture

1. NameNode(master) and DataNodes(slave)

2. A master/slave architecture

3. NN (NameNode):

> the file system namespace

> /home/hadoop/software

> /app

> regulates access to files by clients

4. DN (DataNode): storage

5. HDFS exposes a file system namespace and allows user data to be stored in files.

6. a file is split into one or more blocks

> blocksize: 128M

> a 150M file is split into 2 blocks

7. blocks are stored in a set of DataNodes

Why? Fault tolerance!

8. NameNode executes file system namespace operations: CRUD

9. determines the mapping of blocks to DataNodes

> a.txt 150M blocksize=128M

> a.txt is split into 2 blocks: block1 is 128M and block2 is 22M

> Which DN stores block1? Which DN stores block2?

>

> a.txt

> block1:128M, 192.168.199.1

> block2:22M, 192.168.199.2

>

> get a.txt

>

> This whole process is transparent to the user

10. Typically, one component is deployed per node
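The a.txt example above (a 150M file with a 128M block size) can be sketched as a small shell function. This only illustrates the arithmetic and is not how HDFS itself is implemented; the name `split_blocks` is made up for this note.

```shell
# Print the block sizes (in MB) that a file of the given size is split into.
# split_blocks is a hypothetical helper, for illustration only.
split_blocks() {
  local size blocksize out
  size=$1
  blocksize=${2:-128}
  out=""
  # every full block takes exactly blocksize
  while [ "$size" -gt "$blocksize" ]; do
    out="${out:+$out }$blocksize"
    size=$((size - blocksize))
  done
  # the last block holds the remainder and may be smaller than blocksize
  if [ "$size" -gt 0 ]; then
    out="${out:+$out }$size"
  fi
  echo "$out"
}

split_blocks 150 128   # prints: 128 22
```

The NameNode then only has to remember, for each file, the list of blocks and which DataNode holds each one.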

## Development Environment: Linux

> First, create a few directories

```

mkdir software app data lib shell maven_resp

```

**Very important: change the Linux hostname to one without underscores, otherwise Hadoop will run into problems.**
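A quick way to check the current hostname for underscores is sketched below. The function name `hostname_ok` is made up for this note; `uname -n` is used to read the hostname.

```shell
# Succeed (exit 0) if the given hostname contains no underscore.
# hostname_ok is a hypothetical helper, for illustration only.
hostname_ok() {
  case "$1" in
    *_*) return 1 ;;
    *)   return 0 ;;
  esac
}

if hostname_ok "$(uname -n)"; then
  echo "hostname is fine"
else
  echo "hostname contains an underscore; rename it before installing Hadoop"
fi
```

On systemd-based distributions the hostname can usually be changed with `sudo hostnamectl set-hostname hadoop000` (hadoop000 being the hostname used in these notes); `/etc/hosts` may also need a matching entry.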

### Setting Up the Hadoop Environment

* Hadoop distribution used: CDH

* CDH package download URL: http://archive.cloudera.com/cdh5/cdh/5/

* Hadoop version: hadoop-2.6.0-cdh5.15.1

* Hadoop download: `wget http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.15.1.tar.gz`

* Hive version: hive-1.1.0-cdh5.15.1

### Hadoop Installation Prerequisites

Java 1.8+

ssh

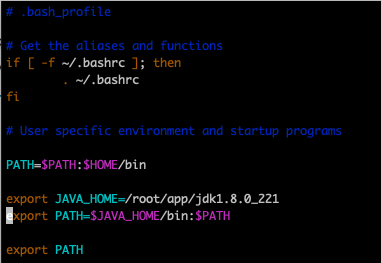

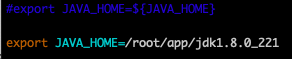

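### Installing Java

* Extract the installation package.

* Register the environment variables.

* Make the environment variables take effect.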
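The "register the environment variables" step can be sketched as follows. The notes do not say which JDK archive or install path was used, so the helper name `register_java_home` and both of its arguments are assumptions; substitute your own paths.

```shell
# Append JAVA_HOME and PATH exports to a profile file unless already present.
# register_java_home is a hypothetical helper; the JDK path is whatever
# directory the archive was extracted to.
register_java_home() {
  local java_home profile
  java_home=$1
  profile=${2:-$HOME/.bash_profile}
  if grep -q '^export JAVA_HOME=' "$profile" 2>/dev/null; then
    return 0   # already registered, leave the file untouched
  fi
  {
    printf 'export JAVA_HOME=%s\n' "$java_home"
    printf '%s\n' 'export PATH=$JAVA_HOME/bin:$PATH'
  } >> "$profile"
}
```

After calling it with the extracted JDK directory, run `source ~/.bash_profile` so the variables take effect, then confirm with `java -version`.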

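### Configuring Passwordless Login

> If the home directory (`~`) has no `.ssh` folder, run `ssh localhost` once to access the local machine and the folder will be created automatically.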

```

ssh-keygen -t rsa    # press Enter at every prompt

```

```

cd ~/.ssh

[hadoop@hadoop000 .ssh]$ ll

total 12

-rw------- 1 hadoop hadoop 1679 Oct 15 02:54 id_rsa        # private key

-rw-r--r-- 1 hadoop hadoop 398 Oct 15 02:54 id_rsa.pub     # public key

-rw-r--r-- 1 hadoop hadoop 358 Oct 15 02:54 known_hosts

cat id_rsa.pub >> authorized_keys

chmod 600 authorized_keys

```

* Logging in to the local machine now requires no password. Connecting from a Mac still requires one.

```

ssh root@139.155.58.151

```
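If `ssh localhost` still asks for a password after the steps above, file permissions are a common culprit. The sketch below checks for the conventional restrictive modes (700 on `.ssh`, 600 on `authorized_keys`); sshd may accept somewhat looser modes, so treat this as a conservative check. The name `check_ssh_perms` is made up, and `stat -c` is the GNU coreutils form found on Linux.

```shell
# Report whether a .ssh directory uses the conventional restrictive modes.
# check_ssh_perms is a hypothetical helper, for illustration only.
check_ssh_perms() {
  local dir
  dir=${1:-$HOME/.ssh}
  if [ "$(stat -c '%a' "$dir" 2>/dev/null)" != "700" ]; then
    echo "$dir should be mode 700"
    return 1
  fi
  if [ "$(stat -c '%a' "$dir/authorized_keys" 2>/dev/null)" != "600" ]; then
    echo "$dir/authorized_keys should be mode 600"
    return 1
  fi
  echo "ok"
}
```

Running `check_ssh_perms` with no argument inspects `~/.ssh`. To test the login itself without an interactive prompt, `ssh -o BatchMode=yes localhost true` fails immediately when key-based login is not working.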

## Installing Hadoop

* Extract to: ~/app

```

tar -zxvf hadoop-2.6.0-cdh5.15.1.tar.gz.1 -C ~/app/

```

* Add HADOOP_HOME/bin to the system environment variables

```

vim ~/.bash_profile

```

```

export HADOOP_HOME=/root/app/hadoop-2.6.0-cdh5.15.1

export PATH=$HADOOP_HOME/bin:$PATH

```

```

source ~/.bash_profile

```

* Edit the Hadoop configuration files, located in: `/root/app/hadoop-2.6.0-cdh5.15.1/etc/hadoop`

```

vim hadoop-env.sh

```

```

# Configure the host

core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://127.0.0.1:8020</value>

</property>

# Change the replication factor to 1

hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

# Change the default temp directory to the newly created folder

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/app/tmp</value>

</property>

```
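To double-check what was written into the configuration files above, a small helper can read a property back out. This is a minimal sketch: `get_property` is a made-up name, and it assumes `<name>` and `<value>` sit on separate lines, as in the snippets above.

```shell
# Print the <value> of a named property from a Hadoop *-site.xml file.
# get_property is a hypothetical helper; it assumes one tag per line.
get_property() {
  awk -v key="$1" '
    /<name>/ {
      # strip everything around the property name, then compare it
      gsub(/.*<name>|<\/name>.*/, "")
      found = ($0 == key)
      next
    }
    found && /<value>/ {
      # strip the tags and print the value of the matching property
      gsub(/.*<value>|<\/value>.*/, "")
      print
      exit
    }
  ' "$2"
}
```

For example, `get_property fs.defaultFS "$HADOOP_HOME/etc/hadoop/core-site.xml"` should print the configured address. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop actually resolves.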

* `slaves` file content: `hadoop000`

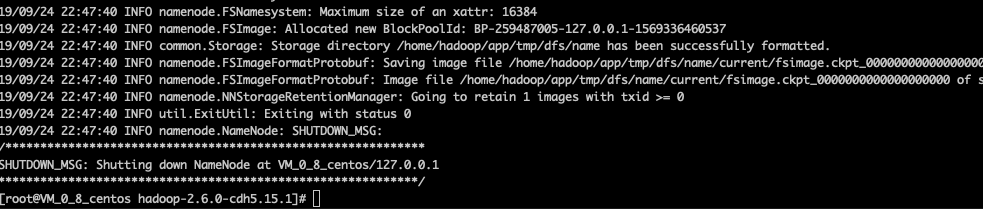

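* Start HDFS:

* Before the very first start you must format the file system, and do not repeat it afterwards: `hdfs namenode -format`

* This generates some content in the configured tmp directory.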

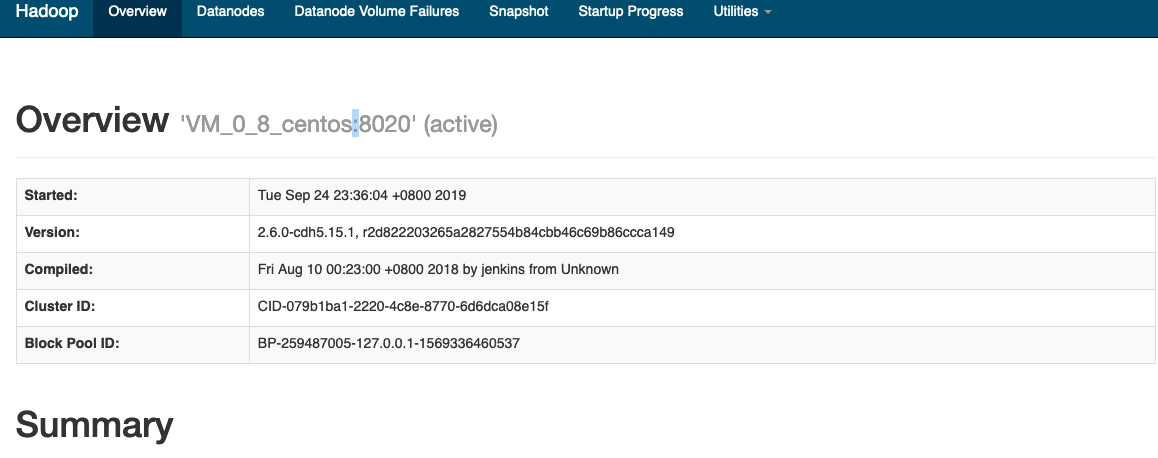

* Start the cluster: `$HADOOP_HOME/sbin/start-dfs.sh`

* Verify:

```

[hadoop@hadoop000 sbin]$ jps

60002 DataNode

60171 SecondaryNameNode

59870 NameNode

```
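The jps check above can be automated with a small filter that reads jps output and lists any of the three expected daemons that are missing. The name `missing_daemons` is made up for this note.

```shell
# Read jps output on stdin and print each expected HDFS daemon that is absent.
# missing_daemons is a hypothetical helper, for illustration only.
missing_daemons() {
  local out d
  out=$(cat)
  for d in NameNode DataNode SecondaryNameNode; do
    # compare the second column exactly, so SecondaryNameNode
    # is not mistaken for NameNode
    printf '%s\n' "$out" |
      awk -v d="$d" '$2 == d { ok = 1 } END { exit !ok }' || echo "$d"
  done
}
```

Usage: `jps | missing_daemons`. No output means all three processes are running.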

http://139.155.58.151:50070

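If jps looks fine but the page does not load in the browser, the firewall is the most likely cause.

Check the firewall status: `sudo firewall-cmd --state`

Stop the firewall: `sudo systemctl stop firewalld.service`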

### Common Directories in the Hadoop Package

```

bin: Hadoop client commands

etc/hadoop: directory holding the Hadoop configuration files

sbin: scripts that start Hadoop-related processes

share: common examples

```

### Stopping HDFS

* Relationship between start/stop-dfs.sh and hadoop-daemons.sh

```

start-dfs.sh =

hadoop-daemons.sh start namenode

hadoop-daemons.sh start datanode

hadoop-daemons.sh start secondarynamenode

stop-dfs.sh =

....

```

> Video course progress: up to 3-10

[Hostname renaming issue](https://blog.csdn.net/snowlive/article/details/69662882#hadoop%E9%97%AE%E9%A2%98%E8%A7%A3%E5%86%B3%E4%B8%BB%E6%9C%BA%E5%90%8D%E6%9B%B4%E6%94%B9)