## 創建外部表

```sql

create external table track_info(

ip string,

country string,

province string,

city string,

url string,

time string,

page string

) partitioned by (day string)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

location '/project/trackinfo/';

```

> location后面的路徑指的是hdfs上面的路徑。

* 創建數據輸入文件夾

```

hdfs dfs -mkdir -p /project/input/raw/

```

* 數據上傳到hdfs

```

hdfs dfs -put track.data /project/input/raw/

```

* 現在要用之前的項目中ETLApp類進行數據清洗。

* 先把類中固定的地址設置為運行參數

~~~

Path outputPath = new Path(args[1]);

FileInputFormat.setInputPaths(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

~~~

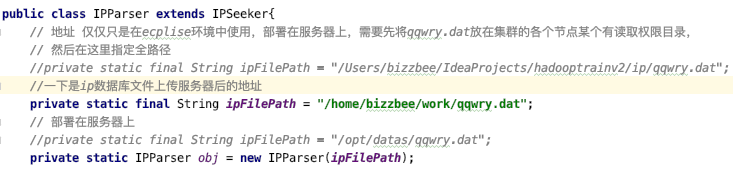

* 還有一個地方,由于使用了ip解析庫,所以先要把ip庫文件上傳,然后更改代碼里面的地址。

* 然后打包,注意不要使用idea命令行,會提示找不到mvn。

```

wangyijiadeMacBook-Air:~ bizzbee$ cd /Users/bizzbee/IdeaProjects/hadooptrainv2

wangyijiadeMacBook-Air:hadooptrainv2 bizzbee$ mvn clean package -DskipTests

```

* 然后上傳服務器

```

wangyijiadeMacBook-Air:target bizzbee$ scp hadoop-train-v2-1.0-SNAPSHOT.jar bizzbee@192.168.31.249:~/work/

```

* 執行(參數分別是輸入輸出路徑)

```

[bizzbee@bizzbee ~]$ hadoop jar /home/bizzbee/work/hadoop-train-v2-1.0-SNAPSHOT.jar com.bizzbee.bigdata.hadoop.mr.project.mr.ETLApp hdfs://bizzbee:8020/project/input/raw/track.data hdfs://bizzbee:8020/project/output/result

```

*可以查看到執行成功了

```

http://192.168.31.249:50070/explorer.html#/project/output/result

```

* 然后把完成etl的數據加載到track_info表中。

```

hive> LOAD DATA INPATH 'hdfs://bizzbee:8020/project/output/result' OVERWRITE INTO TABLE track_info partition(day='2019-11-12');

```

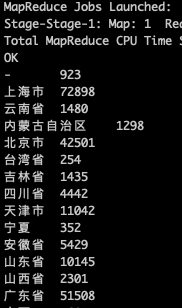

* 在hive中使用sql統計每個省的流量。

```

select province,count(*) as cnt from track_info where day='2019-11-12' group by province ;

```

* 創建每個沈的統計數據表。

```sql

create table track_info_province_stat(

province string,

cnt bigint

) partitioned by (day string)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

```

* 把之前查詢的結果作為數據導入省份表。

```sql

insert overwrite table track_info_province_stat partition(day='2019-11-12')

select province,count(*) as cnt from track_info where day='2019-11-12' group by province ;

```