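The kubelet runs on every Node. It keeps the node's Pods running and provides the Kubernetes runtime environment. Its main responsibilities are:

1. Watch the Pods assigned to this Node.
2. Mount the volumes that each Pod requires.
3. Download each Pod's secrets.
4. Run the Pod's containers through docker/rkt.
5. Periodically run the liveness probes defined for the Pod's containers.
6. Report Pod status to the other components of the system.
7. Report Node status.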

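### 1. Issue the certificate

The kubelet also exposes an HTTPS service, and the apiserver actively queries it for information about the node, so the kubelet needs its own server certificate. The certificate must list every node address the kubelet might run on; IP ranges are not allowed. To add nodes later, issue a new certificate that includes the new addresses. Existing nodes do not have to change right away: deploy the new certificate to the new nodes and restart the service there. At that point, however, two sets of certificates are in use.

For that reason, replace the certificate on the older nodes during a low-traffic period and then restart them.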

```
vi /opt/certs/kubelet-csr.json
{
    "CN": "k8s-kubelet",
    "hosts": [
        "127.0.0.1",
        "10.4.7.10",
        "10.4.7.21",
        "10.4.7.22",
        "10.4.7.23",
        "10.4.7.24",
        "10.4.7.25",
        "10.4.7.26",
        "10.4.7.27",
        "10.4.7.28"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}
```
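Because the `hosts` list has to name each address individually, it can help to generate it from a plain list of node IPs and refuse anything written as a range. The following is only a minimal sketch and not part of the original procedure: the function name `render_hosts` is hypothetical, and the check looks for CIDR notation only, so it does not validate the addresses themselves.

```shell
#!/bin/sh
# render_hosts IP...: print a JSON "hosts" array for kubelet-csr.json.
# Hypothetical helper; it rejects CIDR ranges such as 10.4.7.0/24,
# because every address must be listed one by one.
render_hosts() {
    # Refuse anything written as a range.
    for h in "$@"; do
        case "$h" in
            */*)
                echo "error: '$h' is a range; list each IP instead" >&2
                return 1
                ;;
        esac
    done
    # Emit one quoted entry per line; the last entry gets no comma.
    printf '"hosts": [\n'
    i=0
    for h in "$@"; do
        i=$((i + 1))
        if [ "$i" -lt "$#" ]; then
            printf '    "%s",\n' "$h"
        else
            printf '    "%s"\n' "$h"
        fi
    done
    printf ']\n'
}

render_hosts 127.0.0.1 10.4.7.10 10.4.7.21 10.4.7.22
```

The printed fragment can be pasted into the CSR file whenever a node is added, after which the certificate has to be issued again.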

#### Generate the certificate

```
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet
```

#### Copy the certificates to the three kubelet machines

```
# Repeat for each kubelet node.
scp kubelet.pem kubelet-key.pem HDSS7-21:/opt/kubernetes/server/bin/cert/
```

#### **set-cluster**

Note: run these commands from the conf directory.

```
cd /opt/kubernetes/server/bin/conf
kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
  --embed-certs=true \
  --server=https://10.4.7.10:7443 \
  --kubeconfig=kubelet.kubeconfig
```

Explanation:

- `--certificate-authority` points to the root CA certificate.
- `--embed-certs=true` embeds the certificate in the kubeconfig file.
- `--server` is the VIP.

The aim is to create a Kubernetes user for the kubelet. Kubernetes has a fairly complex user model with ordinary and special users; this one is an ordinary user. To talk to the apiserver it needs an access point, which is the address given by `--server`.

#### **set-credentials**

Create the user account, that is, the client private key and certificate the user logs in with. Multiple credentials can be created.

```
kubectl config set-credentials k8s-node \
  --client-certificate=/opt/kubernetes/server/bin/cert/client.pem \
  --client-key=/opt/kubernetes/server/bin/cert/client-key.pem \
  --embed-certs=true \
  --kubeconfig=kubelet.kubeconfig
```

Explanation: `--client-certificate` and `--client-key` bring in `client.pem` and `client-key.pem`. The kubelet is going to communicate with the apiserver. In that exchange the apiserver is the server and the kubelet is the client, so it has to present a client certificate.

#### **set-context**

Set the context, which ties the account to the cluster.

```
kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=k8s-node \
  --kubeconfig=kubelet.kubeconfig
```

Explanation: `--user=k8s-node` specifies the user `k8s-node`.

#### **use-context**

Select which context is currently in use.

```
kubectl config use-context myk8s-context --kubeconfig=kubelet.kubeconfig
```

Explanation: this switches to the context for `k8s-node`.

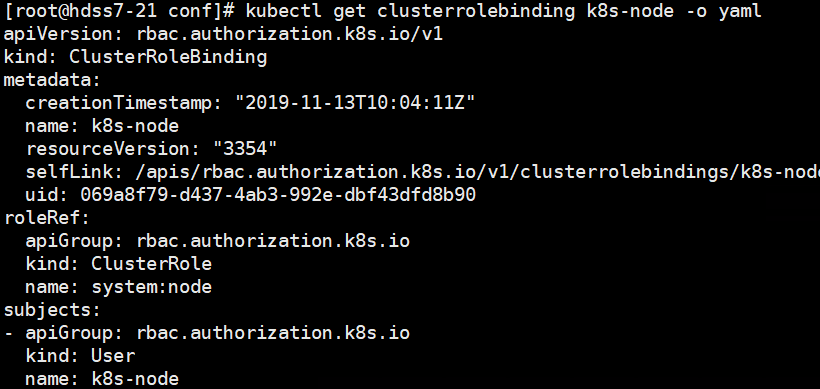
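After the four `kubectl config` commands, `kubelet.kubeconfig` should contain the cluster endpoint, the `k8s-node` user, and `myk8s-context` as the current context. `kubectl config view --kubeconfig=kubelet.kubeconfig` prints the file for inspection. As a rough sketch only, the grep-based check below looks for those three values; the function name `check_kubeconfig` is hypothetical, and the script neither parses YAML nor validates the embedded certificates.

```shell
#!/bin/sh
# check_kubeconfig FILE: grep-based sanity check (hypothetical helper).
# It only confirms that the names used in this walkthrough appear in FILE.
check_kubeconfig() {
    f=$1
    [ -r "$f" ] || { echo "cannot read $f" >&2; return 1; }
    grep -q 'server: https://10.4.7.10:7443' "$f" \
        || { echo "cluster endpoint not found" >&2; return 1; }
    grep -q 'user: k8s-node' "$f" \
        || { echo "context does not reference k8s-node" >&2; return 1; }
    grep -q '^current-context: myk8s-context' "$f" \
        || { echo "current-context is not myk8s-context" >&2; return 1; }
    echo "ok: $f"
}

# Only run the check when the file exists in the current directory.
if [ -f kubelet.kubeconfig ]; then
    check_kubeconfig kubelet.kubeconfig
fi
```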

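### 2. Authorize the k8s-node user

Grant the user named `k8s-node` a permission: use an RBAC rule to give it the rights it needs to act as a node in the cluster.

This step only needs to be run on one master node. Once the resource has been created it is stored in etcd, so it exists no matter which node the command was run on.

Bind the `k8s-node` user to the cluster role `system:node` so that it has the permissions of a worker node.

Run the following on hdss-73. It grants the `k8s-node` user created above the cluster-level permissions of a worker node: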

```
cd /opt/kubernetes/server/bin/conf
vi k8s-node.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node
```

Explanation: this creates a cluster role binding that gives the user named `k8s-node` (`kind: User`) the cluster role `system:node`.

An RBAC permission is itself a resource. Like other resources it has `apiVersion`, `kind`, and `metadata` (which holds the `name`), plus `roleRef` and `subjects`.

Create the resource from the YAML file and check the result:

```
kubectl create -f k8s-node.yaml
kubectl get clusterrolebinding k8s-node
kubectl get clusterrolebinding k8s-node -o yaml
```

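#### Copy kubelet.kubeconfig to the other two nodes

The `k8s-node.yaml` resource only needs to be created once, because it is stored in etcd automatically. The `kubelet.kubeconfig` file can simply be copied to the other nodes.

```
scp kubelet.kubeconfig HDSS7-22:/opt/kubernetes/server/bin/conf/
scp kubelet.kubeconfig HDSS7-23:/opt/kubernetes/server/bin/conf/
```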

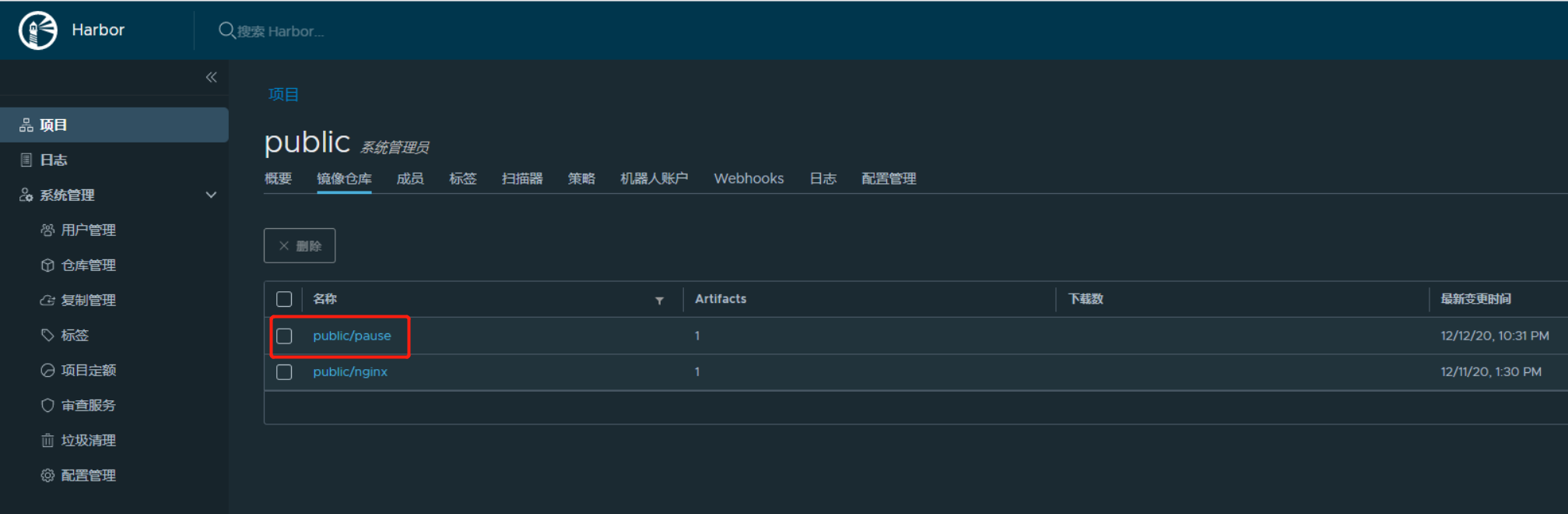

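### 3. Prepare the pause image

The kubelet needs a base image to help it start Pods. The kubelet receives the request and brings the Pod up; it does so by driving Docker, and the Docker engine is what actually starts the containers. Starting a Pod requires a base image that works in a sidecar fashion: it is a tiny image that the kubelet controls and starts before the business containers. It sets up the UTS, NET, and IPC namespaces for them and holds those namespaces, so the Pod IP has already been allocated before the business containers come up.

**Push the pause image to the private Harbor registry:**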

```
docker pull kubernetes/pause
docker tag f9d5de079539 harbor.od.com/public/pause:latest
docker push harbor.od.com/public/pause:latest
```

### 4. Create the kubelet startup script

Create the script on the node and start the kubelet.

```
vi /opt/kubernetes/server/bin/kubelet.sh
#!/bin/sh
./kubelet \
  --anonymous-auth=false \
  --cgroup-driver systemd \
  --cluster-dns 192.168.0.2 \
  --cluster-domain cluster.local \
  --runtime-cgroups=/systemd/system.slice \
  --kubelet-cgroups=/systemd/system.slice \
  --fail-swap-on="false" \
  --client-ca-file ./cert/ca.pem \
  --tls-cert-file ./cert/kubelet.pem \
  --tls-private-key-file ./cert/kubelet-key.pem \
  --hostname-override hdss7-21.host.com \
  --image-gc-high-threshold 20 \
  --image-gc-low-threshold 10 \
  --kubeconfig ./conf/kubelet.kubeconfig \
  --log-dir /data/logs/kubernetes/kube-kubelet \
  --pod-infra-container-image harbor.od.com/public/pause:latest \
  --root-dir /data/kubelet
```
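The script above hard-codes `--hostname-override hdss7-21.host.com`. Assuming each node should register under its own host name, as the later `kubectl get node` output suggests, the value has to change when the script is reused on another node. The filter below is one possible way to do that; the function name `set_hostname_override` is hypothetical and the approach is a sketch, not the original author's method.

```shell
#!/bin/sh
# set_hostname_override NAME: read a kubelet.sh on stdin and write it to
# stdout with the value after --hostname-override replaced by NAME.
# All other lines pass through unchanged. Hypothetical helper.
set_hostname_override() {
    sed "s|--hostname-override [^ ]*|--hostname-override $1|"
}

# Example usage on hdss7-22 (not executed here):
#   set_hostname_override hdss7-22.host.com < kubelet.sh > kubelet.sh.new
```

Writing to a new file first makes it easy to compare the two versions before replacing the original.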

#### Create the directory and set permissions

```
chmod u+x /opt/kubernetes/server/bin/kubelet.sh
mkdir -p /data/logs/kubernetes/kube-kubelet
```

#### Create the configuration file

```
vi /etc/supervisord.d/kube-kubelet.ini
[program:kube-kubelet]
command=/opt/kubernetes/server/bin/kubelet.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
```

#### Update the configuration and check that the service started

```
supervisorctl update
supervisorctl status
```
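With `startsecs=30` in the configuration above, supervisor reports the program as `STARTING` for the first 30 seconds and only then as `RUNNING`, so a single `supervisorctl status` right after `update` can be misleading. As a small sketch, the filter below reads `supervisorctl status` output and succeeds only when the named program is `RUNNING`; the function name `is_running` is hypothetical.

```shell
#!/bin/sh
# is_running NAME: read `supervisorctl status` output on stdin and
# succeed only if the program NAME is in the RUNNING state.
# Hypothetical helper; it compares the first two columns of each line.
is_running() {
    awk -v name="$1" '
        $1 == name && $2 == "RUNNING" { found = 1 }
        END { exit !found }
    '
}

# Example usage (not executed here):
#   supervisorctl status | is_running kube-kubelet && echo "kubelet is up"
```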

#### Check that the nodes have joined the cluster:

```
[root@hdss7-21 etcd]# kubectl get node
NAME                STATUS   ROLES    AGE   VERSION
hdss7-21.host.com   Ready    <none>   29h   v1.15.4
hdss7-22.host.com   Ready    <none>   29h   v1.15.4
hdss7-23.host.com   Ready    <none>   28h   v1.15.4
```
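When there are more than a few nodes, scanning the table by eye becomes error-prone. The filter below is a minimal sketch that reads `kubectl get node` output and prints the names of nodes whose STATUS column is anything other than exactly `Ready`; the function name `not_ready_nodes` is hypothetical. Note that a cordoned node, shown as `Ready,SchedulingDisabled`, is also reported by this simple comparison.

```shell
#!/bin/sh
# not_ready_nodes: read `kubectl get node` output on stdin and print the
# NAME of every node whose STATUS is not exactly "Ready".
# The first line is the column header and is skipped. Hypothetical helper.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example usage (not executed here):
#   kubectl get node | not_ready_nodes
```

An empty result means every listed node reports `Ready`.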

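### 5. Set node roles (labels only, cosmetic)

Labels can be used to filter nodes. In production, for example, the control-plane nodes and the worker nodes may be deployed on physically separate machines. The control-plane nodes run the apiserver, with controller-manager and kube-scheduler hosted in Kubernetes as container images, and they also have the kubelet installed, but business Pods should not run on them. This is where label selectors come in: put a taint on the control-plane nodes so that other Pods, which do not tolerate it, stay off them.

```
[root@hdss7-21 etcd]# kubectl get node
NAME                STATUS   ROLES    AGE   VERSION
hdss7-21.host.com   Ready    <none>   29h   v1.15.4
hdss7-22.host.com   Ready    <none>   29h   v1.15.4
hdss7-23.host.com   Ready    <none>   28h   v1.15.4

# The ROLES column returned by kubectl get nodes is empty; set it with labels:
kubectl label node hdss7-21.host.com node-role.kubernetes.io/master=
kubectl label node hdss7-22.host.com node-role.kubernetes.io/master=
kubectl label node hdss7-23.host.com node-role.kubernetes.io/node=

[root@hdss7-21 etcd]# kubectl get node
NAME                STATUS   ROLES    AGE   VERSION
hdss7-21.host.com   Ready    master   29h   v1.15.4
hdss7-22.host.com   Ready    master   29h   v1.15.4
hdss7-23.host.com   Ready    node     28h   v1.15.4
```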
