## Deploying kube-proxy (connecting the Pod network and the cluster network)

### 1. Issue the certificate

```
vi /opt/certs/kube-proxy-csr.json

{
    "CN": "system:kube-proxy",
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}
```

#### Generate the certificate

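```
cd /opt/certs
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json | cfssl-json -bare kube-proxy-client
```

`-profile=client`: kube-proxy gets its own client certificate. The client certificate issued earlier and used by kubelet cannot be reused here, because the CN is different (`"CN": "system:kube-proxy"`). Kubernetes takes the user name from the CN, and the default RBAC binding `system:node-proxier` grants kube-proxy's permissions to the user `system:kube-proxy`.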
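A quick way to confirm that the signed certificate carries the CN that RBAC expects is to read it back. The helper below is a sketch that assumes the `openssl` CLI is installed on the signing host; the function name `cert_cn` is made up for illustration.

```shell
# Print the Common Name (CN) of a PEM certificate.
# Assumption: the openssl CLI is installed; cert_cn is a hypothetical helper name.
cert_cn() {
  local cert="$1"
  # -nameopt multiline prints one "field = value" pair per line, e.g.
  #     commonName                = system:kube-proxy
  openssl x509 -in "$cert" -noout -subject -nameopt multiline \
    | awk -F' = ' '/commonName/ { print $2 }'
}

# Usage on the signing host:
#   cd /opt/certs && cert_cn kube-proxy-client.pem
```

Run against `kube-proxy-client.pem`, it should print `system:kube-proxy`; any other value suggests the wrong CSR file was signed.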

#### Distribute the certificate

```
scp kube-proxy-client-key.pem kube-proxy-client.pem hdss7-21:/opt/kubernetes/server/bin/cert/
```

### 2. Create the kube-proxy configuration

Create it once on one node, then reuse it on every node.

```
cd /opt/kubernetes/server/bin/conf/    # Note: these commands must be run inside conf/

kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
  --embed-certs=true \
  --server=https://10.4.7.10:7443 \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/kubernetes/server/bin/cert/kube-proxy-client.pem \
  --client-key=/opt/kubernetes/server/bin/cert/kube-proxy-client-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig
```
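Because every command above passes `--embed-certs=true`, the certificate contents are written into `kube-proxy.kubeconfig` itself, which is what lets the single file be copied to the other nodes. A sketch of a sanity check before copying, assuming the standard kubeconfig field names `certificate-authority-data`, `client-certificate-data` and `client-key-data`; the helper name is hypothetical.

```shell
# Check that a kubeconfig embeds its certificates (the effect of --embed-certs=true),
# so it does not depend on files under /opt/kubernetes/server/bin/cert/.
# kubeconfig_is_self_contained is a hypothetical helper name.
kubeconfig_is_self_contained() {
  local cfg="$1" key
  for key in certificate-authority-data client-certificate-data client-key-data; do
    if ! grep -q "^[[:space:]]*${key}:" "$cfg"; then
      echo "missing ${key} in ${cfg}" >&2
      return 1
    fi
  done
}

# Usage (inside /opt/kubernetes/server/bin/conf/):
#   kubeconfig_is_self_contained kube-proxy.kubeconfig && echo "ok to copy"
```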

#### Once created, copy it to the other two nodes

```
scp kube-proxy.kubeconfig hdss7-22:/opt/kubernetes/server/bin/conf/
scp kube-proxy.kubeconfig hdss7-23:/opt/kubernetes/server/bin/conf/
```
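With more nodes, the copies can be driven by one loop. This is a sketch assuming passwordless SSH from this node to each target; the helper name is hypothetical, and setting `SCP=echo` turns it into a dry run that only prints what would be copied.

```shell
# Copy a kubeconfig into the conf/ directory of several nodes.
# Assumptions: passwordless SSH to every host; distribute_kubeconfig is a hypothetical name.
# Set SCP=echo to print the scp arguments instead of copying.
distribute_kubeconfig() {
  local file="$1"; shift
  local dest_dir="/opt/kubernetes/server/bin/conf/"
  local host
  for host in "$@"; do
    ${SCP:-scp} "$file" "${host}:${dest_dir}" || return 1
  done
}
```

For example, `distribute_kubeconfig kube-proxy.kubeconfig hdss7-22 hdss7-23` performs the same two copies as above and stops at the first failed host.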

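### 3. Load the ipvs kernel modules so kube-proxy can use ipvs scheduling (check first; if this was not done during the preparation steps, do it here)

kube-proxy has three traffic-proxy modes: userspace (lots of switching between user space and kernel space, which wastes resources), iptables (the standard mode, but rules are matched one by one and there is no choice of scheduling algorithm), and ipvs. Of the three, ipvs performs best. The script below loads the ipvs kernel modules.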

```
[root@hdss7-21 ~]# lsmod | grep ip_vs    # check the ipvs modules; empty output means they are not loaded
[root@hdss7-21 ~]# vi ipvs.sh
#!/bin/bash
# Load every ipvs module shipped with the running kernel
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for i in $(ls "$ipvs_mods_dir" | grep -o "^[^.]*")
do
    /sbin/modinfo -F filename "$i" &>/dev/null
    if [ $? -eq 0 ]; then
        /sbin/modprobe "$i"
    fi
done
[root@hdss7-21 ~]# chmod a+x ipvs.sh
[root@hdss7-21 ~]# ./ipvs.sh
[root@hdss7-21 ~]# lsmod | grep ip_vs    # check again (each scheduling algorithm is a separate module)

ip_vs_ftp 13079 0
nf_nat 26583 3 ip_vs_ftp,nf_nat_ipv4,nf_nat_masquerade_ipv4
ip_vs_sed 12519 0
ip_vs_nq 12516 0
ip_vs_sh 12688 0
ip_vs_dh 12688 0
ip_vs_lblcr 12922 0
ip_vs_lblc 12819 0
ip_vs_wrr 12697 0
ip_vs_rr 12600 0
ip_vs_wlc 12519 0
ip_vs_lc 12516 0
ip_vs 145458 22 ip_vs_dh,ip_vs_lc,ip_vs_nq,ip_vs_rr,ip_vs_sh,ip_vs_ftp,ip_vs_sed,ip_vs_wlc,ip_vs_wrr,ip_vs_lblcr,ip_vs_lblc
nf_conntrack 139264 7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4
libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrac

```

Explanation:

Commonly used:

- `ip_vs_rr` (rr): Round-Robin Scheduling
- `ip_vs_wrr` (wrr): Weighted Round-Robin Scheduling
- `ip_vs_lc` (lc): Least-Connection Scheduling
- `ip_vs_wlc` (wlc): Weighted Least-Connection Scheduling
- `ip_vs_sed` (sed): Shortest Expected Delay Scheduling
- `ip_vs_nq` (nq): Never Queue Scheduling

Rarely used, mainly for special cases such as CDN/cache clusters serving purely static content:

- `ip_vs_lblc` (lblc): Locality-Based Least Connections Scheduling
- `ip_vs_lblcr` (lblcr): Locality-Based Least Connections with Replication Scheduling
- `ip_vs_dh` (dh): Destination Hashing Scheduling
- `ip_vs_sh` (sh): Source Hashing Scheduling

rr, wrr, dh and sh are static algorithms (they ignore the backends' current load); lc, wlc, sed, nq, lblc and lblcr are dynamic (they take the number of active connections into account).
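To make the difference between `sed` and `nq` concrete (`nq` is the scheduler chosen in the startup script with `--ipvs-scheduler=nq`), here is a toy model of the two selection rules in shell and awk. It only illustrates the rules and is not how the kernel's ip_vs modules are implemented. Each backend is an input line `name active_connections weight`; `sed` picks the smallest (active + 1) / weight, and `nq` picks a backend with no active connections if one exists, otherwise falls back to `sed`. `pick_backend` is a hypothetical name.

```shell
# Toy model of the LVS "sed" and "nq" selection rules (illustration only).
# stdin: one backend per line as "name active_connections weight".
# pick_backend is a hypothetical helper name.
pick_backend() {
  local algo="$1"
  awk -v algo="$algo" '
    $3 > 0 {                                   # weight 0 means "do not use"
      if (algo == "nq" && $2 == 0 && idle == "") idle = $1   # first idle backend
      cost = ($2 + 1) / $3                     # expected delay for the next connection
      if (best == "" || cost < best_cost) { best = $1; best_cost = cost }
    }
    END {
      if (algo == "nq" && idle != "") print idle
      else print best
    }'
}
```

With the input `a 3 1`, `b 0 1`, `c 1 4`, `sed` chooses `c` (cost 2/4 = 0.5, thanks to its weight of 4), while `nq` chooses `b` because it has no active connections and therefore never makes a request wait.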

### 4. Create the startup script

```
vi /opt/kubernetes/server/bin/kube-proxy.sh

#!/bin/sh
# Change --hostname-override to the local node's own hostname on each node
./kube-proxy \
  --cluster-cidr 172.7.0.0/16 \
  --hostname-override hdss7-21.host.com \
  --proxy-mode=ipvs \
  --ipvs-scheduler=nq \
  --kubeconfig ./conf/kube-proxy.kubeconfig
```
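Since the script starts kube-proxy with `--proxy-mode=ipvs --ipvs-scheduler=nq`, it is worth confirming that `ip_vs_nq` is among the modules loaded in step 3 before starting it. A sketch that reads `lsmod`-style output on stdin; the helper name is hypothetical.

```shell
# Return 0 if the ipvs scheduler module ip_vs_<name> appears in lsmod output on stdin.
# Only the first column (the module name) is compared, so "ip_vs" alone does not count.
# ipvs_scheduler_loaded is a hypothetical helper name.
ipvs_scheduler_loaded() {
  local sched="$1"
  awk -v mod="ip_vs_${sched}" '$1 == mod { found = 1 } END { exit !found }'
}

# Usage on a node:
#   lsmod | ipvs_scheduler_loaded nq && echo "ip_vs_nq is loaded"
```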

#### Make the script executable and create the log directory

```
chmod +x /opt/kubernetes/server/bin/kube-proxy.sh
mkdir -p /data/logs/kubernetes/kube-proxy
```
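Because the same script is reused on every node and only `--hostname-override` changes, it can also be generated per node. A sketch that prints the script for a given hostname, with the flags copied from the script above; the helper name is hypothetical, and `hdss7-22.host.com` in the usage comment assumes the other nodes follow the same naming pattern as `hdss7-21.host.com`.

```shell
# Print kube-proxy.sh for one node; only --hostname-override differs between nodes.
# render_kube_proxy_sh is a hypothetical helper name.
render_kube_proxy_sh() {
  local host="$1"
  # Unquoted heredoc: ${host} is expanded, and each "\\" becomes a literal "\".
  cat <<EOF
#!/bin/sh
./kube-proxy \\
  --cluster-cidr 172.7.0.0/16 \\
  --hostname-override ${host} \\
  --proxy-mode=ipvs \\
  --ipvs-scheduler=nq \\
  --kubeconfig ./conf/kube-proxy.kubeconfig
EOF
}

# Usage on hdss7-22 (assumed hostname pattern):
#   render_kube_proxy_sh hdss7-22.host.com > /opt/kubernetes/server/bin/kube-proxy.sh
#   chmod +x /opt/kubernetes/server/bin/kube-proxy.sh
```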

#### Create the supervisord program config

```
vi /etc/supervisord.d/kube-proxy.ini

[program:kube-proxy]
command=/opt/kubernetes/server/bin/kube-proxy.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
```

#### Load the new config and check that it started

```
supervisorctl update
supervisorctl status
```
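With `startsecs=30`, supervisord reports the program as `STARTING` for the first 30 seconds and only then as `RUNNING`, so check the status again after that. A sketch of a scripted check over the `supervisorctl status` output, assuming the program name `kube-proxy` from the `[program:kube-proxy]` section; the helper name is hypothetical.

```shell
# Return 0 if the named program is RUNNING in `supervisorctl status` output on stdin.
# Column 1 is the program name, column 2 its state (STARTING, RUNNING, BACKOFF, ...).
# supervisor_program_running is a hypothetical helper name.
supervisor_program_running() {
  local name="$1"
  awk -v p="$name" '$1 == p && $2 == "RUNNING" { ok = 1 } END { exit !ok }'
}

# Usage:
#   sleep 30; supervisorctl status | supervisor_program_running kube-proxy && echo "kube-proxy is up"
```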

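### 5. Create a resource manifest that runs nginx under a DaemonSet pod controller

```
vim nginx-ds.yaml

apiVersion: apps/v1        # extensions/v1beta1 no longer serves DaemonSet from Kubernetes 1.16
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  selector:                # required by apps/v1; must match the template labels
    matchLabels:
      app: nginx-ds
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:v1.7.9
        ports:
        - containerPort: 80
```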

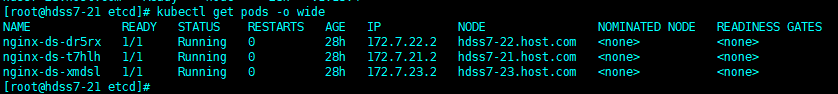

#### Create and check

```
kubectl create -f nginx-ds.yaml
kubectl get pods -o wide
```
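A DaemonSet should place exactly one pod on each node, so the second command should show one `nginx-ds` pod per node. A sketch of an automated check, assuming the pods can be selected with the label `app=nginx-ds` from the manifest and using `kubectl`'s `custom-columns` output; the helper name is hypothetical. It cannot notice a node that has no pod at all, so compare the result with `kubectl get nodes`.

```shell
# Read "NODE PHASE" lines on stdin and return 0 only if every listed node has
# exactly one pod and every pod is Running. Feed it with, for example:
#   kubectl get pods -l app=nginx-ds --no-headers \
#     -o custom-columns=NODE:.spec.nodeName,PHASE:.status.phase
# daemonset_one_running_per_node is a hypothetical helper name.
daemonset_one_running_per_node() {
  awk '
    { count[$1]++; if ($2 != "Running") bad = 1 }
    END {
      if (NR == 0) exit 1                       # no pods at all
      for (n in count) if (count[n] != 1) bad = 1
      exit (bad ? 1 : 0)
    }'
}
```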
