[TOC]

### Article links:

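[Android OkHttp Source Code Analysis for Beginners: Synchronous and Asynchronous Requests (Part 1)](https://juejin.im/post/5c46822c6fb9a049ea394510)

[Android OkHttp Source Code Analysis for Beginners: Interceptors and the Chain of Responsibility (Part 2)](https://juejin.im/post/5c4682d2f265da6130752a1d)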

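### Preface

The previous article covered basic OkHttp usage and walked through the source of synchronous and asynchronous requests, so you should have a rough picture of the internal flow. It also mentioned getResponseWithInterceptorChain and its chain of responsibility. This article goes inside OkHttp's five built-in interceptors and the chain-of-responsibility pattern that ties them together.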

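### Main text

First, what are OkHttp interceptors for? The official documentation puts it this way:

> Interceptors are a powerful mechanism that can monitor, rewrite, and retry calls

How should we read that? Picture a scholar traveling to the capital for the imperial exam with money in his bag. He is stopped by five gangs of bandits along the way, and oddly each gang is after something different. The scholar is a network request in flight, and the bandits are the interceptors: each one takes what the request carries, modifies, inspects, or validates it, and then lets it pass. In effect this is [AOP (aspect-oriented programming)](https://baike.baidu.com/item/AOP/1332219?fr=aladdin), which anyone who has used the Spring framework will recognize.

Now consider the official interceptor diagram.

> There are two kinds of user-supplied interceptors: application interceptors and network interceptors. This article concentrates on the OkHttp core, that is, the five built-in interceptors.

So which interceptors does OkHttp ship with, and what does each one do?

Picking up where the previous article left off, we go into the source of getResponseWithInterceptorChain() to see how these interceptors do their work.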

~~~

Response getResponseWithInterceptorChain() throws IOException {
  // Build a full stack of interceptors.
  // This list backs the chain of responsibility.
  List<Interceptor> interceptors = new ArrayList<>();
  // User-defined application interceptors
  interceptors.addAll(client.interceptors());
  // RetryAndFollowUpInterceptor: failure recovery, retries, and redirects
  interceptors.add(retryAndFollowUpInterceptor);
  // BridgeInterceptor: fills in required headers the user's request lacks and handles gzip
  interceptors.add(new BridgeInterceptor(client.cookieJar()));
  // CacheInterceptor: cache handling
  interceptors.add(new CacheInterceptor(client.internalCache()));
  // ConnectInterceptor: establishes the connection to the server
  interceptors.add(new ConnectInterceptor(client));
  if (!forWebSocket) {
    // User-defined network interceptors
    interceptors.addAll(client.networkInterceptors());
  }
  // CallServerInterceptor: sends the request to the server and reads the response
  interceptors.add(new CallServerInterceptor(forWebSocket));

  // Pass the list and the related arguments to the RealInterceptorChain constructor to create the chain
  Interceptor.Chain chain = new RealInterceptorChain(interceptors, null, null, null, 0,
      originalRequest, this, eventListener, client.connectTimeoutMillis(),
      client.readTimeoutMillis(), client.writeTimeoutMillis());

  // Run the chain
  return chain.proceed(originalRequest);
}

~~~

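As the code shows, getResponseWithInterceptorChain first collects a series of interceptors into a single List<Interceptor>, each responsible for a different part of the work, and then passes that list to the RealInterceptorChain constructor to create the interceptor chain. The [chain-of-responsibility](https://blog.csdn.net/u012810020/article/details/71194853) pattern is what lets a request travel through these interceptors one after another. Loosely speaking, code of a similar shape shows up all the time in everyday development: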

~~~

@Override
protected void onActivityResult(int requestCode, int resultCode, Intent data) {
    super.onActivityResult(requestCode, resultCode, data);
    if (resultCode == RESULT_OK) {
        switch (requestCode) {
            case 1:
                System.out.println("I am the first interceptor: " + requestCode);
                break;
            case 2:
                System.out.println("I am the second interceptor: " + requestCode);
                break;
            case 3:
                System.out.println("I am the third interceptor: " + requestCode);
                break;
            default:
                break;
        }
    }
}

~~~

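This snippet should give you a feel for the idea: different work is handled depending on requestCode, and whatever a handler does not own falls through to the next one. (If that still doesn't click, you may not be a real Android developer.) Bear in mind that this is a heavily simplified illustration and does not match the formal definition of the pattern: a switch statement only dispatches, whereas in a real chain each handler decides whether to pass the request on. It is meant only to convey the flow; to apply the pattern in practice, read the relevant documentation.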

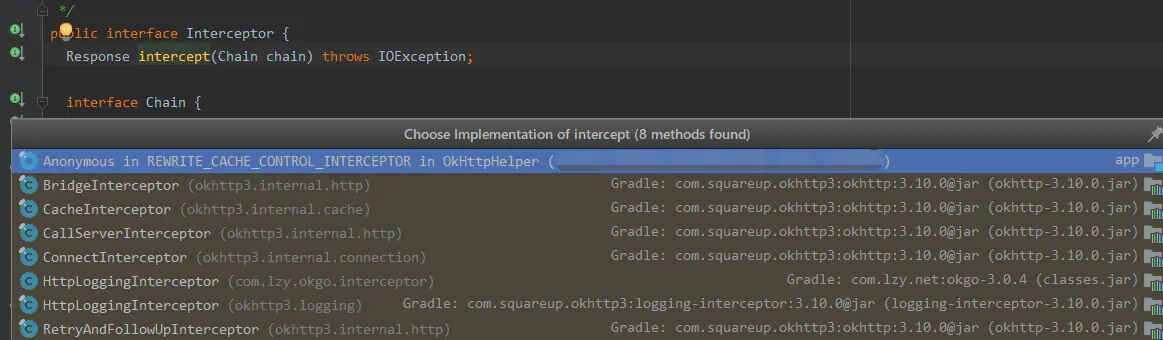

Back to the main topic. As noted above, getResponseWithInterceptorChain ends by calling RealInterceptorChain's proceed method, so we continue from that source:

~~~

public interface Interceptor {

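// Each interceptor uses the Chain to trigger the next interceptor; the last one does not call proceed()

// As the calls return, each interceptor can post-process the response before handing it back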

Response intercept(Chain chain) throws IOException;

interface Chain {

// Returns the request

Request request();

// Hands the request to the next interceptor and returns its response

Response proceed(Request request) throws IOException;

}

}

public final class RealInterceptorChain implements Interceptor.Chain {

private final List<Interceptor> interceptors;

private final StreamAllocation streamAllocation;

private final HttpCodec httpCodec;

private final RealConnection connection;

private final int index;

private final Request request;

private final Call call;

private final EventListener eventListener;

private final int connectTimeout;

private final int readTimeout;

private final int writeTimeout;

private int calls;

public RealInterceptorChain(List<Interceptor> interceptors, StreamAllocation streamAllocation,

HttpCodec httpCodec, RealConnection connection, int index, Request request, Call call,

EventListener eventListener, int connectTimeout, int readTimeout, int writeTimeout) {

this.interceptors = interceptors;

this.connection = connection;

this.streamAllocation = streamAllocation;

this.httpCodec = httpCodec;

this.index = index;

this.request = request;

this.call = call;

this.eventListener = eventListener;

this.connectTimeout = connectTimeout;

this.readTimeout = readTimeout;

this.writeTimeout = writeTimeout;

}

@Override public Response proceed(Request request) throws IOException {

return proceed(request, streamAllocation, httpCodec, connection);

}

}

~~~

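The code above shows that Interceptor is an interface and that RealInterceptorChain implements the nested Interceptor.Chain interface. The fields are the arguments we passed in when the chain was created, and the call we actually make is RealInterceptorChain's proceed method. Reading on: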

~~~

public Response proceed(Request request, StreamAllocation streamAllocation, HttpCodec httpCodec,
    RealConnection connection) throws IOException {
  if (index >= interceptors.size()) throw new AssertionError();

  calls++;

  // Some validation logic is omitted here; only the core code remains.

  // Call the next interceptor in the chain.
  // Create the chain for the next step. index + 1 means that chain can only continue from the
  // next interceptor, never from the current one again. Every chain shares the same list and
  // differs only in its index, which keeps an interceptor from running twice.
  RealInterceptorChain next = new RealInterceptorChain(interceptors, streamAllocation, httpCodec,
      connection, index + 1, request, call, eventListener, connectTimeout, readTimeout,
      writeTimeout);
  // Get the current interceptor
  Interceptor interceptor = interceptors.get(index);
  // Run the current interceptor, handing it the chain for the next step
  Response response = interceptor.intercept(next);

  return response;
}

~~~

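This can be confusing at first. After a quick read, your questions are probably these:

1.`What is index+1 for?`

2.`How does the current interceptor get executed?`

3.`How are the interceptors in the chain called one after another?`

4.`Even if the three points above make sense, every interceptor returns something different, so how do I end up with a single final result?`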

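Start with the flow of the chain-of-responsibility pattern. Recall the scholar from earlier, traveling to the exam with his books, his silver, and his family. The first gang of bandits takes the books and lets him go, the second takes the silver and lets him go, and so on until nothing is left. The scholar is the initial RealInterceptorChain and the bandits are the Interceptors. index+1 creates a new chain object for the next step, so the sequence is: scholar (books, silver, family) → book bandits → scholar (silver, family) → silver bandits → scholar (family) → ... In other words, a new RealInterceptorChain is created at each step to run the next interceptor in interceptors, and the final result is returned once every interceptor in the list has done its part.

Look at `Interceptor interceptor = interceptors.get(index);`, which fetches the current interceptor. Because index increases by one at each step, the calls are list.get(0), list.get(1), ... and every interceptor in interceptors is reached in turn. As mentioned, Interceptor is an interface, and each of OkHttp's built-in interceptors implements it to do its own job.

So each time `Interceptor interceptor = interceptors.get(index)` runs, interceptor.intercept(next) invokes that interceptor's intercept implementation, and it receives the newly created chain as its argument. That is what keeps any interceptor from running twice. The response then travels back through the same interceptors in reverse order as each intercept call returns, which is why the caller receives one final Response.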
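The index+1 mechanism can be sketched without OkHttp at all. The following is a minimal model, assuming String requests and responses; `MiniChainDemo`, its nested `Interceptor` and `Chain` types, and the `+retry` / `<retry` markers are hypothetical stand-ins for illustration, not OkHttp classes.

```java
import java.util.ArrayList;
import java.util.List;

public class MiniChainDemo {
    // Hypothetical stand-in for okhttp3.Interceptor, reduced to String requests and responses.
    interface Interceptor {
        String intercept(Chain chain);
    }

    // Hypothetical stand-in for RealInterceptorChain: the shared list plus a cursor.
    static final class Chain {
        private final List<Interceptor> interceptors;
        private final int index;
        private final String request;

        Chain(List<Interceptor> interceptors, int index, String request) {
            this.interceptors = interceptors;
            this.index = index;
            this.request = request;
        }

        String request() {
            return request;
        }

        String proceed(String request) {
            if (index >= interceptors.size()) throw new AssertionError("no interceptor left");
            // The next chain shares the same list but starts one position later.
            Chain next = new Chain(interceptors, index + 1, request);
            return interceptors.get(index).intercept(next);
        }
    }

    static String run(String request) {
        List<Interceptor> interceptors = new ArrayList<>();
        // Each interceptor edits the request on the way in and the response on the way out.
        interceptors.add(chain -> chain.proceed(chain.request() + "+retry") + "<retry");
        interceptors.add(chain -> chain.proceed(chain.request() + "+bridge") + "<bridge");
        // The last interceptor produces the response and never calls proceed().
        interceptors.add(chain -> "response(" + chain.request() + ")");
        return new Chain(interceptors, 0, request).proceed(request);
    }

    public static void main(String[] args) {
        // Prints: response(GET /+retry+bridge)<bridge<retry
        System.out.println(run("GET /"));
    }
}
```

The output shows both directions of travel: the request picks up markers in list order, and the response picks them up in reverse order as the nested calls return.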

### **RetryAndFollowUpInterceptor** (retry and redirect interceptor)

Handles failures, retries, and redirects.

~~~

/**

* This interceptor recovers from failures and follows redirects as necessary. It may throw an

* {@link IOException} if the call was canceled.

*/

public final class RetryAndFollowUpInterceptor implements Interceptor {

/**

* How many redirects and auth challenges should we attempt? Chrome follows 21 redirects; Firefox,

* curl, and wget follow 20; Safari follows 16; and HTTP/1.0 recommends 5.

*/

// Maximum number of follow-ups (redirects and auth challenges)

private static final int MAX_FOLLOW_UPS = 20;

public RetryAndFollowUpInterceptor(OkHttpClient client, boolean forWebSocket) {

this.client = client;

this.forWebSocket = forWebSocket;

}

@Override public Response intercept(Chain chain) throws IOException {

Request request = chain.request();

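// Create the object an HTTP request needs; it is passed down the chain and first used in ConnectInterceptor.

// It is used to (1) obtain the Connection to the server and (2) obtain the streams that carry data to and from it.

// Arguments: 1. the shared connection pool, 2. the Address to connect to, 3. the call stack trace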

streamAllocation = new StreamAllocation(

client.connectionPool(), createAddress(request.url()), callStackTrace);

int followUpCount = 0;

Response priorResponse = null; // response from the previous attempt, if any

while (true) {

if (canceled) {

streamAllocation.release();

throw new IOException("Canceled");

}

Response response = null;

boolean releaseConnection = true;

try {

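// Run the next interceptor, which is BridgeInterceptor

// The StreamAllocation created above is passed along through proceed()

// The response returned here comes from the interceptors further down; on a follow-up, the earlier response is attached to it as priorResponse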

response = ((RealInterceptorChain) chain).proceed(request, streamAllocation, null, null);

releaseConnection = false;

} catch (RouteException e) {

// The attempt to connect via a route failed. The request will not have been sent.

// On failure, decide whether the call can be recovered

if (!recover(e.getLastConnectException(), false, request)) {

throw e.getLastConnectException();

}

releaseConnection = false;

continue;

} catch (IOException e) {

// An attempt to communicate with a server failed. The request may have been sent.

boolean requestSendStarted = !(e instanceof ConnectionShutdownException);

if (!recover(e, requestSendStarted, request)) throw e;

releaseConnection = false;

continue;

} finally {

// We're throwing an unchecked exception. Release any resources.

if (releaseConnection) {

streamAllocation.streamFailed(null);

streamAllocation.release();

}

}

// Attach the prior response if it exists. Such responses never have a body.

if (priorResponse != null) {

response = response.newBuilder()

.priorResponse(priorResponse.newBuilder()

.body(null)

.build())

.build();

}

// Work out whether the response needs a follow-up request (redirect, auth challenge, and so on)

Request followUp = followUpRequest(response);

if (followUp == null) {

if (!forWebSocket) {

streamAllocation.release();

}

// No follow-up needed: return the response

return response;

}

// A follow-up is needed: close the current response body

closeQuietly(response.body());

// Has the follow-up limit been exceeded?

if (++followUpCount > MAX_FOLLOW_UPS) {

streamAllocation.release();

throw new ProtocolException("Too many follow-up requests: " + followUpCount);

}

if (followUp.body() instanceof UnrepeatableRequestBody) {

streamAllocation.release();

throw new HttpRetryException("Cannot retry streamed HTTP body", response.code());

}

// Can the follow-up reuse the same connection?

if (!sameConnection(response, followUp.url())) {

streamAllocation.release();

streamAllocation = new StreamAllocation(

client.connectionPool(), createAddress(followUp.url()), callStackTrace);

} else if (streamAllocation.codec() != null) {

throw new IllegalStateException("Closing the body of " + response

+ " didn't close its backing stream. Bad interceptor?");

}

request = followUp;

priorResponse = response;

}

}

~~~

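Here we see response = ((RealInterceptorChain) chain).proceed(request, streamAllocation, null, null). proceed runs the next step of the chain that was passed in, which is how the interceptors execute one after another. RetryAndFollowUpInterceptor is mainly responsible for retrying failed requests, but note that `not every failed request can be retried`. The interceptor inspects the exception and the response code, and retries or follows up only when the conditions are met.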

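> [StreamAllocation](https://www.jianshu.com/p/e6fccf55ca01): creates the objects an HTTP request needs. It is mainly used to

`1. obtain the Connection to the server` `2. obtain the input and output streams used to exchange data with the server`

It is passed down the chain and first put to use in ConnectInterceptor. Its constructor takes

(1) the shared connection pool, (2) the Address to connect to, and (3) the call stack trace

In streamAllocation = new StreamAllocation( client.connectionPool(), createAddress(request.url()), callStackTrace); the call createAddress(request.url()) builds an Address from the URL: a description of how to reach the server (host and port, plus client settings such as DNS, proxy, and the TLS socket factory for HTTPS). The Socket itself is opened later. The state handling in intercept goes as follows: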

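1. Enter the while(true) loop. If the call has been canceled, release the streamAllocation and throw an exception.

2. Run the rest of the chain. If an exception is thrown, the catch blocks decide whether the request can be recovered and retried; if not, the exception is rethrown and resources are released.

3. If priorResponse is not null, attach it (without its body) to the current Response, so the returned Response carries the history of the earlier attempts.

4. Call followUpRequest to see whether the response needs a follow-up such as a redirect. If not, return the current response.

5. Increment followUpCount and check it against the maximum. If the limit is exceeded, release the streamAllocation and throw an exception.

6. sameConnection checks whether the follow-up can use the same connection. If it cannot, release the StreamAllocation and create a new one.

7. Set request to the follow-up request, save the current Response in priorResponse, and continue the while loop.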

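To sum up, the main flow of RetryAndFollowUpInterceptor is:

1) Create the StreamAllocation object

2) Call RealInterceptorChain.proceed(...) to perform the network request

3) Decide from the exception or the response whether the request must be sent again

4) Return the final response to the previous interceptor in the chain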
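The follow-up loop can be modeled in a few lines. This is a rough sketch, assuming a fake in-memory server: `FollowUpDemo`, `fetch`, and the `redirect:` prefix are hypothetical names for illustration, and only the redirect path is modeled (no exception recovery or connection handling).

```java
import java.util.HashMap;
import java.util.Map;

public class FollowUpDemo {
    static final int MAX_FOLLOW_UPS = 20; // same limit as the constant quoted above

    // Hypothetical fake server: maps a URL either to "redirect:<target>" or to a final body.
    static String fetch(Map<String, String> server, String url) {
        String current = url;
        int followUpCount = 0;
        while (true) {
            String response = server.get(current);
            if (response == null) throw new IllegalStateException("nothing served at " + current);
            // Plays the role of followUpRequest(): null means no follow-up is needed.
            String followUp = response.startsWith("redirect:")
                    ? response.substring("redirect:".length())
                    : null;
            if (followUp == null) return response;
            if (++followUpCount > MAX_FOLLOW_UPS) {
                throw new IllegalStateException("Too many follow-up requests: " + followUpCount);
            }
            current = followUp;
        }
    }

    public static void main(String[] args) {
        Map<String, String> server = new HashMap<>();
        server.put("/a", "redirect:/b");
        server.put("/b", "redirect:/c");
        server.put("/c", "hello");
        System.out.println(fetch(server, "/a")); // prints: hello
    }
}
```

A redirect that points back at itself stops at the limit instead of looping forever, which is the purpose of the counter.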

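### **BridgeInterceptor** (bridge interceptor)

Sets the content encoding, adds headers, handles Keep-Alive, and converts between application-level and network-level requests and responses.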

~~~

/**

* Bridges from application code to network code. First it builds a network request from a user

* request. Then it proceeds to call the network. Finally it builds a user response from the network

* response.

*/

public final class BridgeInterceptor implements Interceptor {

private final CookieJar cookieJar;

public BridgeInterceptor(CookieJar cookieJar) {

this.cookieJar = cookieJar;

}

@Override

// Handles 1. cookies and 2. gzip compression

public Response intercept(Interceptor.Chain chain) throws IOException {

Request userRequest = chain.request();

Request.Builder requestBuilder = userRequest.newBuilder();

RequestBody body = userRequest.body();

if (body != null) {

MediaType contentType = body.contentType();

if (contentType != null) {

requestBuilder.header("Content-Type", contentType.toString());

}

long contentLength = body.contentLength();

if (contentLength != -1) {

requestBuilder.header("Content-Length", Long.toString(contentLength));

requestBuilder.removeHeader("Transfer-Encoding");

} else {

requestBuilder.header("Transfer-Encoding", "chunked");

requestBuilder.removeHeader("Content-Length");

}

}

if (userRequest.header("Host") == null) {

requestBuilder.header("Host", hostHeader(userRequest.url(), false));

}

if (userRequest.header("Connection") == null) {

requestBuilder.header("Connection", "Keep-Alive");

}

// If we add an "Accept-Encoding: gzip" header field we're responsible for also decompressing

// the transfer stream.

boolean transparentGzip = false;

if (userRequest.header("Accept-Encoding") == null && userRequest.header("Range") == null) {

transparentGzip = true;

requestBuilder.header("Accept-Encoding", "gzip");

}

// loadForRequest must not return null, or the isEmpty() call below throws a NullPointerException

List<Cookie> cookies = cookieJar.loadForRequest(userRequest.url());

if (!cookies.isEmpty()) {

// These cookies come from the cookieJar configured when the OkHttpClient was built

requestBuilder.header("Cookie", cookieHeader(cookies));

}

if (userRequest.header("User-Agent") == null) {

requestBuilder.header("User-Agent", Version.userAgent());

}

// Everything above prepares the request headers

Response networkResponse = chain.proceed(requestBuilder.build());

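// Everything below handles the headers of the response that came back

// Response headers: cookies are not parsed unless a custom CookieJar is configured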

HttpHeaders.receiveHeaders(cookieJar, userRequest.url(), networkResponse.headers());

Response.Builder responseBuilder = networkResponse.newBuilder()

.request(userRequest);

}

~~~

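As the code shows, everything BridgeInterceptor does before sending is to add the necessary headers to the plain Request we passed in: Content-Type, Content-Length, Transfer-Encoding, Host, Connection (Keep-Alive by default), Accept-Encoding, and User-Agent, plus Cookie when the CookieJar has cookies for the URL. The result is a Request that can go on the network. Next, look at HttpHeaders.receiveHeaders(cookieJar, userRequest.url(), networkResponse.headers()), a static method that processes the cookies in the headers of the server's response: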

~~~

public static void receiveHeaders(CookieJar cookieJar, HttpUrl url, Headers headers) {
  // No CookieJar configured: nothing to parse
  if (cookieJar == CookieJar.NO_COOKIES) return;

  // Parse every cookie in the response headers
  List<Cookie> cookies = Cookie.parseAll(url, headers);
  if (cookies.isEmpty()) return;

  // Hand the parsed cookies to the user-supplied CookieJar to store
  cookieJar.saveFromResponse(url, cookies);
}

~~~

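Once a custom CookieJar is configured, receiveHeaders parses the cookies from the response headers and passes them to the CookieJar's saveFromResponse to store. On later requests, loadForRequest supplies them for the Cookie header.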
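The save-then-load round trip can be illustrated with a small in-memory jar. This sketch is an assumption-laden model, not OkHttp's `CookieJar` interface: `MemoryCookieJarDemo` is a hypothetical class that keys cookies by a host String and stores them as plain `name=value` strings, ignoring expiry, path, and domain matching.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class MemoryCookieJarDemo {
    // Cookies are kept as plain "name=value" strings, keyed by host (a simplification).
    private final Map<String, List<String>> store = new HashMap<>();

    // Mirrors the role of saveFromResponse: remember what the server sent.
    void saveFromResponse(String host, List<String> cookies) {
        store.computeIfAbsent(host, h -> new ArrayList<>()).addAll(cookies);
    }

    // Mirrors the role of loadForRequest: returns an empty list, never null.
    List<String> loadForRequest(String host) {
        return store.getOrDefault(host, Collections.<String>emptyList());
    }

    // Builds the value of the Cookie request header from the loaded cookies.
    static String cookieHeader(List<String> cookies) {
        return String.join("; ", cookies);
    }

    public static void main(String[] args) {
        MemoryCookieJarDemo jar = new MemoryCookieJarDemo();
        jar.saveFromResponse("example.com", Arrays.asList("sid=abc", "theme=dark"));
        System.out.println(cookieHeader(jar.loadForRequest("example.com"))); // sid=abc; theme=dark
        System.out.println(jar.loadForRequest("other.org").isEmpty());       // true
    }
}
```

Returning an empty list for unknown hosts matters because the interceptor calls `isEmpty()` on the result without a null check.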

~~~

// With the headers handled, check whether the server returned a gzip-encoded body; if so, let Okio decompress it

if (transparentGzip

&& "gzip".equalsIgnoreCase(networkResponse.header("Content-Encoding"))

&& HttpHeaders.hasBody(networkResponse)) {

GzipSource responseBody = new GzipSource(networkResponse.body().source());

Headers strippedHeaders = networkResponse.headers().newBuilder()

.removeAll("Content-Encoding")

.removeAll("Content-Length")

.build();

responseBuilder.headers(strippedHeaders);

// Build a new response around the decompressed body

responseBuilder.body(new RealResponseBody(strippedHeaders, Okio.buffer(responseBody)));

}

return responseBuilder.build();

}

~~~

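1. transparentGzip is true when OkHttp itself added Accept-Encoding: gzip, which makes it responsible for decompressing

2. The response's Content-Encoding header must be gzip

3. The response must have a body

When all three hold, the Response body source is wrapped in a GzipSource to obtain the decompressed stream, the Content-Encoding and Content-Length headers are removed, and a new response is built and returned.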

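To sum up, the main flow of BridgeInterceptor is:

1) Turn the user's Request into one that can be sent over the network

2) Pass that Request down the chain to perform the network call

3) Turn the network Response into one the user can consume (decompressing gzip where needed)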
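The transparent gzip step can be reproduced with the JDK alone. This is a sketch under assumptions: `TransparentGzipDemo` and its methods are hypothetical, headers are a plain `Map`, and `java.util.zip` stands in for Okio's GzipSource. The has-body check is left out.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

public class TransparentGzipDemo {
    // Helper: compresses text the way a server sending Content-Encoding: gzip would.
    static byte[] gzip(String text) {
        try {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
                gz.write(text.getBytes(StandardCharsets.UTF_8));
            }
            return out.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Decompresses only when we asked for gzip ourselves and the server actually used it.
    static String decode(boolean transparentGzip, Map<String, String> headers, byte[] body) {
        boolean gzipped = "gzip".equalsIgnoreCase(headers.get("Content-Encoding"));
        if (!transparentGzip || !gzipped) {
            return new String(body, StandardCharsets.UTF_8);
        }
        // These headers described the compressed bytes, so they no longer apply.
        headers.remove("Content-Encoding");
        headers.remove("Content-Length");
        try (GZIPInputStream in = new GZIPInputStream(new ByteArrayInputStream(body))) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buffer = new byte[4096];
            int n;
            while ((n = in.read(buffer)) != -1) out.write(buffer, 0, n);
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Map<String, String> headers = new HashMap<>();
        headers.put("Content-Encoding", "gzip");
        System.out.println(decode(true, headers, gzip("hello gzip")));  // hello gzip
        System.out.println(headers.containsKey("Content-Encoding"));    // false
    }
}
```

When the caller sets Accept-Encoding itself, the flag is false and the body is handed back untouched, which matches the condition on transparentGzip in the excerpt.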

### **CacheInterceptor** (cache interceptor)

Handles caching.

~~~

/** Serves requests from the cache and writes responses to the cache. */

public final class CacheInterceptor implements Interceptor {

final InternalCache cache;

public CacheInterceptor(InternalCache cache) {

this.cache = cache;

}

@Override public Response intercept(Chain chain) throws IOException {

// Look up a cached response for this request

Response cacheCandidate = cache != null

? cache.get(chain.request())

: null;

long now = System.currentTimeMillis(); // current system time

// CacheStrategy decides whether to use the cache, the network, or both

// It reads the cache-related request headers and produces networkRequest and cacheResponse

CacheStrategy strategy = new CacheStrategy.Factory(now, chain.request(), cacheCandidate).get();

// The request to send on the network; null means the network is not used

Request networkRequest = strategy.networkRequest;

// The cached response chosen by CacheStrategy; null means the cache is not used

Response cacheResponse = strategy.cacheResponse;

if (cache != null) { // update the statistics according to the strategy: request count, network count, cache hit count

cache.trackResponse(strategy);

}

if (cacheCandidate != null && cacheResponse == null) {

// The strategy rejected the cached response, so cacheCandidate is unusable; close it

closeQuietly(cacheCandidate.body()); // The cache candidate wasn't applicable. Close it.

}

// If we're forbidden from using the network and the cache is insufficient, fail.

if (networkRequest == null && cacheResponse == null) {

return new Response.Builder()

.request(chain.request())

.protocol(Protocol.HTTP_1_1)

.code(504)

.message("Unsatisfiable Request (only-if-cached)")

.body(Util.EMPTY_RESPONSE)

.sentRequestAtMillis(-1L)

.receivedResponseAtMillis(System.currentTimeMillis())

.build();

}

// If we don't need the network, we're done.

// No network request, but a cached response exists: return it directly

if (networkRequest == null) {

return cacheResponse.newBuilder()

.cacheResponse(stripBody(cacheResponse))

.build();

}

~~~

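If you are not yet familiar with [HTTP caching](https://my.oschina.net/leejun2005/blog/369148), read up on it first; the walkthrough will be easier to follow. As the code above shows, CacheInterceptor manages the cache, which includes deciding whether the network is needed and updating cached entries. The flow is:

`1. Look up a cached Response for the Request (Chain is the interface nested in Interceptor; it exposes the request and passes it on)`

`2. Take the current timestamp and obtain the cache strategy from CacheStrategy.Factory`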

~~~

public final class CacheStrategy {

/** The request to send on the network, or null if this call doesn't use the network. */

public final @Nullable Request networkRequest;

/** The cached response to return or validate; or null if this call doesn't use a cache. */

public final @Nullable Response cacheResponse;

CacheStrategy(Request networkRequest, Response cacheResponse) {

this.networkRequest = networkRequest;

this.cacheResponse = cacheResponse;

}

public CacheStrategy get() {

CacheStrategy candidate = getCandidate();

if (candidate.networkRequest != null && request.cacheControl().onlyIfCached()) {

// We're forbidden from using the network and the cache is insufficient.

return new CacheStrategy(null, null);

}

return candidate;

}

/** Returns a strategy to use assuming the request can use the network. */

private CacheStrategy getCandidate() {

// Cache miss (null): no conditional cache headers are needed on the request

if (cacheResponse == null) {

// Nothing cached: go straight to the network

return new CacheStrategy(request, null);

}

// Drop the cached response if it's missing a required handshake.

// If the cached response lacks its TLS handshake, ignore it and go to the network

if (request.isHttps() && cacheResponse.handshake() == null) {

return new CacheStrategy(request, null);

}

// Check whether the response is cacheable at all (status code, expiry headers, no-store)

if (!isCacheable(cacheResponse, request)) {

return new CacheStrategy(request, null);

}

CacheControl requestCaching = request.cacheControl();

// The request says no-cache, or it already carries its own conditions (If-Modified-Since / If-None-Match)

if (requestCaching.noCache() || hasConditions(request)) {

// Go to the network

return new CacheStrategy(request, null);

}

CacheControl responseCaching = cacheResponse.cacheControl();

// If the cached response is marked immutable, skip the network

if (responseCaching.immutable()) {

return new CacheStrategy(null, cacheResponse);

}

long ageMillis = cacheResponseAge();

long freshMillis = computeFreshnessLifetime();

if (requestCaching.maxAgeSeconds() != -1) {

freshMillis = Math.min(freshMillis, SECONDS.toMillis(requestCaching.maxAgeSeconds()));

}

long minFreshMillis = 0;

if (requestCaching.minFreshSeconds() != -1) {

minFreshMillis = SECONDS.toMillis(requestCaching.minFreshSeconds());

}

long maxStaleMillis = 0;

if (!responseCaching.mustRevalidate() && requestCaching.maxStaleSeconds() != -1) {

maxStaleMillis = SECONDS.toMillis(requestCaching.maxStaleSeconds());

}

if (!responseCaching.noCache() && ageMillis + minFreshMillis < freshMillis + maxStaleMillis) {

Response.Builder builder = cacheResponse.newBuilder();

if (ageMillis + minFreshMillis >= freshMillis) {

builder.addHeader("Warning", "110 HttpURLConnection \"Response is stale\"");

}

long oneDayMillis = 24 * 60 * 60 * 1000L;

if (ageMillis > oneDayMillis && isFreshnessLifetimeHeuristic()) {

builder.addHeader("Warning", "113 HttpURLConnection \"Heuristic expiration\"");

}

return new CacheStrategy(null, builder.build());

}

Headers.Builder conditionalRequestHeaders = request.headers().newBuilder();

Internal.instance.addLenient(conditionalRequestHeaders, conditionName, conditionValue);

Request conditionalRequest = request.newBuilder()

.headers(conditionalRequestHeaders.build())

.build();

return new CacheStrategy(conditionalRequest, cacheResponse);

}

~~~

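> CacheStrategy holds two fields, networkRequest and cacheResponse. The getCandidate method of its nested Factory class works through the checks above to pick the strategy. A null networkRequest means the network is not used; a null cacheResponse means there is no usable cached response.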

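`3. Continuing from above: if cache is not null, call trackResponse to update the statistics according to the strategy (request count, network request count, cache hit count)`

`4. If the cached candidate is unusable, close it`

`5. If networkRequest and cacheResponse are both null, the network is forbidden and nothing usable is cached, so return 504 and the request fails`

`6. If networkRequest is null and a cached response exists, return it directly`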
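The two decisions described so far, the freshness test inside getCandidate and the way the interceptor reacts to the (networkRequest, cacheResponse) pair, can be condensed into a sketch. `CacheDecisionDemo` and its method names are hypothetical, and the real code has further conditions (for example, the response's no-cache directive) that are omitted here.

```java
public class CacheDecisionDemo {
    // Freshness test from getCandidate(): the cached response may be served while
    // age + minFresh < freshnessLifetime + maxStale (all values in milliseconds).
    static boolean canServeFromCache(long ageMillis, long minFreshMillis,
                                     long freshMillis, long maxStaleMillis) {
        return ageMillis + minFreshMillis < freshMillis + maxStaleMillis;
    }

    // Maps the (networkRequest, cacheResponse) pair to what CacheInterceptor does next.
    static String decide(boolean hasNetworkRequest, boolean hasCacheResponse) {
        if (!hasNetworkRequest && !hasCacheResponse) return "504 Unsatisfiable Request";
        if (!hasNetworkRequest) return "serve cached response";
        if (!hasCacheResponse) return "plain network request";
        return "conditional network request";
    }

    public static void main(String[] args) {
        // A 30-second-old response with a 60-second lifetime is still fresh.
        System.out.println(canServeFromCache(30_000, 0, 60_000, 0));       // true
        // At 90 seconds it is stale, unless the request tolerates 60 seconds of staleness.
        System.out.println(canServeFromCache(90_000, 0, 60_000, 0));       // false
        System.out.println(canServeFromCache(90_000, 0, 60_000, 60_000));  // true
        System.out.println(decide(false, false));                          // 504 Unsatisfiable Request
        System.out.println(decide(true, true));                            // conditional network request
    }
}
```

The fourth outcome, where both values are non-null, is the conditional GET handled in the second half of intercept.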

~~~

// Continued from above

Response networkResponse = null;

try {

// Run the next interceptor

networkResponse = chain.proceed(networkRequest);

} finally {

// If we're crashing on I/O or otherwise, don't leak the cache body.

if (networkResponse == null && cacheCandidate != null) {

closeQuietly(cacheCandidate.body());

}

}

// If we have a cache response too, then we're doing a conditional get.

if (cacheResponse != null) {

if (networkResponse.code() == HTTP_NOT_MODIFIED) {

Response response = cacheResponse.newBuilder()

.headers(combine(cacheResponse.headers(), networkResponse.headers()))

.sentRequestAtMillis(networkResponse.sentRequestAtMillis())

.receivedResponseAtMillis(networkResponse.receivedResponseAtMillis())

.cacheResponse(stripBody(cacheResponse))

.networkResponse(stripBody(networkResponse))

.build();

networkResponse.body().close();

// Update the cache after combining headers but before stripping the

// Content-Encoding header (as performed by initContentStream()).

cache.trackConditionalCacheHit();

cache.update(cacheResponse, response);

return response;

} else {

closeQuietly(cacheResponse.body());

}

}

Response response = networkResponse.newBuilder()

.cacheResponse(stripBody(cacheResponse))

.networkResponse(stripBody(networkResponse))

.build();

if (cache != null) {

if (HttpHeaders.hasBody(response) && CacheStrategy.isCacheable(response, networkRequest)) {

// Offer this request to the cache.

CacheRequest cacheRequest = cache.put(response);

return cacheWritingResponse(cacheRequest, response);

}

if (HttpMethod.invalidatesCache(networkRequest.method())) {

try {

cache.remove(networkRequest);

} catch (IOException ignored) {

// The cache cannot be written.

}

}

}

return response;

}

~~~

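The second half of the code does the following:

1) Runs the next interceptor, ConnectInterceptor

2) If proceed throws (for example, because there is no network), networkResponse is still null in the finally block and the body of the cache candidate is closed so it does not leak

3) If cacheResponse is not null and the network response code is 304, the cached response is returned with merged headers and the cache entry is updated; otherwise the cached body is closed

4) Otherwise the network response is used to build the Response

5) If the response has a body and is cacheable under the caching rules, it is written to the Cache for later use

6) If the request method invalidates the cache (such as POST, PUT, or DELETE), the entry is removed from the Cache

7) Returns the Response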
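Step 3, the conditional GET, is the least obvious of these. The sketch below models it with plain maps and strings; `NotModifiedDemo` is hypothetical, and its `combine` is a deliberate simplification in which the 304's headers simply override the cached ones. OkHttp's own combine applies additional per-header rules that are not reproduced here.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class NotModifiedDemo {
    static final int HTTP_NOT_MODIFIED = 304;

    // Simplified merge: start from the cached headers and let the 304's headers override them.
    static Map<String, String> combine(Map<String, String> cached, Map<String, String> network) {
        Map<String, String> merged = new LinkedHashMap<>(cached);
        merged.putAll(network);
        return merged;
    }

    // On 304 the server sent no body, so the caller gets the cached one.
    static String resolveBody(int networkCode, String cachedBody, String networkBody) {
        return networkCode == HTTP_NOT_MODIFIED ? cachedBody : networkBody;
    }

    public static void main(String[] args) {
        Map<String, String> cached = new LinkedHashMap<>();
        cached.put("ETag", "\"v1\"");
        cached.put("Date", "Mon, 01 Jan 2018 00:00:00 GMT");
        Map<String, String> network = new LinkedHashMap<>();
        network.put("Date", "Tue, 02 Jan 2018 00:00:00 GMT");

        System.out.println(combine(cached, network).get("Date"));  // Tue, 02 Jan 2018 00:00:00 GMT
        System.out.println(resolveBody(304, "cached body", ""));   // cached body
        System.out.println(resolveBody(200, "cached body", "new")); // new
    }
}
```

Refreshing the stored headers on a 304 is what lets the cached entry stay valid for another freshness period without downloading the body again.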

### **ConnectInterceptor**

Establishes the connection to the server.

~~~

/** Opens a connection to the target server and proceeds to the next interceptor. */

public final class ConnectInterceptor implements Interceptor {

public final OkHttpClient client;

public ConnectInterceptor(OkHttpClient client) {

this.client = client;

}

@Override public Response intercept(Chain chain) throws IOException {

RealInterceptorChain realChain = (RealInterceptorChain) chain;

Request request = realChain.request();

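// The object an HTTP request needs, created earlier in RetryAndFollowUpInterceptor

// It is used to (1) obtain the Connection to the server and (2) obtain the streams that carry data to and from it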

StreamAllocation streamAllocation = realChain.streamAllocation();

// We need the network to satisfy this request. Possibly for validating a conditional GET.

boolean doExtensiveHealthChecks = !request.method().equals("GET");

HttpCodec httpCodec = streamAllocation.newStream(client, chain, doExtensiveHealthChecks);

RealConnection connection = streamAllocation.connection();

return realChain.proceed(request, streamAllocation, httpCodec, connection);

}

}

~~~

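When we covered RetryAndFollowUpInterceptor, we saw that it creates the StreamAllocation but does not use it; the object just travels down the chain until ConnectInterceptor. From the code above, the main flow of ConnectInterceptor is:

1. ConnectInterceptor takes the StreamAllocation passed down the chain and calls streamAllocation.newStream.

2. It passes the resulting [RealConnection](https://blog.csdn.net/chunqiuwei/article/details/74936885), which performs the network I/O, and the HttpCodec, which is central to talking to the server, on to the interceptors that follow.

The intercept method of ConnectInterceptor is short. The important work happens in streamAllocation.newStream(…), which is where the connection is actually established.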

~~~

public HttpCodec newStream(

OkHttpClient client, Interceptor.Chain chain, boolean doExtensiveHealthChecks) {

int connectTimeout = chain.connectTimeoutMillis();

int readTimeout = chain.readTimeoutMillis();

int writeTimeout = chain.writeTimeoutMillis();

int pingIntervalMillis = client.pingIntervalMillis();

boolean connectionRetryEnabled = client.retryOnConnectionFailure();

try {

RealConnection resultConnection = findHealthyConnection(connectTimeout, readTimeout,

writeTimeout, pingIntervalMillis, connectionRetryEnabled, doExtensiveHealthChecks);

HttpCodec resultCodec = resultConnection.newCodec(client, chain, this);

synchronized (connectionPool) {

codec = resultCodec;

return resultCodec;

}

} catch (IOException e) {

throw new RouteException(e);

}

}

~~~

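The flow here is:

1. Call findHealthyConnection to obtain a RealConnection for the actual network connection (reuse one if possible, otherwise create one)

2. Use that RealConnection to create the HttpCodec, store it inside the synchronized block, and return it

Now look at findHealthyConnection: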

~~~

/**

* Finds a connection and returns it if it is healthy. If it is unhealthy the process is repeated

* until a healthy connection is found.

*/

private RealConnection findHealthyConnection(int connectTimeout, int readTimeout,

int writeTimeout, boolean connectionRetryEnabled, boolean doExtensiveHealthChecks)

throws IOException {

while (true) {

RealConnection candidate = findConnection(connectTimeout, readTimeout, writeTimeout,

connectionRetryEnabled);

// If this is a brand new connection, we can skip the extensive health checks.

synchronized (connectionPool) {

if (candidate.successCount == 0) {

return candidate;

}

}

// Do a (potentially slow) check to confirm that the pooled connection is still good. If it

// isn't, take it out of the pool and start again.

if (!candidate.isHealthy(doExtensiveHealthChecks)) {

noNewStreams();

continue;

}

return candidate;

}

}

~~~

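1. Enter the while(true){} loop, which keeps asking findConnection for a connection

2. Inside the synchronized block, if candidate.successCount == 0 (the connection is brand new and has not yet completed a request), return it without further checks

3. Otherwise, if the candidate is unhealthy, mark it so that it accepts no new streams and call findConnection again. A RealConnection counts as unhealthy in any of these cases:

* ①`the RealConnection's socket is closed`

* ②`the socket's input is shut down`

* ③`the socket's output is shut down`

* ④`for HTTP/2, the connection is shut down`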
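The loop can be modeled as follows. This is only a sketch: `HealthyConnectionDemo` and its `Conn` class are hypothetical, a queue of prepared candidates stands in for findConnection, and health is reduced to a single socketClosed flag.

```java
import java.util.ArrayDeque;
import java.util.Deque;

public class HealthyConnectionDemo {
    // Hypothetical connection model: successCount == 0 means it has never carried a request.
    static final class Conn {
        final String name;
        final int successCount;
        final boolean socketClosed;

        Conn(String name, int successCount, boolean socketClosed) {
            this.name = name;
            this.successCount = successCount;
            this.socketClosed = socketClosed;
        }

        boolean isHealthy() {
            return !socketClosed;
        }
    }

    // Keeps pulling candidates until one is brand new or passes the health check.
    static Conn findHealthy(Deque<Conn> candidates) {
        while (true) {
            Conn candidate = candidates.poll(); // stands in for findConnection()
            if (candidate == null) throw new IllegalStateException("no candidates left");
            if (candidate.successCount == 0) return candidate; // new: skip the check
            if (!candidate.isHealthy()) continue;              // stale: discard and try again
            return candidate;
        }
    }

    public static void main(String[] args) {
        Deque<Conn> candidates = new ArrayDeque<>();
        candidates.add(new Conn("stale-pooled", 3, true));
        candidates.add(new Conn("good-pooled", 2, false));
        System.out.println(findHealthy(candidates).name); // good-pooled
    }
}
```

In the real method the supply of candidates never runs dry, because findConnection creates a new connection when nothing reusable exists; the exception here exists only to keep the model finite.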

Next, see what findConnection does:

~~~

/**

* Returns a connection to host a new stream. This prefers the existing connection if it exists,

* then the pool, finally building a new connection.

*/

private RealConnection findConnection(int connectTimeout, int readTimeout, int writeTimeout,

boolean connectionRetryEnabled) throws IOException {

boolean foundPooledConnection = false;

RealConnection result = null;

Route selectedRoute = null;

Connection releasedConnection;

Socket toClose;

synchronized (connectionPool) {

if (released) throw new IllegalStateException("released");

if (codec != null) throw new IllegalStateException("codec != null");

if (canceled) throw new IOException("Canceled");

// Attempt to use an already-allocated connection. We need to be careful here because our

// already-allocated connection may have been restricted from creating new streams.

// Try to reuse the connection this allocation already holds

releasedConnection = this.connection;

toClose = releaseIfNoNewStreams();

// Is there an already-allocated connection to reuse?

if (this.connection != null) {

// We had an already-allocated connection and it's good.

result = this.connection;

releasedConnection = null;

}

if (!reportedAcquired) {

// If the connection was never reported acquired, don't report it as released!

releasedConnection = null;

}

// No already-allocated connection: try the connection pool

if (result == null) {

// Attempt to get a connection from the pool.

Internal.instance.get(connectionPool, address, this, null);

if (connection != null) {

foundPooledConnection = true;

result = connection;

} else {

selectedRoute = route;

}

}

}

closeQuietly(toClose);

if (releasedConnection != null) {

eventListener.connectionReleased(call, releasedConnection);

}

if (foundPooledConnection) {

eventListener.connectionAcquired(call, result);

}

if (result != null) {

// If we found an already-allocated or pooled connection, we're done.

return result;

}

// If we need a route selection, make one. This is a blocking operation.

boolean newRouteSelection = false;

if (selectedRoute == null && (routeSelection == null || !routeSelection.hasNext())) {

newRouteSelection = true;

routeSelection = routeSelector.next();

}

synchronized (connectionPool) {

if (canceled) throw new IOException("Canceled");

if (newRouteSelection) {

// Now that we have a set of IP addresses, make another attempt at getting a connection from

// the pool. This could match due to connection coalescing.

// Walk every route and try the ConnectionPool again

List<Route> routes = routeSelection.getAll();

for (int i = 0, size = routes.size(); i < size; i++) {

Route route = routes.get(i);

Internal.instance.get(connectionPool, address, this, route);

if (connection != null) {

foundPooledConnection = true;

result = connection;

this.route = route;

break;

}

}

}

if (!foundPooledConnection) {

if (selectedRoute == null) {

selectedRoute = routeSelection.next();

}

// Create a connection and assign it to this allocation immediately. This makes it possible

// for an asynchronous cancel() to interrupt the handshake we're about to do.

route = selectedRoute;

refusedStreamCount = 0;

result = new RealConnection(connectionPool, selectedRoute);

acquire(result, false);

}

}

// If we found a pooled connection on the 2nd time around, we're done.

if (foundPooledConnection) {

eventListener.connectionAcquired(call, result);

return result;

}

// Do TCP + TLS handshakes. This is a blocking operation.

// Open the actual network connection

result.connect(

connectTimeout, readTimeout, writeTimeout, connectionRetryEnabled, call, eventListener);

routeDatabase().connected(result.route());

Socket socket = null;

synchronized (connectionPool) {

reportedAcquired = true;

// Pool the connection.

// The connection is established: add it to the pool

Internal.instance.put(connectionPool, result);

// If another multiplexed connection to the same address was created concurrently, then

// release this connection and acquire that one.

if (result.isMultiplexed()) {

socket = Internal.instance.deduplicate(connectionPool, address, this);

result = connection;

}

}

closeQuietly(socket);

eventListener.connectionAcquired(call, result);

return result;

}

~~~

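That is a lot of code. The key steps are annotated, and the overall flow is:

1. If this StreamAllocation already holds a Connection, return it (reuse whenever possible)

2. If not, try to get one from the ConnectionPool

3. If that also fails, select routes and try the ConnectionPool again for each route

4. If there is still nothing, create a new Connection and perform the actual network connect

5. Add the new Connection to the ConnectionPool and return it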
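The lookup order above can be sketched with a map standing in for the pool. Everything here is an assumption made for illustration: `FindConnectionDemo`, its `Allocation` class, and the String connection ids are hypothetical, the route-based second pool lookup is skipped, and pooled connections are treated as always shareable, which real HTTP/1.1 connections are not.

```java
import java.util.HashMap;
import java.util.Map;

public class FindConnectionDemo {
    // Hypothetical stand-in for StreamAllocation, reduced to the lookup order.
    static final class Allocation {
        private final Map<String, String> pool; // shared: address -> connection id
        private final String address;
        private String connection;              // connection already held by this allocation

        Allocation(Map<String, String> pool, String address) {
            this.pool = pool;
            this.address = address;
        }

        String findConnection() {
            if (connection != null) return connection;  // 1. already allocated: reuse it
            connection = pool.get(address);              // 2. look in the shared pool
            if (connection != null) return connection;
            // 3. nothing reusable: create one (the real code connects the socket here)
            connection = "conn-" + (pool.size() + 1) + "@" + address;
            pool.put(address, connection);               // 4. pool it for later calls
            return connection;
        }
    }

    public static void main(String[] args) {
        Map<String, String> pool = new HashMap<>();
        Allocation first = new Allocation(pool, "example.com:443");
        Allocation second = new Allocation(pool, "example.com:443");
        System.out.println(first.findConnection());   // conn-1@example.com:443
        System.out.println(second.findConnection());  // conn-1@example.com:443 (from the pool)
        System.out.println(pool.size());              // 1
    }
}
```

The second allocation never creates anything, which is the whole point of the method: a new connection is the last resort.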

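True to its name, findConnection is mostly about finding a Connection to reuse: reuse if possible, otherwise build a new one. The call to focus on is `result.connect()`, which turns the new object into a Connection that can actually carry traffic: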

~~~

public void connect(int connectTimeout, int readTimeout, int writeTimeout,

int pingIntervalMillis, boolean connectionRetryEnabled, Call call,

EventListener eventListener) {

// Check whether the connection is already established; protocol holds the negotiated protocol (protocols were covered with the OkHttpClient Builder parameters)

if (protocol != null) throw new IllegalStateException("already connected");

RouteException routeException = null;

// The connection specs (TLS versions and cipher suites) configured for this address

List<ConnectionSpec> connectionSpecs = route.address().connectionSpecs();

// Picks which ConnectionSpec each connection attempt uses

ConnectionSpecSelector connectionSpecSelector = new ConnectionSpecSelector(connectionSpecs);

while (true) {

try {

// Does this route need a tunnel (HTTPS through an HTTP proxy)?

if (route.requiresTunnel()) {

connectTunnel(connectTimeout, readTimeout, writeTimeout, call, eventListener);

if (rawSocket == null) {

// We were unable to connect the tunnel but properly closed down our resources.

break;

}

} else {

connectSocket(connectTimeout, readTimeout, call, eventListener);

}

establishProtocol(connectionSpecSelector, pingIntervalMillis, call, eventListener);

eventListener.connectEnd(call, route.socketAddress(), route.proxy(), protocol);

break;

} catch (IOException e) {

closeQuietly(socket);

closeQuietly(rawSocket);

socket = null;

rawSocket = null;

source = null;

sink = null;

handshake = null;

protocol = null;

http2Connection = null;

eventListener.connectFailed(call, route.socketAddress(), route.proxy(), null, e);

}

}

}

~~~

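Only the key code is kept here. Step by step:

1. Check whether protocol is non-null; if it is, throw an exception (the connection already exists).

2. Get the list of ConnectionSpecs configured for the address.

3. Build a ConnectionSpecSelector from that list (it chooses which ConnectionSpec to apply to a connection attempt).

4. Enter the while loop: `route.requiresTunnel()` decides whether a tunnel connection is needed; otherwise a plain socket connection is made.

5. Call establishProtocol to negotiate and set the protocol (for HTTPS this is where the TLS handshake happens). The HTTP request itself is sent later, in CallServerInterceptor.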
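
The excerpt above does not show how the loop ends after repeated failures. One plausible shape, as a sketch only: try each configuration in turn and give up when none is left. `ConnectRetrySketch`, `Attempt` and `connectWithFallback` are hypothetical names, and the configurations are plain strings rather than real ConnectionSpec objects.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.List;

/** Sketch of "try, reset on failure, fall back to the next configuration". */
final class ConnectRetrySketch {
  /** Hypothetical hook that performs one connection attempt with a named configuration. */
  interface Attempt {
    void connect(String spec) throws IOException;
  }

  /** Returns the configuration that succeeded; throws once every configuration has failed. */
  static String connectWithFallback(List<String> specs, Attempt attempt) {
    if (specs.isEmpty()) {
      throw new IllegalArgumentException("no configurations supplied");
    }
    IOException last = null;
    for (String spec : specs) {
      try {
        attempt.connect(spec);
        return spec; // success ends the loop, like the break in the excerpt
      } catch (IOException e) {
        last = e; // a real client would also close sockets and reset its fields here
      }
    }
    throw new UncheckedIOException("all configurations failed", last);
  }

  public static void main(String[] args) {
    List<String> specs = Arrays.asList("modern", "compatible");
    String used = connectWithFallback(specs, spec -> {
      if (spec.equals("modern")) {
        throw new IOException("handshake failed");
      }
    });
    System.out.println(used);
  }
}
```

The design point is that a failed attempt leaves no half-open state behind, so the next attempt starts clean with a different configuration.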

### **ConnectionPool**

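Whether the [protocol](https://www.jianshu.com/p/52d86558ca57) is HTTP/1.1 with its Keep-Alive mechanism or HTTP/2 with multiplexing, a connection pool is needed to manage the connections. OkHttp models the link between client and server as a Connection, and RealConnection is its implementation. ConnectionPool manages all Connections: within a limited time window it decides whether a Connection is reused or kept open, and it cleans the Connection up promptly once it times out.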

#### The get method

~~~

// Whenever a Connection is to be reused, ConnectionPool's get method is called to obtain it

@Nullable RealConnection get(Address address, StreamAllocation streamAllocation, Route route) {

assert (Thread.holdsLock(this));

for (RealConnection connection : connections) {

if (connection.isEligible(address, route)) {

streamAllocation.acquire(connection, true);

return connection;

}

}

return null;

}

~~~

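The for loop walks the connections queue and uses the address and route to decide whether a connection can be reused. If it can, streamAllocation.acquire is called and the connection is returned. This is how, in findConnection above, ConnectionPool finds and returns a reusable Connection.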

~~~

/**

* Use this allocation to hold {@code connection}. Each call to this must be paired with a call to

* {@link #release} on the same connection.

*/

public void acquire(RealConnection connection, boolean reportedAcquired) {

assert (Thread.holdsLock(connectionPool));

if (this.connection != null) throw new IllegalStateException();

this.connection = connection;

this.reportedAcquired = reportedAcquired;

connection.allocations.add(new StreamAllocationReference(this, callStackTrace));

}

public final List<Reference<StreamAllocation>> allocations = new ArrayList<>();

~~~

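First, the RealConnection obtained from the pool is assigned to the StreamAllocation's connection field. Then a weak reference to the StreamAllocation is added to the RealConnection's allocations list. The size of this list tells how many StreamAllocations currently hold the connection, and it is used to judge whether the connection's load exceeds the maximum OkHttp allows.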
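
To make the "list size as load" idea concrete, here is a minimal sketch. It assumes a per-connection cap called `allocationLimit`; the class names and the `hasCapacity` helper are hypothetical, and the users are plain `Object`s rather than StreamAllocations.

```java
import java.lang.ref.Reference;
import java.lang.ref.WeakReference;
import java.util.ArrayList;
import java.util.List;

/** Sketch of tracking a connection's users through weak references. */
final class AllocationTrackingSketch {
  /** Hypothetical connection with an assumed cap on concurrent users. */
  static final class Conn {
    final int allocationLimit;
    final List<Reference<Object>> allocations = new ArrayList<>();

    Conn(int allocationLimit) {
      this.allocationLimit = allocationLimit;
    }

    /** The list size is the current load; compare it with the cap. */
    boolean hasCapacity() {
      return allocations.size() < allocationLimit;
    }

    /** Records user as a holder of this connection; returns false when it is full. */
    boolean acquire(Object user) {
      if (!hasCapacity()) {
        return false;
      }
      allocations.add(new WeakReference<>(user));
      return true;
    }
  }

  public static void main(String[] args) {
    Conn singleUse = new Conn(1);
    Object first = new Object();
    Object second = new Object();
    System.out.println(singleUse.acquire(first));
    System.out.println(singleUse.acquire(second));
  }
}
```

Weak references are used so that the connection never keeps a forgotten user alive, which is also what makes leak detection possible later in the cleanup code.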

#### The put method

As mentioned for findConnection, when no reusable Connection can be found in ConnectionPool, a new Connection is created and added to ConnectionPool through the put method:

~~~

void put(RealConnection connection) {

assert (Thread.holdsLock(this));

if (!cleanupRunning) {

cleanupRunning = true;

// Start the asynchronous cleanup thread

executor.execute(cleanupRunnable);

}

connections.add(connection);

}

private final Deque<RealConnection> connections = new ArrayDeque<>();

~~~

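As we can see, before adding to the queue the code checks cleanupRunning (whether a cleanup is already running). If none is running, it schedules cleanupRunnable, an asynchronous task that reclaims invalid connections from the pool. How does it work internally?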

~~~

private final Runnable cleanupRunnable = new Runnable() {

@Override public void run() {

while (true) {

// Time to wait before the next cleanup

long waitNanos = cleanup(System.nanoTime());

if (waitNanos == -1) return;

if (waitNanos > 0) {

long waitMillis = waitNanos / 1000000L;

waitNanos -= (waitMillis * 1000000L);

synchronized (ConnectionPool.this) {

try {

// Wait for the interval; the lock is released while waiting

ConnectionPool.this.wait(waitMillis, (int) waitNanos);

} catch (InterruptedException ignored) {

}

}

}

}

}

};

~~~
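
The millisecond and nanosecond arithmetic in cleanupRunnable exists because `Object.wait(long, int)` takes whole milliseconds plus a remainder of 0 to 999999 nanoseconds. A minimal sketch of just that split (the class and method names are made up for illustration):

```java
/** Sketch of splitting a nanosecond delay for Object.wait(long millis, int nanos). */
final class WaitSplitSketch {
  /** Returns {wholeMillis, leftoverNanos} for the given delay in nanoseconds. */
  static long[] split(long waitNanos) {
    long waitMillis = waitNanos / 1_000_000L;
    long leftoverNanos = waitNanos - waitMillis * 1_000_000L;
    return new long[] {waitMillis, leftoverNanos};
  }

  public static void main(String[] args) {
    long[] parts = split(5_000_123L);
    System.out.println(parts[0] + " ms + " + parts[1] + " ns");
  }
}
```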

The key part is how the cleanup method evicts invalid connections:

~~~

long cleanup(long now) {

int inUseConnectionCount = 0;

int idleConnectionCount = 0;

RealConnection longestIdleConnection = null;

long longestIdleDurationNs = Long.MIN_VALUE;

// Find either a connection to evict, or the time that the next eviction is due.

synchronized (this) {

// Iterate over the RealConnections

for (Iterator<RealConnection> i = connections.iterator(); i.hasNext(); ) {

RealConnection connection = i.next();

// If the connection is in use, keep searching.

// If the connection is in use, increment inUseConnectionCount; otherwise increment idleConnectionCount

if (pruneAndGetAllocationCount(connection, now) > 0) {

// Number of connections in use

inUseConnectionCount++;

continue;

}

// Number of idle connections

idleConnectionCount++;

// If the connection is ready to be evicted, we're done.

long idleDurationNs = now - connection.idleAtNanos;

if (idleDurationNs > longestIdleDurationNs) {

longestIdleDurationNs = idleDurationNs;

longestIdleConnection = connection;

}

}

// Decide whether to evict now or how long to wait before the next pass

if (longestIdleDurationNs >= this.keepAliveDurationNs

|| idleConnectionCount > this.maxIdleConnections) {

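// If the longest-idle connection has reached the keep-alive time, or there are more idle connections than allowed (5 by default), remove it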

// We've found a connection to evict. Remove it from the list, then close it below (outside

// of the synchronized block).

connections.remove(longestIdleConnection);

} else if (idleConnectionCount > 0) {

// Idle connections exist: return the keep-alive time minus the longest idle time

// A connection will be ready to evict soon.

return keepAliveDurationNs - longestIdleDurationNs;

} else if (inUseConnectionCount > 0) {

// Every connection is in use: return the keep-alive time

// All connections are in use. It'll be at least the keep alive duration 'til we run again.

return keepAliveDurationNs;

} else {

// No connections at all: leave the endless loop

// No connections, idle or in use.

cleanupRunning = false;

return -1;

}

}

closeQuietly(longestIdleConnection.socket());

// Cleanup again immediately.

return 0;

}

~~~

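1. Loop over the connections queue. If the current connection is in use, `inUseConnectionCount` is incremented (active connections) and the loop continues with the next connection; otherwise `idleConnectionCount` is incremented (idle connections).

2. Check `whether this connection has been idle longer than the longest idle time seen so far`; if so, record it.

3. If `the longest idle time has reached 5 minutes, or there are more than 5 idle connections`, remove that connection from the pool, close its underlying socket, and return a wait time of 0 so the pool is scanned again immediately (the caller is an endless loop).

4. If step 3 does not apply, `check whether idleConnectionCount is greater than 0`. If it is, return the keep-alive time minus the longest idle time.

5. If step 4 does not apply, check whether any connection is in use; if so, return the keep-alive time.

6. If step 5 does not apply either, the pool holds no connections: `leave the endless loop and return -1`.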

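> This is arguably the core code. It borrows the idea of the mark-and-sweep algorithm from Java GC: the least active connection is marked and then removed. When a new Connection is put into the pool and no cleanup is running, the cleanup task starts and keeps scanning the whole connections queue, marking inactive connections and evicting them once they have been idle too long or there are too many of them. This is the heart of OkHttp's connection reuse.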
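
The return-value contract of cleanup (0, a positive delay, or -1) can be isolated in a small pure function. This is a sketch under the defaults stated above (5 minutes, 5 idle connections); `CleanupDecisionSketch` and `decide` are hypothetical names, and the input is simply a list of idle durations instead of real connections.

```java
/** Sketch of the decision made at the end of one cleanup pass. */
final class CleanupDecisionSketch {
  static final long KEEP_ALIVE_NS = 5L * 60L * 1_000_000_000L; // assumed default: 5 minutes
  static final int MAX_IDLE = 5;                               // assumed default: 5 idle connections

  /**
   * Returns 0 when a connection should be evicted right away, a positive delay in
   * nanoseconds until the next pass, or -1 when there is nothing left to manage.
   */
  static long decide(long[] idleDurationsNs, int inUseCount) {
    long longestIdleNs = Long.MIN_VALUE;
    for (long duration : idleDurationsNs) {
      longestIdleNs = Math.max(longestIdleNs, duration);
    }
    int idleCount = idleDurationsNs.length;

    if (longestIdleNs >= KEEP_ALIVE_NS || idleCount > MAX_IDLE) {
      return 0; // evict the longest-idle connection, then scan again immediately
    } else if (idleCount > 0) {
      return KEEP_ALIVE_NS - longestIdleNs; // time until the longest-idle one expires
    } else if (inUseCount > 0) {
      return KEEP_ALIVE_NS; // nothing idle yet; check again after one keep-alive period
    } else {
      return -1; // empty pool: the cleanup loop can stop
    }
  }

  public static void main(String[] args) {
    System.out.println(decide(new long[] {KEEP_ALIVE_NS}, 0));
    System.out.println(decide(new long[0], 0));
  }
}
```

Note that the eviction condition is an OR: either an expired connection or too many idle ones is enough to trigger a removal.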

One more thing to note: pruneAndGetAllocationCount is what determines whether a connection is idle or active:

~~~

private int pruneAndGetAllocationCount(RealConnection connection, long now) {

List<Reference<StreamAllocation>> references = connection.allocations;

for (int i = 0; i < references.size(); ) {

Reference<StreamAllocation> reference = references.get(i);

if (reference.get() != null) {

i++;

continue;

}

// We've discovered a leaked allocation. This is an application bug.

StreamAllocation.StreamAllocationReference streamAllocRef =

(StreamAllocation.StreamAllocationReference) reference;

String message = "A connection to " + connection.route().address().url()

+ " was leaked. Did you forget to close a response body?";

Platform.get().logCloseableLeak(message, streamAllocRef.callStackTrace);

references.remove(i);

connection.noNewStreams = true;

// If this was the last allocation, the connection is eligible for immediate eviction.

if (references.isEmpty()) {

connection.idleAtNanos = now - keepAliveDurationNs;

return 0;

}

}

return references.size();

}

~~~

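1. Loop over the RealConnection's allocations list and check whether each weak reference still points to a live StreamAllocation. If `reference.get()` returns null, nothing references that StreamAllocation any more (it was leaked without being released), so the reference is removed and the loop moves on (there is a catch here; consider it an Easter egg).

2. If the list becomes empty because of the removal in step 1, nothing is using the connection, so it is marked as immediately evictable and 0 is returned.

3. Otherwise, return the number of remaining references.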
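
The pruning loop is easy to try in isolation. The sketch below uses plain `Object`s and calls `Reference.clear()` to simulate a garbage-collected target, so it does not depend on GC timing; `PruneSketch` and `pruneAndCount` are illustrative names only.

```java
import java.lang.ref.Reference;
import java.lang.ref.WeakReference;
import java.util.ArrayList;
import java.util.List;

/** Sketch of dropping cleared weak references and counting the live ones. */
final class PruneSketch {
  /** Removes references whose target is gone and returns how many live ones remain. */
  static int pruneAndCount(List<Reference<Object>> references) {
    for (int i = 0; i < references.size(); ) {
      if (references.get(i).get() != null) {
        i++; // still alive: keep it and move on
        continue;
      }
      references.remove(i); // do not advance i: the next element shifted into slot i
    }
    return references.size();
  }

  public static void main(String[] args) {
    Object alive = new Object();
    Object leakedTarget = new Object();
    List<Reference<Object>> refs = new ArrayList<>();
    refs.add(new WeakReference<>(alive));
    WeakReference<Object> leaked = new WeakReference<>(leakedTarget);
    refs.add(leaked);
    leaked.clear(); // simulate the target having been garbage-collected
    System.out.println(pruneAndCount(refs));
    System.out.println(alive != null); // keeps the live target strongly reachable
  }
}
```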

## **CallServerInterceptor**

Responsible for sending the network request and receiving the server's response

~~~

/** This is the last interceptor in the chain. It makes a network call to the server. */

public final class CallServerInterceptor implements Interceptor {

private final boolean forWebSocket;

public CallServerInterceptor(boolean forWebSocket) {

this.forWebSocket = forWebSocket;

}

@Override public Response intercept(Chain chain) throws IOException {

// The interceptor chain

RealInterceptorChain realChain = (RealInterceptorChain) chain;

// HttpCodec: an interface wrapping the low-level I/O streams used to send and receive data

HttpCodec httpCodec = realChain.httpStream();

// Holds the components needed for the HTTP request

StreamAllocation streamAllocation = realChain.streamAllocation();

// The concrete implementation of Connection

RealConnection connection = (RealConnection) realChain.connection();

Request request = realChain.request();

long sentRequestMillis = System.currentTimeMillis();

realChain.eventListener().requestHeadersStart(realChain.call());

// Write the request headers to the socket first

httpCodec.writeRequestHeaders(request);

realChain.eventListener().requestHeadersEnd(realChain.call(), request);

Response.Builder responseBuilder = null;

// Check whether the method permits a request body and the request actually has one

if (HttpMethod.permitsRequestBody(request.method()) && request.body() != null) {

// If there's a "Expect: 100-continue" header on the request, wait for a "HTTP/1.1 100

// Continue" response before transmitting the request body. If we don't get that, return

// what we did get (such as a 4xx response) without ever transmitting the request body.

// Special case: if the request carries "Expect: 100-continue", flush the headers and read the response headers before sending the body

if ("100-continue".equalsIgnoreCase(request.header("Expect"))) {

httpCodec.flushRequest();

realChain.eventListener().responseHeadersStart(realChain.call());

responseBuilder = httpCodec.readResponseHeaders(true);

}

if (responseBuilder == null) {

// Write the request body if the "Expect: 100-continue" expectation was met.

realChain.eventListener().requestBodyStart(realChain.call());

long contentLength = request.body().contentLength();

CountingSink requestBodyOut =

new CountingSink(httpCodec.createRequestBody(request, contentLength));

BufferedSink bufferedRequestBody = Okio.buffer(requestBodyOut);

// Write the request body to the socket

request.body().writeTo(bufferedRequestBody);

bufferedRequestBody.close();

realChain.eventListener()

.requestBodyEnd(realChain.call(), requestBodyOut.successfulCount);

} else if (!connection.isMultiplexed()) {

// If the "Expect: 100-continue" expectation wasn't met, prevent the HTTP/1 connection

// from being reused. Otherwise we're still obligated to transmit the request body to

// leave the connection in a consistent state.

streamAllocation.noNewStreams();

}

}

// Finish writing the request

httpCodec.finishRequest();

if (responseBuilder == null) {

realChain.eventListener().responseHeadersStart(realChain.call());

// Read the response headers

responseBuilder = httpCodec.readResponseHeaders(false);

}

Response response = responseBuilder

.request(request)

.handshake(streamAllocation.connection().handshake())

.sentRequestAtMillis(sentRequestMillis)

.receivedResponseAtMillis(System.currentTimeMillis())

.build();

realChain.eventListener()

.responseHeadersEnd(realChain.call(), response);

int code = response.code();

// A WebSocket upgrade (101) gets an empty body; any other response gets the real body

if (forWebSocket && code == 101) {

// Connection is upgrading, but we need to ensure interceptors see a non-null response body.

response = response.newBuilder()

.body(Util.EMPTY_RESPONSE)

.build();

} else {

response = response.newBuilder()

.body(httpCodec.openResponseBody(response))

.build();

}

// If either side sent "Connection: close", forbid new streams on this connection

if ("close".equalsIgnoreCase(response.request().header("Connection"))

|| "close".equalsIgnoreCase(response.header("Connection"))) {

streamAllocation.noNewStreams();

}

if ((code == 204 || code == 205) && response.body().contentLength() > 0) {

throw new ProtocolException(

"HTTP " + code + " had non-zero Content-Length: " + response.body().contentLength());

}

return response;

}

~~~

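The code is actually simple. We only cover the OkHttp side here; for HttpCodec `(an interface wrapping the low-level I/O streams used to send and receive data)`, see the related literature. The flow is:

1. Initialize the objects and call `httpCodec.writeRequestHeaders(request)` to write the header information to the socket.

2. Check whether the method permits a request body and the request has one. `If so, one special case is handled: when the request carries the "Expect: 100-continue" header (a handshake of sorts)`, the headers are flushed and the response headers are read first.

3. If no response was obtained in step 2 (responseBuilder is null, meaning there was no "Expect: 100-continue" header or the expectation was met), write the request body. Otherwise the expectation was not met, and `if the connection is not multiplexed, noNewStreams() is called so that the connection is not reused`.

4. Finish writing the request.

5. `If responseBuilder is still null (the special case did not produce a response)`, read the response headers, then use the builder to construct a Response that carries the original request, the handshake, the time the request was sent and the time the response was received.

6. `Based on the status code and whether this is a WebSocket call, decide whether to return an empty body`: a WebSocket upgrade (101) gets an empty body, anything else reads the real body.

7. If the request or the response has the Connection header set to close, call noNewStreams() so that the connection is not used again.

8. Return the Response.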
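
Steps 2 and 3 are the easiest to get wrong, so here is the branch reduced to a pure function. It is a sketch only: `ExpectContinueSketch`, `Action` and the three boolean inputs are hypothetical, and it models just the decision, not the I/O.

```java
/** Sketch of the "Expect: 100-continue" branch as a pure decision. */
final class ExpectContinueSketch {
  enum Action {
    WRITE_BODY,                 // send the request body as usual
    SKIP_BODY,                  // body is not sent; a multiplexed connection stays usable
    SKIP_BODY_AND_RETIRE_CONNECTION // body is not sent; forbid reuse of this connection
  }

  /**
   * @param expectContinue whether the request carries "Expect: 100-continue"
   * @param earlyResponse  whether the server answered with a final response instead of 100 Continue
   * @param multiplexed    whether the connection carries several streams at once
   */
  static Action decide(boolean expectContinue, boolean earlyResponse, boolean multiplexed) {
    // Corresponds to responseBuilder != null in the excerpt.
    boolean haveResponse = expectContinue && earlyResponse;
    if (!haveResponse) {
      return Action.WRITE_BODY;
    }
    return multiplexed ? Action.SKIP_BODY : Action.SKIP_BODY_AND_RETIRE_CONNECTION;
  }

  public static void main(String[] args) {
    System.out.println(decide(false, false, false));
    System.out.println(decide(true, true, false));
  }
}
```

On a non-multiplexed connection the server may still expect the body that was announced, which is why skipping the body goes together with retiring the connection there.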

That completes the walkthrough of what OkHttp's five built-in interceptors do. The overall flow is roughly as follows:

Link: https://juejin.im/post/5c4682d2f265da6130752a1d