```python
#!/usr/bin/python3
# coding:utf-8
# @Author: Lin Misaka
# @File: net.py
# @Date: 2020/11/30
# @IDE: PyCharm
from os import listdir

import numpy as np
import matplotlib.pyplot as plt
import pickle

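# img2vector converts a 32x32 text image of 0/1 characters into a 1x1024 row vector
def img2vector(filename):
    returnVect = np.zeros((1, 1024))
    with open(filename) as fr:  # the file is closed automatically when the block ends
        for i in range(32):
            lineStr = fr.readline()
            for j in range(32):
                returnVect[0, 32 * i + j] = int(lineStr[j])
    return returnVect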

# Read the handwritten-digit .txt data
def handwritingData(dataPath):
    hwLabels = []
    FileList = listdir(dataPath)  # 1. list the directory contents
    m = len(FileList)
    digitalmat = np.zeros((m, 1024))
    for i in range(m):
        # 2. parse the class digit from the file name (the part before the first '_')
        fileNameStr = FileList[i]
        fileStr = fileNameStr.split('.')[0]  # strip the .txt extension
        classNumStr = int(fileStr.split('_')[0])
        hwLabels.append(classNumStr)
        # img2vector returns shape (1, 1024); row i of digitalmat has shape (1024,)
        digitalmat[i] = img2vector(dataPath + '/%s' % fileNameStr)
    return digitalmat, hwLabels

# With diff=True, return the derivative instead of the function value
def Sigmoid(x, diff=False):
    def sigmoid(x):  # the sigmoid function
        return 1 / (1 + np.exp(-x))

    def dsigmoid(x):  # its derivative, f(x) * (1 - f(x))
        f = sigmoid(x)
        return f * (1 - f)

    if diff:
        return dsigmoid(x)
    return sigmoid(x)

# With diff=True, return the derivative with respect to y_hat
def SquareErrorSum(y_hat, y, diff=False):
    if diff:
        return y_hat - y
    return (np.square(y_hat - y) * 0.5).sum()

class Net():
    def __init__(self):
        # X: input column vector (32 * 32 = 1024 pixels)
        self.X = np.random.randn(1024, 1)
        self.W1 = np.random.randn(16, 1024)
        self.b1 = np.random.randn(16, 1)
        self.W2 = np.random.randn(16, 16)
        self.b2 = np.random.randn(16, 1)
        self.W3 = np.random.randn(10, 16)
        self.b3 = np.random.randn(10, 1)
        self.alpha = 0.01  # learning rate
        self.losslist = []  # loss history, used for plotting

    def forward(self, X, y, activate):
        self.X = X
        self.z1 = np.dot(self.W1, self.X) + self.b1
        self.a1 = activate(self.z1)
        self.z2 = np.dot(self.W2, self.a1) + self.b2
        self.a2 = activate(self.z2)
        self.z3 = np.dot(self.W3, self.a2) + self.b3
        self.y_hat = activate(self.z3)
        Loss = SquareErrorSum(self.y_hat, y)
        return Loss, self.y_hat

    def backward(self, y, activate):
        # Error terms (deltas), propagated from the output layer back to the first layer
        self.delta3 = activate(self.z3, True) * SquareErrorSum(self.y_hat, y, True)
        self.delta2 = activate(self.z2, True) * (np.dot(self.W3.T, self.delta3))
        self.delta1 = activate(self.z1, True) * (np.dot(self.W2.T, self.delta2))
        # Weight gradients: each layer's delta times that layer's transposed input
        dW3 = np.dot(self.delta3, self.a2.T)
        dW2 = np.dot(self.delta2, self.a1.T)
        dW1 = np.dot(self.delta1, self.X.T)
        # Bias gradients are the deltas themselves
        d3 = self.delta3
        d2 = self.delta2
        d1 = self.delta1
        # Update weights and biases by gradient descent
        self.W3 -= self.alpha * dW3
        self.W2 -= self.alpha * dW2
        self.W1 -= self.alpha * dW1
        self.b3 -= self.alpha * d3
        self.b2 -= self.alpha * d2
        self.b1 -= self.alpha * d1

    def setLearnrate(self, l):
        self.alpha = l

    def save(self, path):
        obj = pickle.dumps(self)
        with open(path, "wb") as f:
            f.write(obj)

    @staticmethod
    def load(path):  # takes no self; call it on the class, as in Net.load(path)
        obj = None
        with open(path, "rb") as f:
            try:
                obj = pickle.load(f)
            except Exception:
                print("IOError")
        return obj

def train(self, trainMat, trainLabels, Epoch=5, bitch=None):

for epoch in range(Epoch):

acc = 0.0

acc_cnt = 0

label = np.zeros((10, 1))#先生成一個10x1是向量,減少運算。用于生成one_hot格式的label

for i in range(len(trainMat)):#可以用batch,數據較少,一次訓練所有數據集

X = trainMat[i, :].reshape((1024, 1)) #生成輸入

labelidx = trainLabels[i]

label[labelidx][0] = 1.0

Loss, y_hat = self.forward(X, label, Sigmoid)#前向傳播

self.backward(label, Sigmoid)#反向傳播

label[labelidx][0] = 0.0#還原為0向量

acc_cnt += int(trainLabels[i] == np.argmax(y_hat))

acc = acc_cnt / len(trainMat)

self.losslist.append(Loss)

print("epoch:%d,loss:%02f,accrucy : %02f%%" % (epoch, Loss, acc*100))

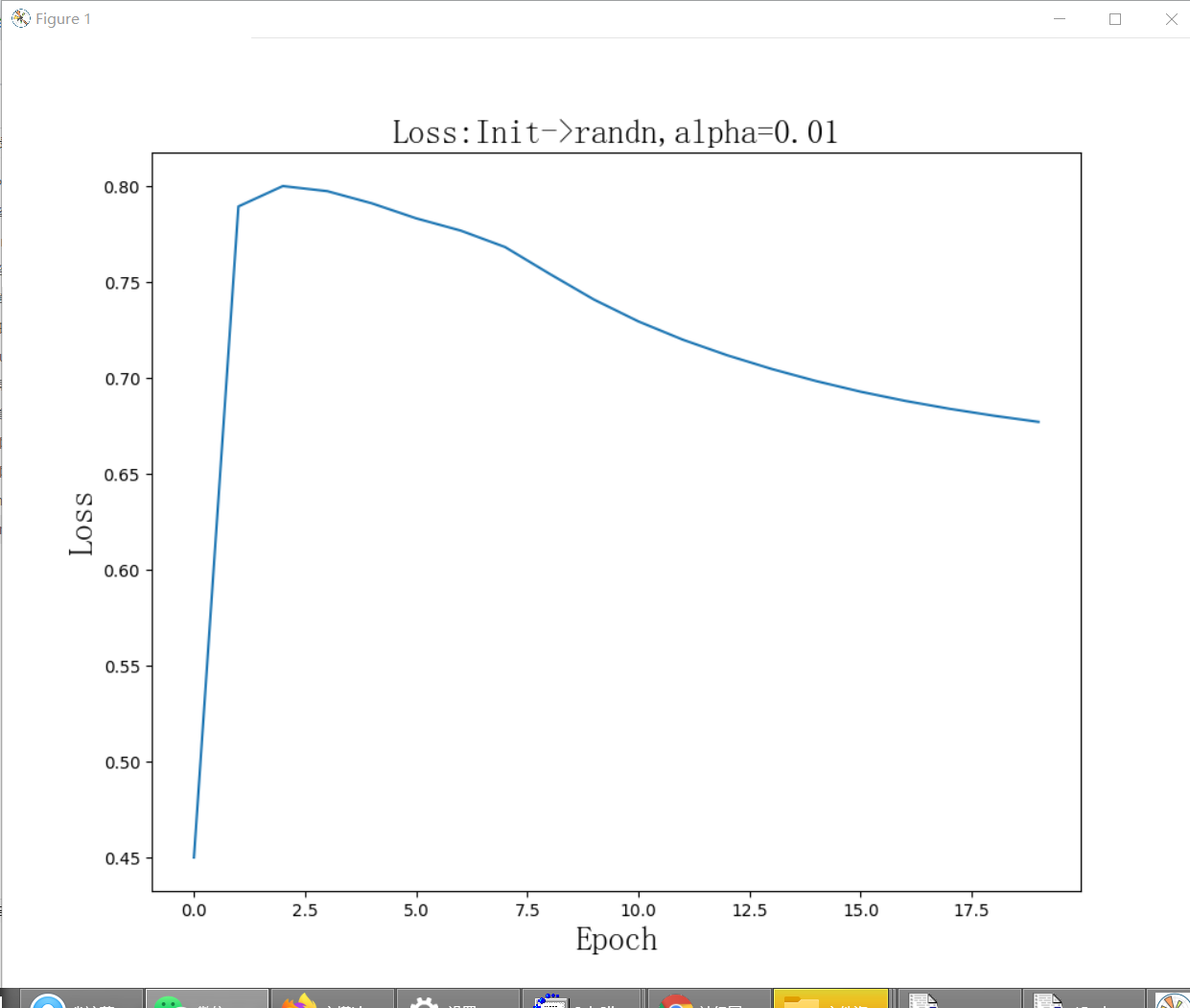

self.plotLosslist(self.losslist, "Loss:Init->randn,alpha=0.01")

    def plotLosslist(self, Loss, title):
        font = {'family': 'simsun',
                'weight': 'bold',
                'size': 20,
                }
        m = len(Loss)
        X = range(m)
        # plt.figure(1)
        plt.subplots(nrows=1, ncols=1, figsize=(10, 8))
        plt.subplot(111)
        plt.title(title, font)
        plt.plot(X, Loss)
        plt.xlabel(r'Epoch', font)
        plt.ylabel(u'Loss', font)
        plt.show()

    def test(self, testMat, testLabels, batch=None):
        acc = 0.0
        acc_cnt = 0
        label = np.zeros((10, 1))  # allocate one 10x1 vector up front and reuse it as the one-hot label
        if batch is None:
            batch = len(testMat)
        for i in range(batch):  # evaluate the first `batch` samples (all of them by default)
            X = testMat[i, :].reshape((1024, 1))  # build the input column vector
            labelidx = testLabels[i]
            label[labelidx][0] = 1.0
            Loss, y_hat = self.forward(X, label, Sigmoid)  # forward pass
            label[labelidx][0] = 0.0  # reset to the zero vector
            acc_cnt += int(testLabels[i] == np.argmax(y_hat))
        acc = acc_cnt / batch
        print("test num: %d, accrucy : %05.3f%%"%(batch,acc*100))

# Load the training data
trainDataPath = "./trainingDigits"
trainMat, trainLabels = handwritingData(trainDataPath)
# Load the test data
testDataPath = "./testDigits"
testMat, testLabels = handwritingData(testDataPath)

net = Net()
net.setLearnrate(0.01)
net.train(trainMat, trainLabels, Epoch=20)  # net.train(trainMat, trainLabels, Epoch=200)
net.save("hr.model")
net.test(testMat, testLabels)

# Reload the saved model and run the same test again
newmodel = Net.load("hr.model")
newmodel.test(testMat, testLabels)

```
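The `backward` method above relies on the output-layer rule `delta = sigmoid'(z) * (y_hat - y)` with the weight gradient `delta · inputᵀ`. One common way to gain confidence in formulas like these is a finite-difference check. The following is a minimal sketch on a tiny single-layer network, not part of the original script: the names `loss_fn`, `analytic_grad`, and `numeric_grad_W`, the 3x4 layer size, and the step `eps` are all assumptions chosen for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def loss_fn(W, b, x, y):
    # Same loss as SquareErrorSum: half the sum of squared errors
    y_hat = sigmoid(W @ x + b)
    return 0.5 * np.sum((y_hat - y) ** 2)


def analytic_grad(W, b, x, y):
    # Delta rule for a sigmoid output layer with squared-error loss
    y_hat = sigmoid(W @ x + b)
    delta = y_hat * (1.0 - y_hat) * (y_hat - y)
    return delta @ x.T, delta  # gradients w.r.t. W and b


def numeric_grad_W(W, b, x, y, eps=1e-6):
    # Central differences, one weight at a time (hypothetical helper)
    grad = np.zeros_like(W)
    for idx in np.ndindex(*W.shape):
        W_plus = W.copy()
        W_minus = W.copy()
        W_plus[idx] += eps
        W_minus[idx] -= eps
        grad[idx] = (loss_fn(W_plus, b, x, y) - loss_fn(W_minus, b, x, y)) / (2 * eps)
    return grad


rng = np.random.default_rng(0)
W = rng.standard_normal((3, 4))
b = rng.standard_normal((3, 1))
x = rng.standard_normal((4, 1))
y = np.array([[1.0], [0.0], [0.0]])  # one-hot target

dW, db = analytic_grad(W, b, x, y)
max_diff = np.max(np.abs(dW - numeric_grad_W(W, b, x, y)))
print("largest gap between analytic and numeric gradient:", max_diff)
```

If the two gradients agree to many decimal places, the delta formula is most likely implemented correctly. The same idea could be extended to the three-layer `Net`, at the cost of a slower check.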


>>>

===================== RESTART: E:\012digits210201\net.py =====================

epoch:0,loss:0.884695,accrucy : 29.452055%

epoch:1,loss:0.803985,accrucy : 40.924658%

epoch:2,loss:0.801575,accrucy : 48.630137%

epoch:3,loss:0.804054,accrucy : 55.308219%

epoch:4,loss:0.801643,accrucy : 61.815068%

epoch:5,loss:0.794812,accrucy : 66.780822%

epoch:6,loss:0.785229,accrucy : 70.376712%

epoch:7,loss:0.773085,accrucy : 74.657534%

epoch:8,loss:0.758082,accrucy : 78.253425%

epoch:9,loss:0.740774,accrucy : 80.479452%

epoch:10,loss:0.724316,accrucy : 83.390411%

epoch:11,loss:0.710766,accrucy : 85.787671%

epoch:12,loss:0.700182,accrucy : 87.328767%

epoch:13,loss:0.691778,accrucy : 88.869863%

epoch:14,loss:0.684741,accrucy : 89.897260%

epoch:15,loss:0.678793,accrucy : 90.924658%

epoch:16,loss:0.673885,accrucy : 91.438356%

epoch:17,loss:0.670210,accrucy : 91.438356%

epoch:18,loss:0.667625,accrucy : 91.780822%

epoch:19,loss:0.665471,accrucy : 92.123288%

test num: 276, accrucy : 94.565%

test num: 276, accrucy : 94.565%

>>>
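In the log above, the `loss` column is the squared error of the last sample processed in each epoch, because `train` overwrites `Loss` on every iteration, so it may not reflect the whole training set. A minimal sketch of tracking the per-epoch mean instead is shown here. The `EpochStats` helper and the sample numbers fed to it are hypothetical and do not come from the original run.

```python
class EpochStats:
    """Accumulates loss and correctness over one epoch (hypothetical helper)."""

    def __init__(self):
        self.loss_sum = 0.0
        self.correct = 0
        self.count = 0

    def update(self, loss, predicted, target):
        # Call once per sample, right after the forward pass
        self.loss_sum += float(loss)
        self.correct += int(predicted == target)
        self.count += 1

    def mean_loss(self):
        return self.loss_sum / self.count if self.count else 0.0

    def accuracy(self):
        return self.correct / self.count if self.count else 0.0


# Made-up (loss, predicted digit, true digit) triples, for illustration only
stats = EpochStats()
for loss, predicted, target in [(0.5, 3, 3), (0.25, 1, 7), (0.75, 2, 2), (0.5, 9, 9)]:
    stats.update(loss, predicted, target)

print("loss:%f, accuracy:%.1f%%" % (stats.mean_loss(), stats.accuracy() * 100))
```

Inside `train`, one such object could be created at the start of each epoch and `stats.mean_loss()` appended to `losslist` in place of the last sample's `Loss`.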
