# 五、文本預處理

> 作者:[Chris Albon](https://chrisalbon.com/)

>

> 譯者:[飛龍](https://github.com/wizardforcel)

>

> 協議:[CC BY-NC-SA 4.0](http://creativecommons.org/licenses/by-nc-sa/4.0/)

## 詞袋

```py

# 加載庫

import numpy as np

from sklearn.feature_extraction.text import CountVectorizer

import pandas as pd

# 創建文本

text_data = np.array(['I love Brazil. Brazil!',

'Sweden is best',

'Germany beats both'])

# 創建詞袋特征矩陣

count = CountVectorizer()

bag_of_words = count.fit_transform(text_data)

# 展示特征矩陣

bag_of_words.toarray()

'''

array([[0, 0, 0, 2, 0, 0, 1, 0],

[0, 1, 0, 0, 0, 1, 0, 1],

[1, 0, 1, 0, 1, 0, 0, 0]], dtype=int64)

'''

# 獲取特征名稱

feature_names = count.get_feature_names()

# 查看特征名稱

feature_names

# ['beats', 'best', 'both', 'brazil', 'germany', 'is', 'love', 'sweden']

# 創建數據幀

pd.DataFrame(bag_of_words.toarray(), columns=feature_names)

```

| | beats | best | both | brazil | germany | is | love | sweden |

| --- | --- | --- | --- | --- | --- | --- | --- | --- |

| 0 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 0 |

| 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 |

| 2 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 0 |

## 解析 HTML

```py

# 加載庫

from bs4 import BeautifulSoup

# 創建一些 HTML 代碼

html = "<div class='full_name'><span style='font-weight:bold'>Masego</span> Azra</div>"

# 解析 html

soup = BeautifulSoup(html, "lxml")

# 尋找帶有 "full_name" 類的 <div>,展示文本

soup.find("div", { "class" : "full_name" }).text

# 'Masego Azra'

```

## 移除標點

```py

# 加載庫

import string

import numpy as np

# 創建文本

text_data = ['Hi!!!! I. Love. This. Song....',

'10000% Agree!!!! #LoveIT',

'Right?!?!']

# 創建函數,使用 string.punctuation 移除所有標點

def remove_punctuation(sentence: str) -> str:

return sentence.translate(str.maketrans('', '', string.punctuation))

# 應用函數

[remove_punctuation(sentence) for sentence in text_data]

# ['Hi I Love This Song', '10000 Agree LoveIT', 'Right']

```

## 移除停止詞

```py

# 加載庫

from nltk.corpus import stopwords

# 你第一次需要下載停止詞的集合

import nltk

nltk.download('stopwords')

'''

[nltk_data] Downloading package stopwords to

[nltk_data] /Users/chrisalbon/nltk_data...

[nltk_data] Package stopwords is already up-to-date!

True

'''

# 創建單詞標記

tokenized_words = ['i', 'am', 'going', 'to', 'go', 'to', 'the', 'store', 'and', 'park']

# 加載停止詞

stop_words = stopwords.words('english')

# 展示停止詞

stop_words[:5]

# ['i', 'me', 'my', 'myself', 'we']

# 移除停止詞

[word for word in tokenized_words if word not in stop_words]

# ['going', 'go', 'store', 'park']

```

## 替換字符

```py

# 導入庫

import re

# 創建文本

text_data = ['Interrobang. By Aishwarya Henriette',

'Parking And Going. By Karl Gautier',

'Today Is The night. By Jarek Prakash']

# 移除句號

remove_periods = [string.replace('.', '') for string in text_data]

# 展示文本

remove_periods

'''

['Interrobang By Aishwarya Henriette',

'Parking And Going By Karl Gautier',

'Today Is The night By Jarek Prakash']

'''

# 創建函數

def replace_letters_with_X(string: str) -> str:

return re.sub(r'[a-zA-Z]', 'X', string)

# 應用函數

[replace_letters_with_X(string) for string in remove_periods]

'''

['XXXXXXXXXXX XX XXXXXXXXX XXXXXXXXX',

'XXXXXXX XXX XXXXX XX XXXX XXXXXXX',

'XXXXX XX XXX XXXXX XX XXXXX XXXXXXX']

'''

```

## 詞干提取

```py

# 加載庫

from nltk.stem.porter import PorterStemmer

# 創建單詞標記

tokenized_words = ['i', 'am', 'humbled', 'by', 'this', 'traditional', 'meeting']

```

詞干提取通過識別和刪除詞綴(例如動名詞)同時保持詞的根本意義,將詞語簡化為詞干。 NLTK 的`PorterStemmer`實現了廣泛使用的 Porter 詞干算法。

```py

# 創建提取器

porter = PorterStemmer()

# 應用提取器

[porter.stem(word) for word in tokenized_words]

# ['i', 'am', 'humbl', 'by', 'thi', 'tradit', 'meet']

```

## 移除空白

```py

# 創建文本

text_data = [' Interrobang. By Aishwarya Henriette ',

'Parking And Going. By Karl Gautier',

' Today Is The night. By Jarek Prakash ']

# 移除空白

strip_whitespace = [string.strip() for string in text_data]

# 展示文本

strip_whitespace

'''

['Interrobang. By Aishwarya Henriette',

'Parking And Going. By Karl Gautier',

'Today Is The night. By Jarek Prakash']

'''

```

## 詞性標簽

```py

# 加載庫

from nltk import pos_tag

from nltk import word_tokenize

# 創建文本

text_data = "Chris loved outdoor running"

# 使用預訓練的詞性標注器

text_tagged = pos_tag(word_tokenize(text_data))

# 展示詞性

text_tagged

# [('Chris', 'NNP'), ('loved', 'VBD'), ('outdoor', 'RP'), ('running', 'VBG')]

```

輸出是一個元組列表,包含單詞和詞性的標記。 NLTK 使用 Penn Treebank 詞性標簽。

| 標簽 | 詞性 |

| --- | --- |

| NNP | 專有名詞,單數 |

| NN | 名詞,單數或集體 |

| RB | 副詞 |

| VBD | 動詞,過去式 |

| VBG | 動詞,動名詞或現在分詞 |

| JJ | 形容詞 |

| PRP | 人稱代詞 |

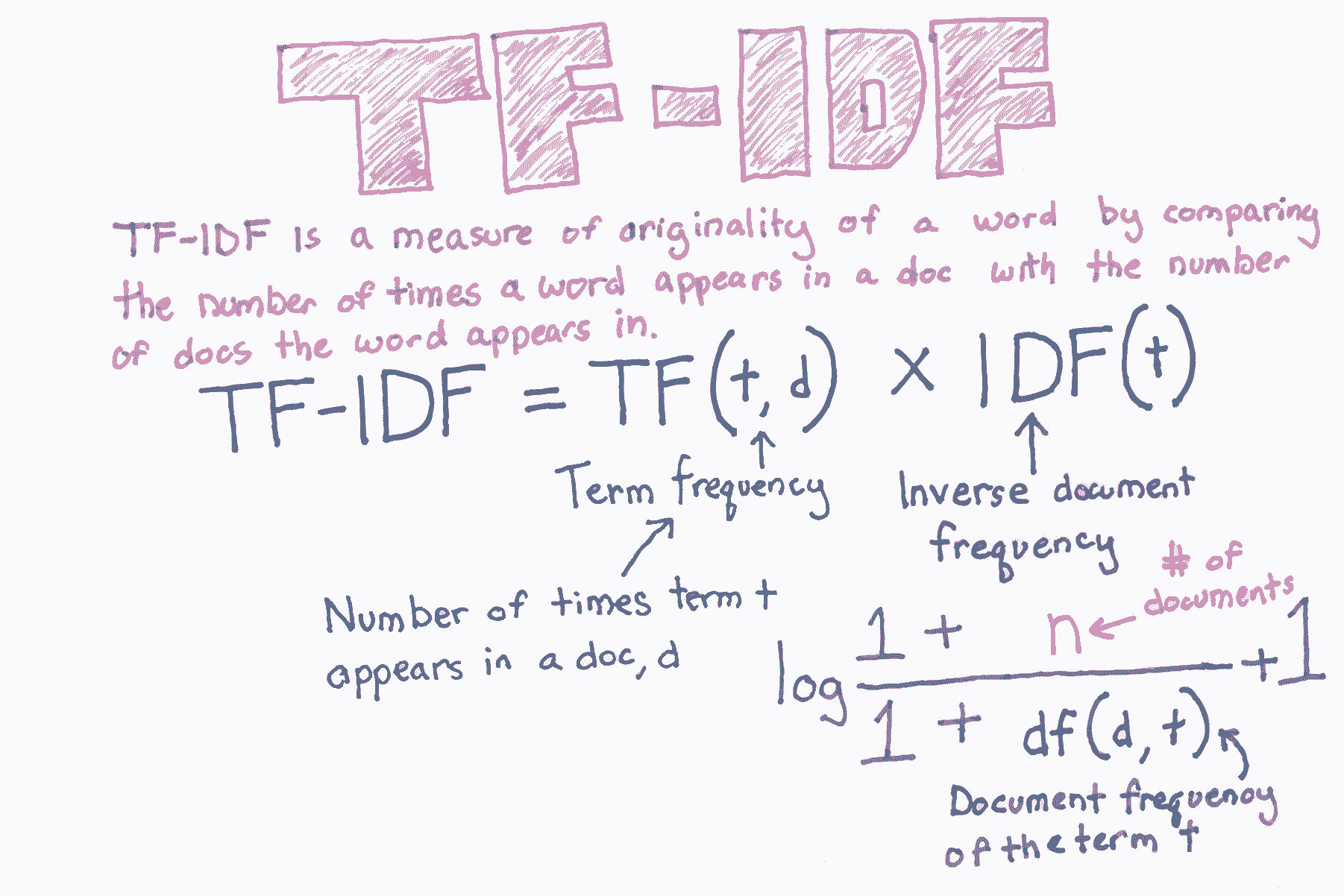

## TF-IDF

```py

# 加載庫

import numpy as np

from sklearn.feature_extraction.text import TfidfVectorizer

import pandas as pd

# 創建文本

text_data = np.array(['I love Brazil. Brazil!',

'Sweden is best',

'Germany beats both'])

# 創建 tf-idf 特征矩陣

tfidf = TfidfVectorizer()

feature_matrix = tfidf.fit_transform(text_data)

# 展示 tf-idf 特征矩陣

feature_matrix.toarray()

'''

array([[ 0. , 0. , 0. , 0.89442719, 0. ,

0. , 0.4472136 , 0. ],

[ 0. , 0.57735027, 0. , 0. , 0. ,

0.57735027, 0. , 0.57735027],

[ 0.57735027, 0. , 0.57735027, 0. , 0.57735027,

0. , 0. , 0. ]])

'''

# 展示 tf-idf 特征矩陣

tfidf.get_feature_names()

# ['beats', 'best', 'both', 'brazil', 'germany', 'is', 'love', 'sweden']

# 創建數據幀

pd.DataFrame(feature_matrix.toarray(), columns=tfidf.get_feature_names())

```

| | beats | best | both | brazil | germany | is | love | sweden |

| --- | --- | --- | --- | --- | --- | --- | --- | --- |

| 0 | 0.00000 | 0.00000 | 0.00000 | 0.894427 | 0.00000 | 0.00000 | 0.447214 | 0.00000 |

| 1 | 0.00000 | 0.57735 | 0.00000 | 0.000000 | 0.00000 | 0.57735 | 0.000000 | 0.57735 |

| 2 | 0.57735 | 0.00000 | 0.57735 | 0.000000 | 0.57735 | 0.00000 | 0.000000 | 0.00000 |

## 文本分詞

```py

# 加載庫

from nltk.tokenize import word_tokenize, sent_tokenize

# 創建文本

string = "The science of today is the technology of tomorrow. Tomorrow is today."

# 對文本分詞

word_tokenize(string)

'''

['The',

'science',

'of',

'today',

'is',

'the',

'technology',

'of',

'tomorrow',

'.',

'Tomorrow',

'is',

'today',

'.']

'''

# 對句子分詞

sent_tokenize(string)

# ['The science of today is the technology of tomorrow.', 'Tomorrow is today.']

```