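# How to Develop Baseline Forecasts for Multi-Site Multivariate Air Pollution Time Series Forecasting

> Original article: [https://machinelearningmastery.com/how-to-develop-baseline-forecasts-for-multi-site-multivariate-air-pollution-time-series-forecasting/](https://machinelearningmastery.com/how-to-develop-baseline-forecasts-for-multi-site-multivariate-air-pollution-time-series-forecasting/)

Real-world time series forecasting is challenging for many reasons, including problem features such as having multiple input variables, the requirement to predict multiple time steps, and the need to perform the same type of prediction for multiple physical sites.

The EMC Data Science Global Hackathon dataset, or the "_Air Quality Prediction_" dataset for short, describes weather conditions at multiple sites and requires a prediction of air quality measurements over the subsequent three days.

An important first step when working with a new time series forecasting dataset is to develop a baseline of model performance against which the skill of all other, more sophisticated strategies can be compared. Baseline forecasting strategies are simple and fast. They are referred to as "naive" strategies because they assume very little or nothing about the specific forecasting problem.

In this tutorial, you will discover how to develop naive forecasting methods for the multistep multivariate air pollution time series forecasting problem.

After completing this tutorial, you will know:

* How to develop a test harness for evaluating forecasting strategies for the air pollution dataset.

* How to develop global naive forecast strategies that use data from the entire training dataset.

* How to develop local naive forecast strategies that use data from the specific interval that is being forecast.

Let's get started.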

Photo by [DAVID HOLT](https://www.flickr.com/photos/zongo/38524476520/), some rights reserved.

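## Tutorial Overview

This tutorial is divided into six parts; they are:

* Problem Description

* Naive Methods

* Model Evaluation

* Global Naive Methods

* Chunk Naive Methods

* Summary of Results

## Problem Description

The Air Quality Prediction dataset describes weather conditions at multiple sites and requires a prediction of air quality measurements over the subsequent three days.

Specifically, weather observations such as temperature, pressure, wind speed, and wind direction are provided hourly for eight days for multiple sites. The objective is to predict air quality measurements for the next three days at multiple sites. The forecast lead times are not contiguous; instead, specific lead times must be forecast over the 72-hour forecast period. They are: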

```py

+1, +2, +3, +4, +5, +10, +17, +24, +48, +72

```

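Further, the dataset is divided into disjoint but contiguous chunks of data, with eight days of data followed by three days that require a forecast.

Not all observations are available at all sites or chunks, and not all output variables are available at all sites and chunks. There are large portions of missing data that must be addressed.

The dataset was used as the basis for a [short duration machine learning competition](https://www.kaggle.com/c/dsg-hackathon) (or hackathon) on the Kaggle website in 2012.

Submissions for the competition were evaluated against the true observations that were withheld from participants and scored using mean absolute error (MAE). Submissions required the value of -1,000,000 to be specified in those cases where a forecast was not possible due to missing data. In fact, a template of where to insert missing values was provided and had to be adopted for all submissions (what a pain).

The winner achieved a MAE of 0.21058 on the withheld test set ([private leaderboard](https://www.kaggle.com/c/dsg-hackathon/leaderboard)) using random forest on lagged observations. A writeup of this solution is available in the post:

* [Chucking everything into a Random Forest: Ben Hamner on Winning The Air Quality Prediction Hackathon](http://blog.kaggle.com/2012/05/01/chucking-everything-into-a-random-forest-ben-hamner-on-winning-the-air-quality-prediction-hackathon/), 2012.

In this tutorial, we will explore how to develop naive forecasts for the problem that can be used as a baseline to determine whether a model has skill on the problem or not.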

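## Naive Forecast Methods

A baseline in forecast performance provides a point of comparison.

It is a point of reference for all other modeling techniques on your problem. If a model achieves performance at or below the baseline, the technique should be fixed or abandoned.

The technique used to generate a forecast to calculate the baseline performance must be easy to implement and naive of problem-specific details. The principle is that if a sophisticated forecast method cannot outperform a model that uses little or no problem-specific information, then it does not have skill.

Problem-agnostic forecast methods can and should be used first, followed by naive methods that use a small amount of problem-specific information.

Two examples of problem-agnostic naive forecast methods that could be used include:

* Persist the last observed value for each series.

* Forecast the average of observed values for each series.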
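The two problem-agnostic strategies listed above can be sketched on a single toy series. This is a minimal illustration, not the tutorial's harness; the helper names `persist_last()` and `series_mean()` and the toy values are assumptions made for this example only.

```py
# toy illustration of two problem-agnostic naive forecasts
from numpy import array, nan, isnan, nanmean

# hypothetical helper: return the most recent non-NaN value in a series
def persist_last(series):
    for value in series[::-1]:
        if not isnan(value):
            return value
    return nan

# hypothetical helper: return the mean of a series, ignoring NaN values
def series_mean(series):
    return nanmean(series)

# a short history with missing observations (made-up values)
history = array([1.0, 2.0, nan, 4.0, nan])
n_steps = 3
# both strategies repeat one value for every step being forecast
persistence_forecast = [persist_last(history) for _ in range(n_steps)]
mean_forecast = [series_mean(history) for _ in range(n_steps)]
print(persistence_forecast)
print(mean_forecast)
```

Both strategies produce a flat forecast; they differ only in which single value from the history is repeated.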

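The data is divided into chunks, or intervals, of time. Each chunk of time has multiple variables at multiple sites to forecast. The persistence forecast method makes sense at this chunk level of organization of the data.

Other persistence methods could be explored; for example:

* Forecast the observations from the previous day for the next three days for each series.

* Forecast the observations from the previous three days for the next three days for each series.

These are desirable baseline methods to explore, but the large amount of missing data and the discontiguous structure of most data chunks make them challenging to implement without non-trivial data preparation.

Forecasting the average of observations for each series can be elaborated further; for example:

* Forecast the global (across-chunk) average value for each series.

* Forecast the local (within-chunk) average value for each series.

A three-day forecast is required for each series, and each chunk starts at a different time of day. As such, the forecast lead times of each chunk will fall on different hours of the day.

A further elaboration of forecasting the average value is to incorporate the hour of day that is being forecast; for example:

* Forecast the global (across-chunk) average value for the hour of day for each forecast lead time.

* Forecast the local (within-chunk) average value for the hour of day for each forecast lead time.

Many variables are measured at multiple sites; as such, it may be possible to use information across series, such as when calculating averages or averages per hour of day for forecast lead times. These are interesting, but may exceed the mandate of naive.

This is a good starting point, although there may be further elaborations of the naive methods that you may want to consider and explore as an exercise. Remember, the goal is to use very little problem-specific information in order to develop a forecast baseline.

In summary, we will investigate five different naive forecasting methods for this problem, the best of which will provide a lower bound on performance against which other models can be compared. They are:

1. Global Average Value per Series

2. Global Average Value for Forecast Lead Time per Series

3. Local Persisted Value per Series

4. Local Average Value per Series

5. Local Average Value for Forecast Lead Time per Series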

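## Model Evaluation

Before we can evaluate naive forecasting methods, we must develop a test harness.

This includes, at the least, how the data will be prepared and how forecasts will be evaluated.

### Load Dataset

The first step is to download the dataset and load it into memory.

The dataset can be downloaded for free from the Kaggle website. You may have to create an account and log in to download the dataset.

Download the entire dataset, e.g. "_Download All_", to your workstation and unzip the archive in your current working directory into a folder named '_AirQualityPrediction_'.

* [EMC Data Science Global Hackathon (Air Quality Prediction) Data](https://www.kaggle.com/c/dsg-hackathon/data)

Our focus will be the '_TrainingData.csv_' file that contains the training dataset, specifically data in chunks where each chunk is eight contiguous days of observations and target variables.

We can load the data file into memory using the Pandas [read_csv() function](https://pandas.pydata.org/pandas-docs/stable/generated/pandas.read_csv.html) and specify the header row on line 0.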

```py

from pandas import read_csv
# load dataset
dataset = read_csv('AirQualityPrediction/TrainingData.csv', header=0)

```

We can group data by the 'chunkID' variable (column index 1).

First, let's get a list of the unique chunk identifiers.

```py

chunk_ids = unique(values[:, 1])

```

We can then collect all rows for each chunk identifier and store them in a dictionary for easy access.

```py

chunks = dict()
# group rows by chunk id (the chunk id is in column index 1)
for chunk_id in chunk_ids:
    selection = values[:, 1] == chunk_id
    chunks[chunk_id] = values[selection, :]

```

Below defines a function named _to_chunks()_ that takes a NumPy array of the loaded data and returns a dictionary mapping each _chunk_id_ to the rows for that chunk.

```py

# split the dataset by 'chunkID', return a dict of id to rows
def to_chunks(values, chunk_ix=1):
    chunks = dict()
    # get the unique chunk ids
    chunk_ids = unique(values[:, chunk_ix])
    # group rows by chunk id
    for chunk_id in chunk_ids:
        selection = values[:, chunk_ix] == chunk_id
        chunks[chunk_id] = values[selection, :]
    return chunks

```

The complete example that loads the dataset and splits it into chunks is listed below.

```py

# load data and split into chunks
from numpy import unique
from pandas import read_csv

# split the dataset by 'chunkID', return a dict of id to rows
def to_chunks(values, chunk_ix=1):
    chunks = dict()
    # get the unique chunk ids
    chunk_ids = unique(values[:, chunk_ix])
    # group rows by chunk id
    for chunk_id in chunk_ids:
        selection = values[:, chunk_ix] == chunk_id
        chunks[chunk_id] = values[selection, :]
    return chunks

# load dataset
dataset = read_csv('AirQualityPrediction/TrainingData.csv', header=0)
# group data by chunks
values = dataset.values
chunks = to_chunks(values)
print('Total Chunks: %d' % len(chunks))

```

Running the example prints the number of chunks in the dataset.

```py

Total Chunks: 208

```
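As an alternative to the NumPy loop above, the same grouping can be sketched with the Pandas `groupby()` method. The toy frame below is made up for illustration; 'chunkID' and 'position_within_chunk' are column names mentioned in this tutorial, while 'rowID' is an assumed placeholder column.

```py
# toy sketch: group rows by chunk id using pandas groupby
from pandas import DataFrame

# small stand-in for the loaded training data (made-up values)
toy = DataFrame({
    'rowID': [1, 2, 3, 4, 5],
    'chunkID': [1, 1, 2, 2, 2],
    'position_within_chunk': [1, 2, 1, 2, 3],
})
# map each chunk id to a NumPy array of that chunk's rows
chunks = {chunk_id: group.values for chunk_id, group in toy.groupby('chunkID')}
print('Total Chunks: %d' % len(chunks))
```

The result has the same shape as the dictionary returned by _to_chunks()_: one entry per chunk id, each holding the rows for that chunk.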

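### Data Preparation

Now that we know how to load the data and split it into chunks, we can separate the chunks into train and test datasets.

Each chunk covers an interval of eight days of hourly observations, although the number of actual observations within each chunk may vary widely.

We can split each chunk into the first five days of observations for training and the last three days for testing.

Each observation has a column called '_position_within_chunk_' that varies from 1 to 192 (8 days * 24 hours). We can therefore take all rows with a value in this column that is less than or equal to 120 (5 * 24) as training data and any rows with a value greater than 120 as test data.

Further, any chunks that don't have any observations in the train or test split can be dropped as not viable.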
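The split rule described above can be illustrated on a tiny made-up chunk before looking at the full function. The three-column layout here (chunk id, position in chunk, one value) is an assumption for the sketch and is much narrower than the real data.

```py
# toy sketch: split one chunk into train/test rows by position within chunk
from numpy import array

# first 5 days of hourly observations are used for training
cut_point = 5 * 24
# made-up rows with columns [chunk id, position_within_chunk, value]
rows = array([
    [1, 119, 0.1],
    [1, 120, 0.2],
    [1, 121, 0.3],
    [1, 192, 0.4],
])
# boolean masks on the position column select each split
train_rows = rows[rows[:, 1] <= cut_point]
test_rows = rows[rows[:, 1] > cut_point]
print(train_rows.shape, test_rows.shape)
```

A chunk would be dropped if either `train_rows` or `test_rows` came back empty.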

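When working with the naive models, we are only interested in the target variables, and none of the input meteorological variables. Therefore, we can remove the input data and have the train and test data only comprise the 39 target variables for each chunk, as well as the position within chunk and hour of observation.

The _split_train_test()_ function below implements this behavior; given a dictionary of chunks, it will split each into a list of train and test chunk data.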

```py

# split each chunk into train/test sets
def split_train_test(chunks, row_in_chunk_ix=2):
    train, test = list(), list()
    # first 5 days of hourly observations for train
    cut_point = 5 * 24
    # enumerate chunks
    for k, rows in chunks.items():
        # split chunk rows by 'position_within_chunk'
        train_rows = rows[rows[:, row_in_chunk_ix] <= cut_point, :]
        test_rows = rows[rows[:, row_in_chunk_ix] > cut_point, :]
        if len(train_rows) == 0 or len(test_rows) == 0:
            print('>dropping chunk=%d: train=%s, test=%s' % (k, train_rows.shape, test_rows.shape))
            continue
        # store with chunk id, position in chunk, hour and all targets
        indices = [1, 2, 5] + [x for x in range(56, train_rows.shape[1])]
        train.append(train_rows[:, indices])
        test.append(test_rows[:, indices])
    return train, test

```

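We do not require the entire test dataset; instead, we only require the observations at specific lead times over the three-day period, specifically the lead times: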

```py

+1, +2, +3, +4, +5, +10, +17, +24, +48, +72

```

Where each lead time is relative to the end of the training period.

First, we can put these lead times into a function for easy reference:

```py

# return a list of relative forecast lead times
def get_lead_times():
    return [1, 2, 3, 4, 5, 10, 17, 24, 48, 72]

```

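Next, we can reduce the test dataset down to just the data at the required lead times.

We can do that by looking at the '_position_within_chunk_' column and using the lead time as an offset from the end of the training dataset, e.g. 120 + 1, 120 + 2, etc.

If we find a matching row in the test set, it is saved; otherwise, a row of NaN observations is generated.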
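The offset arithmetic and the NaN fallback can be sketched on a toy example. The two-column test rows (position, value) and their values are made up for illustration; the real rows carry the chunk id, position, hour, and 39 targets.

```py
# toy sketch: map lead times to positions within a chunk and look them up
from numpy import array, nan, isnan

lead_times = [1, 2, 3, 4, 5, 10, 17, 24, 48, 72]
cut_point = 5 * 24
# position within chunk for each lead time
offsets = [cut_point + tau for tau in lead_times]
print(offsets)

# made-up test rows with columns [position_within_chunk, value]
test_rows = array([[121, 0.5], [123, 0.7]])
selected = list()
for offset in offsets:
    match = test_rows[test_rows[:, 0] == offset]
    # keep the observed value if present, otherwise record a NaN
    selected.append(match[0, 1] if len(match) > 0 else nan)
print(selected)
```

Only positions 121 and 123 exist in the toy rows, so every other lead time is filled with NaN.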

The function _to_forecasts()_ below implements this and returns a NumPy array with one row for each forecast lead time for each chunk.

```py

# convert the rows in a test chunk to forecasts
def to_forecasts(test_chunks, row_in_chunk_ix=1):
    # get lead times
    lead_times = get_lead_times()
    # first 5 days of hourly observations for train
    cut_point = 5 * 24
    forecasts = list()
    # enumerate each chunk
    for rows in test_chunks:
        chunk_id = rows[0, 0]
        # enumerate each lead time
        for tau in lead_times:
            # determine the row in chunk we want for the lead time
            offset = cut_point + tau
            # retrieve data for the lead time using row number in chunk
            row_for_tau = rows[rows[:, row_in_chunk_ix] == offset, :]
            # check if we have data
            if len(row_for_tau) == 0:
                # create a mock row [chunk, position, hour] + [nan...]
                row = [chunk_id, offset, nan] + [nan for _ in range(39)]
                forecasts.append(row)
            else:
                # store the forecast row
                forecasts.append(row_for_tau[0])
    return array(forecasts)

```

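We can tie all of this together and split the dataset into train and test sets and save the results to new files.

The complete code example is listed below.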

```py

# split data into train and test sets
from numpy import unique
from numpy import nan
from numpy import array
from numpy import savetxt
from pandas import read_csv

# split the dataset by 'chunkID', return a dict of id to rows
def to_chunks(values, chunk_ix=1):
    chunks = dict()
    # get the unique chunk ids
    chunk_ids = unique(values[:, chunk_ix])
    # group rows by chunk id
    for chunk_id in chunk_ids:
        selection = values[:, chunk_ix] == chunk_id
        chunks[chunk_id] = values[selection, :]
    return chunks

# split each chunk into train/test sets
def split_train_test(chunks, row_in_chunk_ix=2):
    train, test = list(), list()
    # first 5 days of hourly observations for train
    cut_point = 5 * 24
    # enumerate chunks
    for k, rows in chunks.items():
        # split chunk rows by 'position_within_chunk'
        train_rows = rows[rows[:, row_in_chunk_ix] <= cut_point, :]
        test_rows = rows[rows[:, row_in_chunk_ix] > cut_point, :]
        if len(train_rows) == 0 or len(test_rows) == 0:
            print('>dropping chunk=%d: train=%s, test=%s' % (k, train_rows.shape, test_rows.shape))
            continue
        # store with chunk id, position in chunk, hour and all targets
        indices = [1, 2, 5] + [x for x in range(56, train_rows.shape[1])]
        train.append(train_rows[:, indices])
        test.append(test_rows[:, indices])
    return train, test

# return a list of relative forecast lead times
def get_lead_times():
    return [1, 2, 3, 4, 5, 10, 17, 24, 48, 72]

# convert the rows in a test chunk to forecasts
def to_forecasts(test_chunks, row_in_chunk_ix=1):
    # get lead times
    lead_times = get_lead_times()
    # first 5 days of hourly observations for train
    cut_point = 5 * 24
    forecasts = list()
    # enumerate each chunk
    for rows in test_chunks:
        chunk_id = rows[0, 0]
        # enumerate each lead time
        for tau in lead_times:
            # determine the row in chunk we want for the lead time
            offset = cut_point + tau
            # retrieve data for the lead time using row number in chunk
            row_for_tau = rows[rows[:, row_in_chunk_ix] == offset, :]
            # check if we have data
            if len(row_for_tau) == 0:
                # create a mock row [chunk, position, hour] + [nan...]
                row = [chunk_id, offset, nan] + [nan for _ in range(39)]
                forecasts.append(row)
            else:
                # store the forecast row
                forecasts.append(row_for_tau[0])
    return array(forecasts)

# load dataset
dataset = read_csv('AirQualityPrediction/TrainingData.csv', header=0)
# group data by chunks
values = dataset.values
chunks = to_chunks(values)
# split into train/test
train, test = split_train_test(chunks)
# flatten training chunks to rows
train_rows = array([row for rows in train for row in rows])
print('Train Rows: %s' % str(train_rows.shape))
# reduce test to forecast lead times only
test_rows = to_forecasts(test)
print('Test Rows: %s' % str(test_rows.shape))
# save datasets
savetxt('AirQualityPrediction/naive_train.csv', train_rows, delimiter=',')
savetxt('AirQualityPrediction/naive_test.csv', test_rows, delimiter=',')

```

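Running the example first reports that chunk 69 is removed from the dataset for having insufficient data.

We can then see that we have 42 columns in each of the train and test sets: one each for the chunk id, position within chunk, and hour of day, plus the 39 target variables.

We can also see the dramatically smaller version of the test dataset, with rows only at the forecast lead times.

The new train and test datasets are saved in the '_naive_train.csv_' and '_naive_test.csv_' files respectively.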

```py

>dropping chunk=69: train=(0, 95), test=(28, 95)

Train Rows: (23514, 42)

Test Rows: (2070, 42)

```

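### Forecast Evaluation

Once forecasts have been made, they need to be evaluated.

It is helpful to have a simpler format when evaluating forecasts. For example, we will use the three-dimensional structure of _[chunks][variables][time]_, where variable is the target variable number from 0 to 38 and time is the lead time index from 0 to 9.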
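As a quick picture of this layout, the sketch below allocates an empty forecast array of that shape. The chunk count of 207 is inferred from the 2,070 test rows reported above divided by 10 lead times; the single value written is made up.

```py
# toy sketch: the [chunk][variable][time] forecast layout as a NumPy array
from numpy import full, nan

# 207 chunks (2070 test rows / 10 lead times), 39 targets, 10 lead times
n_chunks, n_vars, n_leads = 207, 39, 10
forecasts = full((n_chunks, n_vars, n_leads), nan)
# forecast for chunk index 0, target variable 2, lead time index 5 (made-up value)
forecasts[0, 2, 5] = 0.42
print(forecasts.shape)
```

Indexing as `forecasts[i][j][k]` or `forecasts[i, j, k]` selects one forecast for one chunk, one target variable, and one lead time.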

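Models are expected to make predictions in this format.

We can also restructure the test dataset to have this format for comparison. The _prepare_test_forecasts()_ function below implements this.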

```py

# convert the test dataset in chunks to [chunk][variable][time] format
def prepare_test_forecasts(test_chunks):
    predictions = list()
    # enumerate chunks to forecast
    for rows in test_chunks:
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(3, rows.shape[1]):
            yhat = rows[:, j]
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

```

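We will evaluate a model using the mean absolute error, or MAE. This is the metric that was used in the competition and is a sensible choice given the non-Gaussian distribution of the target variables.

If a lead time contains no data in the test set (e.g. _NaN_), then no error will be calculated for that forecast. If the lead time does have data in the test set but no data in the forecast, then the full magnitude of the observation will be taken as error. Finally, if the test set has an observation and a forecast was made, then the absolute difference will be recorded as the error.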
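The three rules above can also be sketched in vectorized form on flat toy arrays. This is an illustration under assumed inputs, not the evaluation code used in this tutorial; the function name `masked_mae()` and the values are made up.

```py
# toy sketch: NaN-aware mean absolute error following the three rules above
from numpy import array, nan, isnan, where, absolute

# hypothetical helper: MAE that skips missing actuals and penalizes missing forecasts
def masked_mae(actual, predicted):
    # rule 1: only score positions where the actual value was observed
    observed = ~isnan(actual)
    # rule 2: a missing forecast costs the full magnitude of the observation
    filled = where(isnan(predicted), 0.0, predicted)
    # rule 3: otherwise the error is the absolute difference
    errors = absolute(actual[observed] - filled[observed])
    return errors.mean()

actual = array([1.0, nan, 2.0, -3.0])
predicted = array([1.5, 9.0, nan, -1.0])
print(masked_mae(actual, predicted))
```

Treating a missing forecast as zero gives an error of `abs(actual)`, which matches the second rule.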

The _calculate_error()_ function implements these rules and returns the error for a given forecast.

```py

# calculate the error between an actual and predicted value
def calculate_error(actual, predicted):
    # give the full actual value if predicted is nan
    if isnan(predicted):
        return abs(actual)
    # calculate abs difference
    return abs(actual - predicted)

```

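Errors are summed across all chunks and all lead times, then averaged.

The overall MAE will be calculated, but we will also calculate a MAE for each forecast lead time. This can help with model selection generally, as some models may perform differently at different lead times.

The _evaluate_forecasts()_ function below implements this, calculating the MAE and per-lead time MAE for the provided predictions and expected values in _[chunk][variable][time]_ format.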

```py

# evaluate a forecast in the format [chunk][variable][time]
def evaluate_forecasts(predictions, testset):
    lead_times = get_lead_times()
    total_mae, times_mae = 0.0, [0.0 for _ in range(len(lead_times))]
    total_c, times_c = 0, [0 for _ in range(len(lead_times))]
    # enumerate test chunks (use the testset argument, not a global)
    for i in range(len(testset)):
        # convert to forecasts
        actual = testset[i]
        predicted = predictions[i]
        # enumerate target variables
        for j in range(predicted.shape[0]):
            # enumerate lead times
            for k in range(len(lead_times)):
                # skip if actual is nan
                if isnan(actual[j, k]):
                    continue
                # calculate error
                error = calculate_error(actual[j, k], predicted[j, k])
                # update statistics
                total_mae += error
                times_mae[k] += error
                total_c += 1
                times_c[k] += 1
    # normalize summed absolute errors
    total_mae /= total_c
    times_mae = [times_mae[i]/times_c[i] for i in range(len(times_mae))]
    return total_mae, times_mae

```

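Once we have the evaluation of a model, we can present it.

The _summarize_error()_ function below first prints a one-line summary of a model's performance, then creates a plot of MAE per forecast lead time.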

```py

# summarize scores
def summarize_error(name, total_mae, times_mae):
    # print summary
    lead_times = get_lead_times()
    formatted = ['+%d %.3f' % (lead_times[i], times_mae[i]) for i in range(len(lead_times))]
    s_scores = ', '.join(formatted)
    print('%s: [%.3f MAE] %s' % (name, total_mae, s_scores))
    # plot summary
    pyplot.plot([str(x) for x in lead_times], times_mae, marker='.')
    pyplot.show()

```

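We are now ready to start exploring the performance of naive forecasting methods.

## Global Naive Methods

In this section, we will explore naive forecast methods that use all data in the training dataset, not constrained to the chunk for which we are making a prediction.

We will look at two approaches:

* Forecast Average Value per Series

* Forecast Average Value for Hour-of-Day per Series

### Forecast Average Value per Series

The first step is to implement a general function for making a forecast for each chunk.

The function takes the training dataset and the input columns (chunk id, position in chunk, and hour) for the test set and returns forecasts for all chunks in the expected 3D format of _[chunk][variable][time]_.

The function enumerates the chunks in the forecast, then enumerates the 39 target columns, calling another new function named _forecast_variable()_ in order to make a prediction for each lead time for a given target variable.

The complete function is listed below.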

```py

# forecast for each chunk, returns [chunk][variable][time]
def forecast_chunks(train_chunks, test_input):
    lead_times = get_lead_times()
    predictions = list()
    # enumerate chunks to forecast
    for i in range(len(train_chunks)):
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(39):
            yhat = forecast_variable(train_chunks, train_chunks[i], test_input[i], lead_times, j)
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

```

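We can now implement a version of _forecast_variable()_ that calculates the mean for a given series and forecasts that mean for each lead time.

First, we must collect all observations in the target column across all chunks, then calculate the average of the observations while ignoring the NaN values. The _nanmean()_ NumPy function calculates the mean of an array and ignores _NaN_ values.

The _forecast_variable()_ function below implements this behavior.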

```py

# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    # convert target number into column number
    col_ix = 3 + target_ix
    # collect obs from all chunks
    all_obs = list()
    for chunk in train_chunks:
        all_obs += [x for x in chunk[:, col_ix]]
    # return the average, ignoring nan
    value = nanmean(all_obs)
    return [value for _ in lead_times]

```

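We now have everything we need.

The complete example of forecasting the global mean for each series across all forecast lead times is listed below.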

```py

# forecast global mean
from numpy import loadtxt
from numpy import nan
from numpy import isnan
from numpy import count_nonzero
from numpy import unique
from numpy import array
from numpy import nanmean
from matplotlib import pyplot

# split the dataset by 'chunkID', return a list of chunks
def to_chunks(values, chunk_ix=0):
    chunks = list()
    # get the unique chunk ids
    chunk_ids = unique(values[:, chunk_ix])
    # group rows by chunk id
    for chunk_id in chunk_ids:
        selection = values[:, chunk_ix] == chunk_id
        chunks.append(values[selection, :])
    return chunks

# return a list of relative forecast lead times
def get_lead_times():
    return [1, 2, 3, 4, 5, 10, 17, 24, 48, 72]

# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    # convert target number into column number
    col_ix = 3 + target_ix
    # collect obs from all chunks
    all_obs = list()
    for chunk in train_chunks:
        all_obs += [x for x in chunk[:, col_ix]]
    # return the average, ignoring nan
    value = nanmean(all_obs)
    return [value for _ in lead_times]

# forecast for each chunk, returns [chunk][variable][time]
def forecast_chunks(train_chunks, test_input):
    lead_times = get_lead_times()
    predictions = list()
    # enumerate chunks to forecast
    for i in range(len(train_chunks)):
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(39):
            yhat = forecast_variable(train_chunks, train_chunks[i], test_input[i], lead_times, j)
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# convert the test dataset in chunks to [chunk][variable][time] format
def prepare_test_forecasts(test_chunks):
    predictions = list()
    # enumerate chunks to forecast
    for rows in test_chunks:
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(3, rows.shape[1]):
            yhat = rows[:, j]
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# calculate the error between an actual and predicted value
def calculate_error(actual, predicted):
    # give the full actual value if predicted is nan
    if isnan(predicted):
        return abs(actual)
    # calculate abs difference
    return abs(actual - predicted)

# evaluate a forecast in the format [chunk][variable][time]
def evaluate_forecasts(predictions, testset):
    lead_times = get_lead_times()
    total_mae, times_mae = 0.0, [0.0 for _ in range(len(lead_times))]
    total_c, times_c = 0, [0 for _ in range(len(lead_times))]
    # enumerate test chunks (use the testset argument, not a global)
    for i in range(len(testset)):
        # convert to forecasts
        actual = testset[i]
        predicted = predictions[i]
        # enumerate target variables
        for j in range(predicted.shape[0]):
            # enumerate lead times
            for k in range(len(lead_times)):
                # skip if actual is nan
                if isnan(actual[j, k]):
                    continue
                # calculate error
                error = calculate_error(actual[j, k], predicted[j, k])
                # update statistics
                total_mae += error
                times_mae[k] += error
                total_c += 1
                times_c[k] += 1
    # normalize summed absolute errors
    total_mae /= total_c
    times_mae = [times_mae[i]/times_c[i] for i in range(len(times_mae))]
    return total_mae, times_mae

# summarize scores
def summarize_error(name, total_mae, times_mae):
    # print summary
    lead_times = get_lead_times()
    formatted = ['+%d %.3f' % (lead_times[i], times_mae[i]) for i in range(len(lead_times))]
    s_scores = ', '.join(formatted)
    print('%s: [%.3f MAE] %s' % (name, total_mae, s_scores))
    # plot summary
    pyplot.plot([str(x) for x in lead_times], times_mae, marker='.')
    pyplot.show()

# load dataset
train = loadtxt('AirQualityPrediction/naive_train.csv', delimiter=',')
test = loadtxt('AirQualityPrediction/naive_test.csv', delimiter=',')
# group data by chunks
train_chunks = to_chunks(train)
test_chunks = to_chunks(test)
# forecast
test_input = [rows[:, :3] for rows in test_chunks]
forecast = forecast_chunks(train_chunks, test_input)
# evaluate forecast
actual = prepare_test_forecasts(test_chunks)
total_mae, times_mae = evaluate_forecasts(forecast, actual)
# summarize forecast
summarize_error('Global Mean', total_mae, times_mae)

```

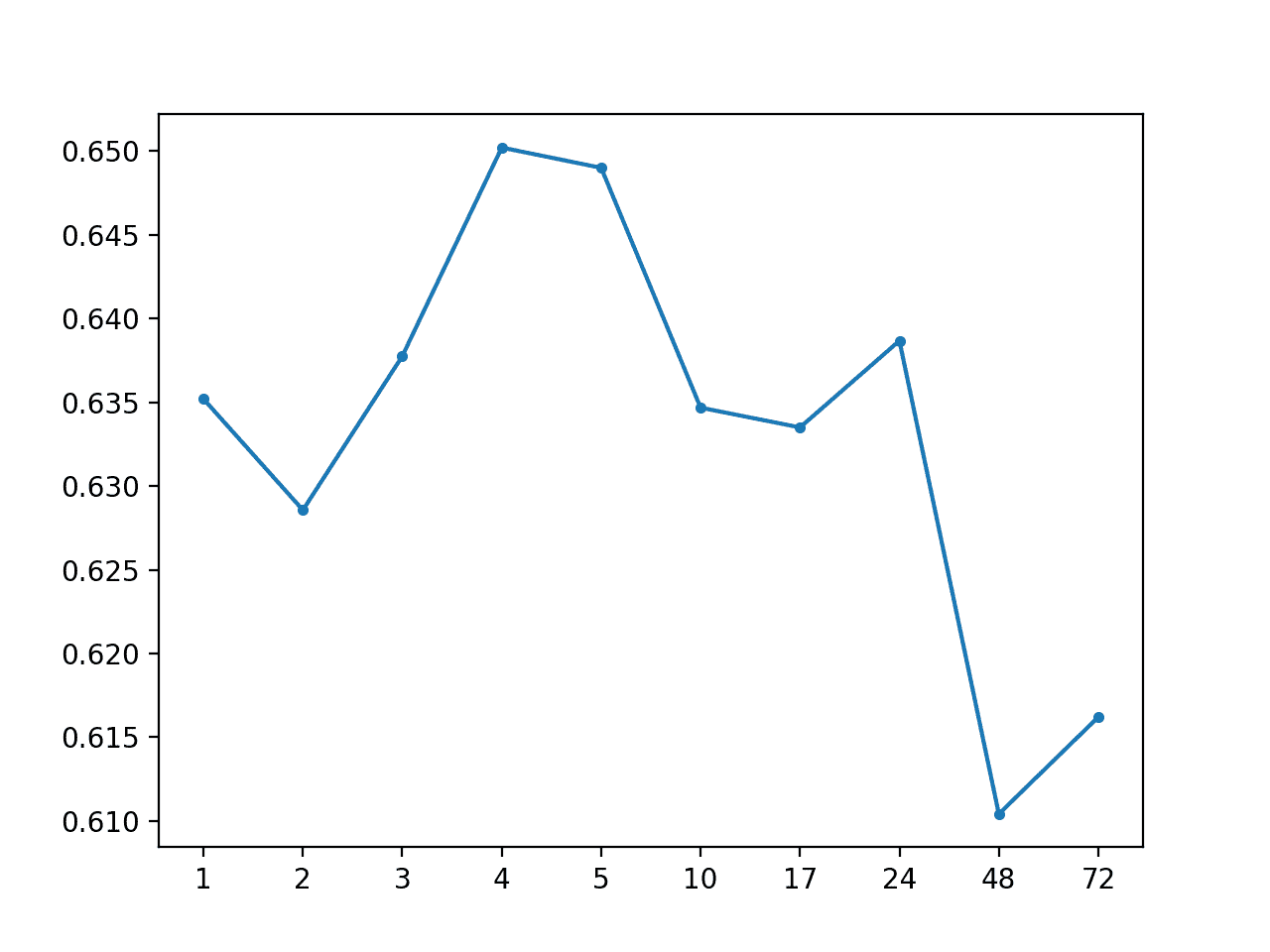

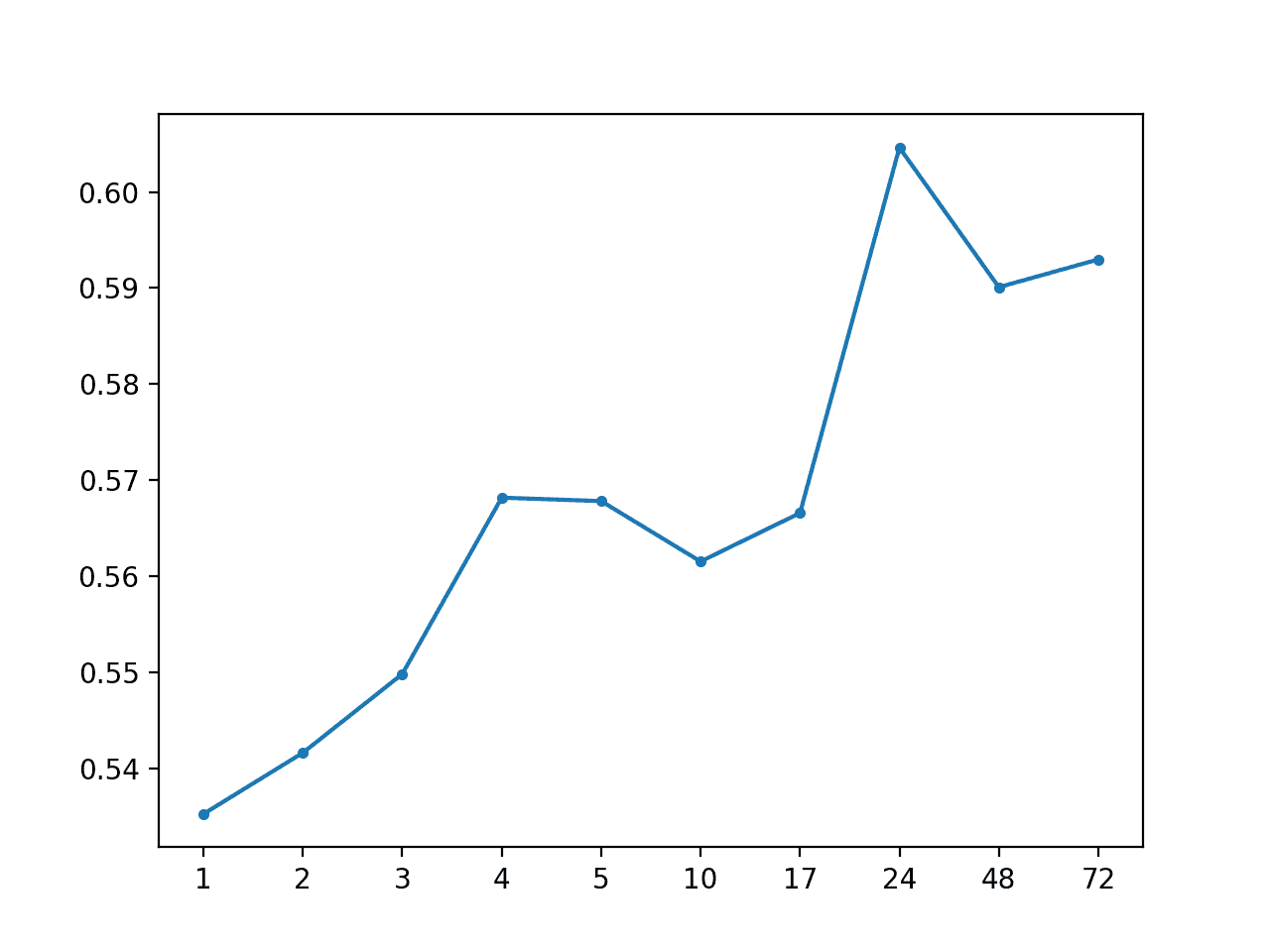

Running the example first prints the overall MAE of 0.634, followed by the MAE scores for each forecast lead time.

```py

# Global Mean: [0.634 MAE] +1 0.635, +2 0.629, +3 0.638, +4 0.650, +5 0.649, +10 0.635, +17 0.634, +24 0.641, +48 0.613, +72 0.618

```

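A line plot is created showing the MAE scores for each forecast lead time from +1 hour to +72 hours.

We cannot see any obvious relationship between forecast lead time and forecast error, as we might expect with a more skillful model.

MAE by Forecast Lead Time With Global Mean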

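We can update the example to forecast the global median instead of the mean.

The median may make more sense than the mean as a measure of central tendency for this data, given the non-Gaussian distribution the data appears to show.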
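One reason to expect the median to do well here is that the median of a sample minimizes the mean absolute error over that sample, while the mean is pulled toward outliers. The sketch below shows this on a made-up, right-skewed sample; the values are illustrative only and the error is measured in-sample.

```py
# toy sketch: mean vs. median as a constant forecast under MAE
from numpy import array, mean, median, absolute

# made-up right-skewed sample with one large outlier
sample = array([0.1, 0.2, 0.2, 0.3, 5.0])
# absolute error when every point is forecast with one constant
mae_mean = absolute(sample - mean(sample)).mean()
mae_median = absolute(sample - median(sample)).mean()
print('MAE using mean: %.3f' % mae_mean)
print('MAE using median: %.3f' % mae_median)
```

On held-out data the gap may be smaller or larger, so the comparison still has to be checked on the test set.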

NumPy provides the _nanmedian()_ function that we can use in place of _nanmean()_ in the _forecast_variable()_ function.

The complete updated example is listed below.

```py

# forecast global median
from numpy import loadtxt
from numpy import nan
from numpy import isnan
from numpy import count_nonzero
from numpy import unique
from numpy import array
from numpy import nanmedian
from matplotlib import pyplot

# split the dataset by 'chunkID', return a list of chunks
def to_chunks(values, chunk_ix=0):
    chunks = list()
    # get the unique chunk ids
    chunk_ids = unique(values[:, chunk_ix])
    # group rows by chunk id
    for chunk_id in chunk_ids:
        selection = values[:, chunk_ix] == chunk_id
        chunks.append(values[selection, :])
    return chunks

# return a list of relative forecast lead times
def get_lead_times():
    return [1, 2, 3, 4, 5, 10, 17, 24, 48, 72]

# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    # convert target number into column number
    col_ix = 3 + target_ix
    # collect obs from all chunks
    all_obs = list()
    for chunk in train_chunks:
        all_obs += [x for x in chunk[:, col_ix]]
    # return the median, ignoring nan
    value = nanmedian(all_obs)
    return [value for _ in lead_times]

# forecast for each chunk, returns [chunk][variable][time]
def forecast_chunks(train_chunks, test_input):
    lead_times = get_lead_times()
    predictions = list()
    # enumerate chunks to forecast
    for i in range(len(train_chunks)):
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(39):
            yhat = forecast_variable(train_chunks, train_chunks[i], test_input[i], lead_times, j)
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# convert the test dataset in chunks to [chunk][variable][time] format
def prepare_test_forecasts(test_chunks):
    predictions = list()
    # enumerate chunks to forecast
    for rows in test_chunks:
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(3, rows.shape[1]):
            yhat = rows[:, j]
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# calculate the error between an actual and predicted value
def calculate_error(actual, predicted):
    # give the full actual value if predicted is nan
    if isnan(predicted):
        return abs(actual)
    # calculate abs difference
    return abs(actual - predicted)

# evaluate a forecast in the format [chunk][variable][time]
def evaluate_forecasts(predictions, testset):
    lead_times = get_lead_times()
    total_mae, times_mae = 0.0, [0.0 for _ in range(len(lead_times))]
    total_c, times_c = 0, [0 for _ in range(len(lead_times))]
    # enumerate test chunks (use the testset argument, not a global)
    for i in range(len(testset)):
        # convert to forecasts
        actual = testset[i]
        predicted = predictions[i]
        # enumerate target variables
        for j in range(predicted.shape[0]):
            # enumerate lead times
            for k in range(len(lead_times)):
                # skip if actual is nan
                if isnan(actual[j, k]):
                    continue
                # calculate error
                error = calculate_error(actual[j, k], predicted[j, k])
                # update statistics
                total_mae += error
                times_mae[k] += error
                total_c += 1
                times_c[k] += 1
    # normalize summed absolute errors
    total_mae /= total_c
    times_mae = [times_mae[i]/times_c[i] for i in range(len(times_mae))]
    return total_mae, times_mae

# summarize scores
def summarize_error(name, total_mae, times_mae):
    # print summary
    lead_times = get_lead_times()
    formatted = ['+%d %.3f' % (lead_times[i], times_mae[i]) for i in range(len(lead_times))]
    s_scores = ', '.join(formatted)
    print('%s: [%.3f MAE] %s' % (name, total_mae, s_scores))
    # plot summary
    pyplot.plot([str(x) for x in lead_times], times_mae, marker='.')
    pyplot.show()

# load dataset
train = loadtxt('AirQualityPrediction/naive_train.csv', delimiter=',')
test = loadtxt('AirQualityPrediction/naive_test.csv', delimiter=',')
# group data by chunks
train_chunks = to_chunks(train)
test_chunks = to_chunks(test)
# forecast
test_input = [rows[:, :3] for rows in test_chunks]
forecast = forecast_chunks(train_chunks, test_input)
# evaluate forecast
actual = prepare_test_forecasts(test_chunks)
total_mae, times_mae = evaluate_forecasts(forecast, actual)
# summarize forecast
summarize_error('Global Median', total_mae, times_mae)

```

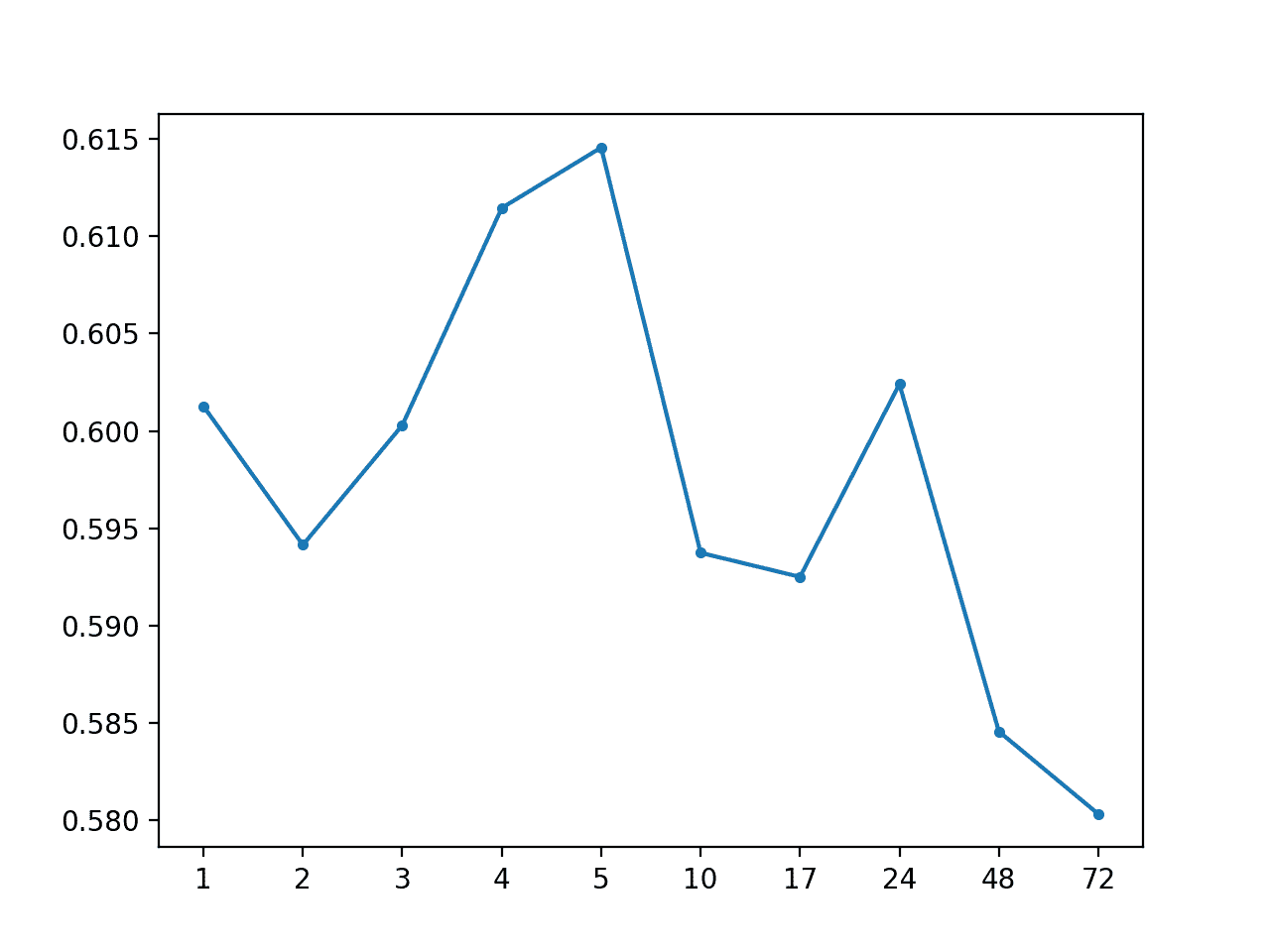

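Running the example shows a drop in MAE to about 0.598, suggesting that using the median as the central tendency may indeed be a better baseline strategy.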

```py

Global Median: [0.598 MAE] +1 0.601, +2 0.594, +3 0.600, +4 0.611, +5 0.615, +10 0.594, +17 0.592, +24 0.602, +48 0.585, +72 0.580

```

A line plot of MAE per lead time is also created.

MAE by Forecast Lead Time With Global Median

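### Forecast Average Value for Hour-of-Day per Series

We can update the naive model for calculating a central tendency by series to only include rows that have the same hour of day as the forecast lead time.

For example, if the +1 lead time has the hour 6 (e.g. 0600 or 6AM), then we can find all other rows in the training dataset across all chunks for that hour and calculate the median value for a given target variable from those rows.

We record the hour of day in the test dataset and make it available to the model when making forecasts. One wrinkle is that in some cases the test dataset did not have a record for a given lead time and one had to be invented with _NaN_ values, including a _NaN_ value for the hour. In these cases, no forecast is required, so we will skip them and forecast a _NaN_ value.

The _forecast_variable()_ function below implements this behavior, returning forecasts for each lead time for a given variable.

It is not very efficient; it would likely be more efficient to first pre-calculate the median value for each hour for each variable and then forecast using a lookup table. Efficiency is not a concern at this point as we are looking for a baseline of model performance.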
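The lookup-table idea mentioned above could look something like the sketch below. It is not part of the tutorial's code: the function name `build_hourly_medians()` is hypothetical, the toy chunks are made up, and the column layout (hour in column 2, targets from column 3) follows the prepared training data described earlier.

```py
# toy sketch: pre-compute per-hour medians for all target columns at once
from numpy import array, isnan, nanmedian, unique, vstack

# hypothetical helper: map each hour of day to a vector of per-target medians
def build_hourly_medians(train_chunks, hour_ix=2, first_target_ix=3):
    # stack all chunks into one 2D array of rows
    rows = vstack(train_chunks)
    hours = rows[:, hour_ix]
    table = dict()
    for hour in unique(hours[~isnan(hours)]):
        # median of each target column over all rows with this hour
        table[int(hour)] = nanmedian(rows[hours == hour, first_target_ix:], axis=0)
    return table

# made-up chunks with columns [chunk id, position, hour, target 0, target 1]
train_chunks = [
    array([[1, 1, 6, 1.0, 10.0], [1, 2, 7, 2.0, 4.0]]),
    array([[2, 1, 6, 3.0, 30.0], [2, 2, 6, 5.0, float('nan')]]),
]
table = build_hourly_medians(train_chunks)
print(table[6], table[7])
```

A forecast for a given hour and target would then be a dictionary lookup followed by an index, e.g. `table[int(hour)][target_ix]`, instead of a scan over all training rows.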

```py

# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    forecast = list()
    # convert target number into column number
    col_ix = 3 + target_ix
    # enumerate lead times
    for i in range(len(lead_times)):
        # get the hour for this forecast lead time
        hour = chunk_test[i, 2]
        # check for no test data
        if isnan(hour):
            forecast.append(nan)
            continue
        # get all rows in training for this hour
        all_rows = list()
        for rows in train_chunks:
            all_rows.extend(rows[rows[:, 2] == hour])
        # calculate the central tendency for target
        all_rows = array(all_rows)
        value = nanmedian(all_rows[:, col_ix])
        forecast.append(value)
    return forecast

```

The complete example of forecasting the global median value by hour of day is listed below.

```py

# forecast global median by hour of day
from numpy import loadtxt
from numpy import nan
from numpy import isnan
from numpy import count_nonzero
from numpy import unique
from numpy import array
from numpy import nanmedian
from matplotlib import pyplot

# split the dataset by 'chunkID', return a list of chunks
def to_chunks(values, chunk_ix=0):
    chunks = list()
    # get the unique chunk ids
    chunk_ids = unique(values[:, chunk_ix])
    # group rows by chunk id
    for chunk_id in chunk_ids:
        selection = values[:, chunk_ix] == chunk_id
        chunks.append(values[selection, :])
    return chunks

# return a list of relative forecast lead times
def get_lead_times():
    return [1, 2, 3, 4, 5, 10, 17, 24, 48, 72]

# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    forecast = list()
    # convert target number into column number
    col_ix = 3 + target_ix
    # enumerate lead times
    for i in range(len(lead_times)):
        # get the hour for this forecast lead time
        hour = chunk_test[i, 2]
        # check for no test data
        if isnan(hour):
            forecast.append(nan)
            continue
        # get all rows in training for this hour
        all_rows = list()
        for rows in train_chunks:
            all_rows.extend(rows[rows[:, 2] == hour])
        # calculate the central tendency for target
        all_rows = array(all_rows)
        value = nanmedian(all_rows[:, col_ix])
        forecast.append(value)
    return forecast

# forecast for each chunk, returns [chunk][variable][time]
def forecast_chunks(train_chunks, test_input):
    lead_times = get_lead_times()
    predictions = list()
    # enumerate chunks to forecast
    for i in range(len(train_chunks)):
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(39):
            yhat = forecast_variable(train_chunks, train_chunks[i], test_input[i], lead_times, j)
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# convert the test dataset in chunks to [chunk][variable][time] format
def prepare_test_forecasts(test_chunks):
    predictions = list()
    # enumerate chunks to forecast
    for rows in test_chunks:
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(3, rows.shape[1]):
            yhat = rows[:, j]
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# calculate the error between an actual and predicted value
def calculate_error(actual, predicted):
    # give the full actual value if predicted is nan
    if isnan(predicted):
        return abs(actual)
    # calculate abs difference
    return abs(actual - predicted)

# evaluate a forecast in the format [chunk][variable][time]
def evaluate_forecasts(predictions, testset):
    lead_times = get_lead_times()
    total_mae, times_mae = 0.0, [0.0 for _ in range(len(lead_times))]
    total_c, times_c = 0, [0 for _ in range(len(lead_times))]
    # enumerate test chunks (use the testset argument, not a global)
    for i in range(len(testset)):
        # convert to forecasts
        actual = testset[i]
        predicted = predictions[i]
        # enumerate target variables
        for j in range(predicted.shape[0]):
            # enumerate lead times
            for k in range(len(lead_times)):
                # skip if actual is nan
                if isnan(actual[j, k]):
                    continue
                # calculate error
                error = calculate_error(actual[j, k], predicted[j, k])
                # update statistics
                total_mae += error
                times_mae[k] += error
                total_c += 1
                times_c[k] += 1
    # normalize summed absolute errors
    total_mae /= total_c
    times_mae = [times_mae[i]/times_c[i] for i in range(len(times_mae))]
    return total_mae, times_mae

# summarize scores
def summarize_error(name, total_mae, times_mae):
    # print summary
    lead_times = get_lead_times()
    formatted = ['+%d %.3f' % (lead_times[i], times_mae[i]) for i in range(len(lead_times))]
    s_scores = ', '.join(formatted)
    print('%s: [%.3f MAE] %s' % (name, total_mae, s_scores))
    # plot summary
    pyplot.plot([str(x) for x in lead_times], times_mae, marker='.')
    pyplot.show()

# load dataset
train = loadtxt('AirQualityPrediction/naive_train.csv', delimiter=',')
test = loadtxt('AirQualityPrediction/naive_test.csv', delimiter=',')
# group data by chunks
train_chunks = to_chunks(train)
test_chunks = to_chunks(test)
# forecast
test_input = [rows[:, :3] for rows in test_chunks]
forecast = forecast_chunks(train_chunks, test_input)
# evaluate forecast
actual = prepare_test_forecasts(test_chunks)
total_mae, times_mae = evaluate_forecasts(forecast, actual)
# summarize forecast
summarize_error('Global Median by Hour', total_mae, times_mae)

```

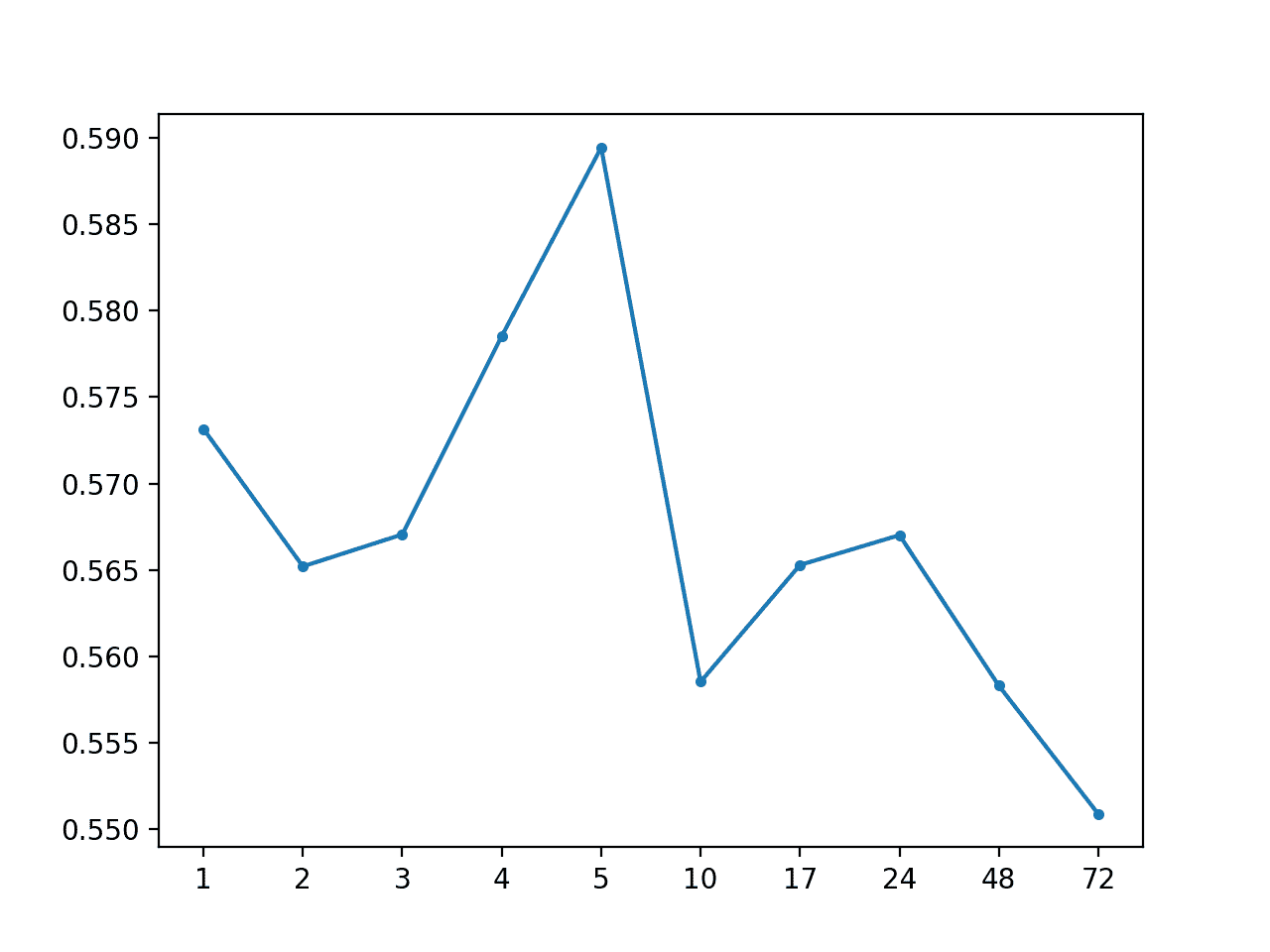

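Running the example summarizes the performance of the model with a MAE of 0.567, which is an improvement over the global median for each series.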

```py

Global Median by Hour: [0.567 MAE] +1 0.573, +2 0.565, +3 0.567, +4 0.579, +5 0.589, +10 0.559, +17 0.565, +24 0.567, +48 0.558, +72 0.551

```

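A line plot of the MAE by forecast lead time is also created, showing that +72 has the lowest overall forecast error. This is interesting, and may suggest that hour-based information could be useful in more sophisticated models.

MAE by Forecast Lead Time With Global Median by Hour of Day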

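## Chunk Naive Methods

It is possible that using information specific to the chunk may have more predictive power than using global information from the entire training dataset.

We can explore this with three local or chunk-specific naive forecasting methods; they are:

* Forecast Last Observation per Series

* Forecast Average Value per Series

* Forecast Average Value for Hour-of-Day per Series

The last two are the chunk-specific versions of the global strategies that were evaluated in the previous section.

### Forecast Last Observation per Series

Forecasting the last non-NaN observation for a chunk is perhaps the simplest model, classically called the persistence model or the naive model.

The _forecast_variable()_ function below implements this forecast strategy.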

```py

# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    # convert target number into column number
    col_ix = 3 + target_ix
    # extract the history for the series
    history = chunk_train[:, col_ix]
    # persist a nan if we do not find any valid data
    persisted = nan
    # enumerate history in reverse order looking for the first non-nan
    for value in reversed(history):
        if not isnan(value):
            persisted = value
            break
    # persist the same value for all lead times
    forecast = [persisted for _ in range(len(lead_times))]
    return forecast

```

The complete example for evaluating the persistence forecast strategy on the test set is listed below.

```py
# persist last observation
from numpy import loadtxt
from numpy import nan
from numpy import isnan
from numpy import unique
from numpy import array
from matplotlib import pyplot

# split the dataset by 'chunkID', return a list of chunks
def to_chunks(values, chunk_ix=0):
    chunks = list()
    # get the unique chunk ids
    chunk_ids = unique(values[:, chunk_ix])
    # group rows by chunk id
    for chunk_id in chunk_ids:
        selection = values[:, chunk_ix] == chunk_id
        chunks.append(values[selection, :])
    return chunks

# return a list of relative forecast lead times
def get_lead_times():
    return [1, 2, 3, 4, 5, 10, 17, 24, 48, 72]

# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    # convert target number into column number
    col_ix = 3 + target_ix
    # extract the history for the series
    history = chunk_train[:, col_ix]
    # persist a nan if we do not find any valid data
    persisted = nan
    # enumerate history in reverse order looking for the first non-nan
    for value in reversed(history):
        if not isnan(value):
            persisted = value
            break
    # persist the same value for all lead times
    forecast = [persisted for _ in range(len(lead_times))]
    return forecast

# forecast for each chunk, returns [chunk][variable][time]
def forecast_chunks(train_chunks, test_input):
    lead_times = get_lead_times()
    predictions = list()
    # enumerate chunks to forecast
    for i in range(len(train_chunks)):
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(39):
            yhat = forecast_variable(train_chunks, train_chunks[i], test_input[i], lead_times, j)
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# convert the test dataset in chunks to [chunk][variable][time] format
def prepare_test_forecasts(test_chunks):
    predictions = list()
    # enumerate chunks to forecast
    for rows in test_chunks:
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(3, rows.shape[1]):
            yhat = rows[:, j]
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# calculate the error between an actual and predicted value
def calculate_error(actual, predicted):
    # give the full actual value if predicted is nan
    if isnan(predicted):
        return abs(actual)
    # calculate abs difference
    return abs(actual - predicted)

# evaluate a forecast in the format [chunk][variable][time]
def evaluate_forecasts(predictions, testset):
    lead_times = get_lead_times()
    total_mae, times_mae = 0.0, [0.0 for _ in range(len(lead_times))]
    total_c, times_c = 0, [0 for _ in range(len(lead_times))]
    # enumerate test chunks (use the argument, not the global test_chunks)
    for i in range(len(testset)):
        # convert to forecasts
        actual = testset[i]
        predicted = predictions[i]
        # enumerate target variables
        for j in range(predicted.shape[0]):
            # enumerate lead times
            for k in range(len(lead_times)):
                # skip if actual is nan
                if isnan(actual[j, k]):
                    continue
                # calculate error
                error = calculate_error(actual[j, k], predicted[j, k])
                # update statistics
                total_mae += error
                times_mae[k] += error
                total_c += 1
                times_c[k] += 1
    # normalize summed absolute errors
    total_mae /= total_c
    times_mae = [times_mae[i]/times_c[i] for i in range(len(times_mae))]
    return total_mae, times_mae

# summarize scores
def summarize_error(name, total_mae, times_mae):
    # print summary
    lead_times = get_lead_times()
    formatted = ['+%d %.3f' % (lead_times[i], times_mae[i]) for i in range(len(lead_times))]
    s_scores = ', '.join(formatted)
    print('%s: [%.3f MAE] %s' % (name, total_mae, s_scores))
    # plot summary
    pyplot.plot([str(x) for x in lead_times], times_mae, marker='.')
    pyplot.show()

# load dataset
train = loadtxt('AirQualityPrediction/naive_train.csv', delimiter=',')
test = loadtxt('AirQualityPrediction/naive_test.csv', delimiter=',')
# group data by chunks
train_chunks = to_chunks(train)
test_chunks = to_chunks(test)
# forecast
test_input = [rows[:, :3] for rows in test_chunks]
forecast = forecast_chunks(train_chunks, test_input)
# evaluate forecast
actual = prepare_test_forecasts(test_chunks)
total_mae, times_mae = evaluate_forecasts(forecast, actual)
# summarize forecast
summarize_error('Persistence', total_mae, times_mae)
```

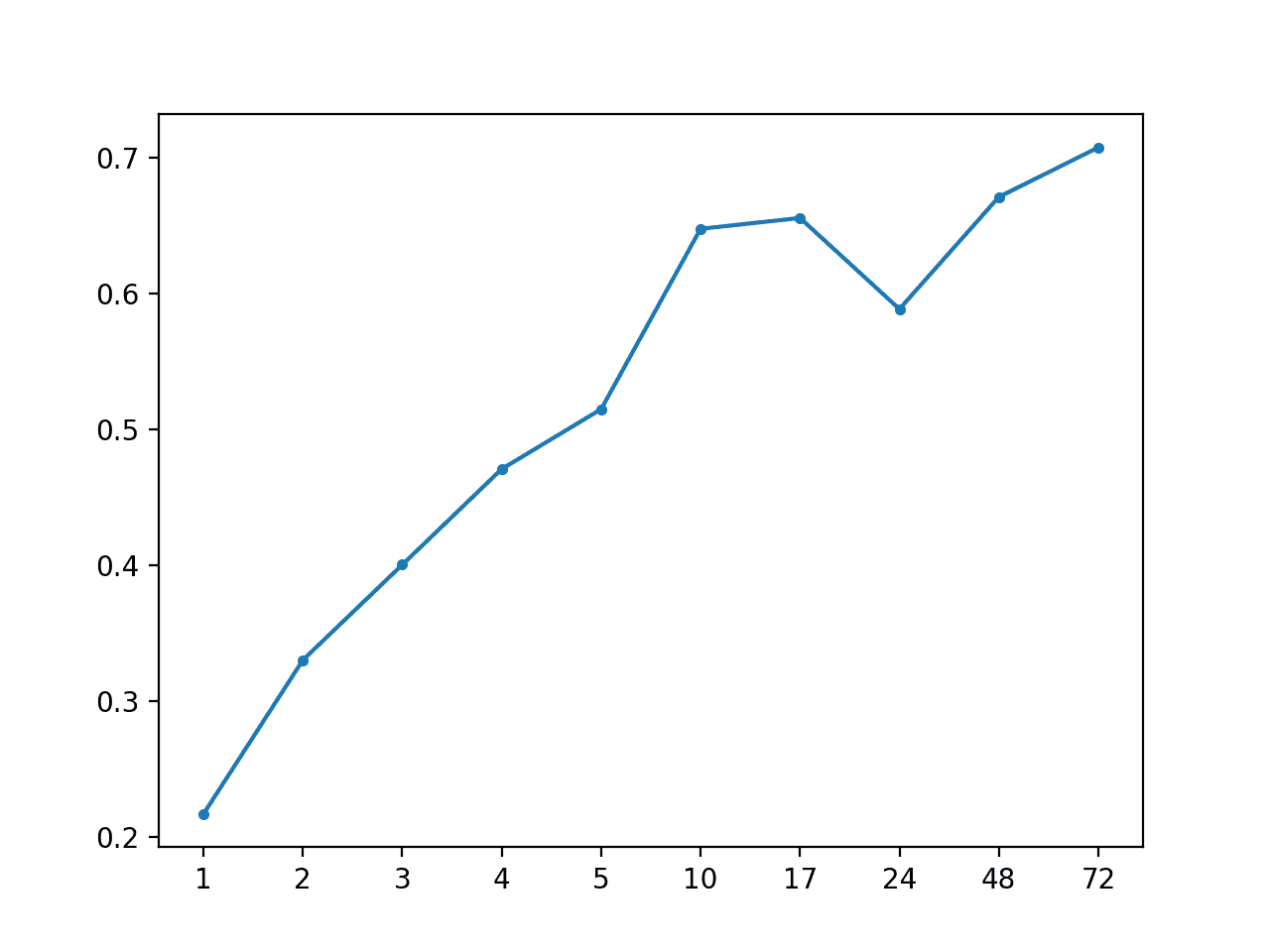

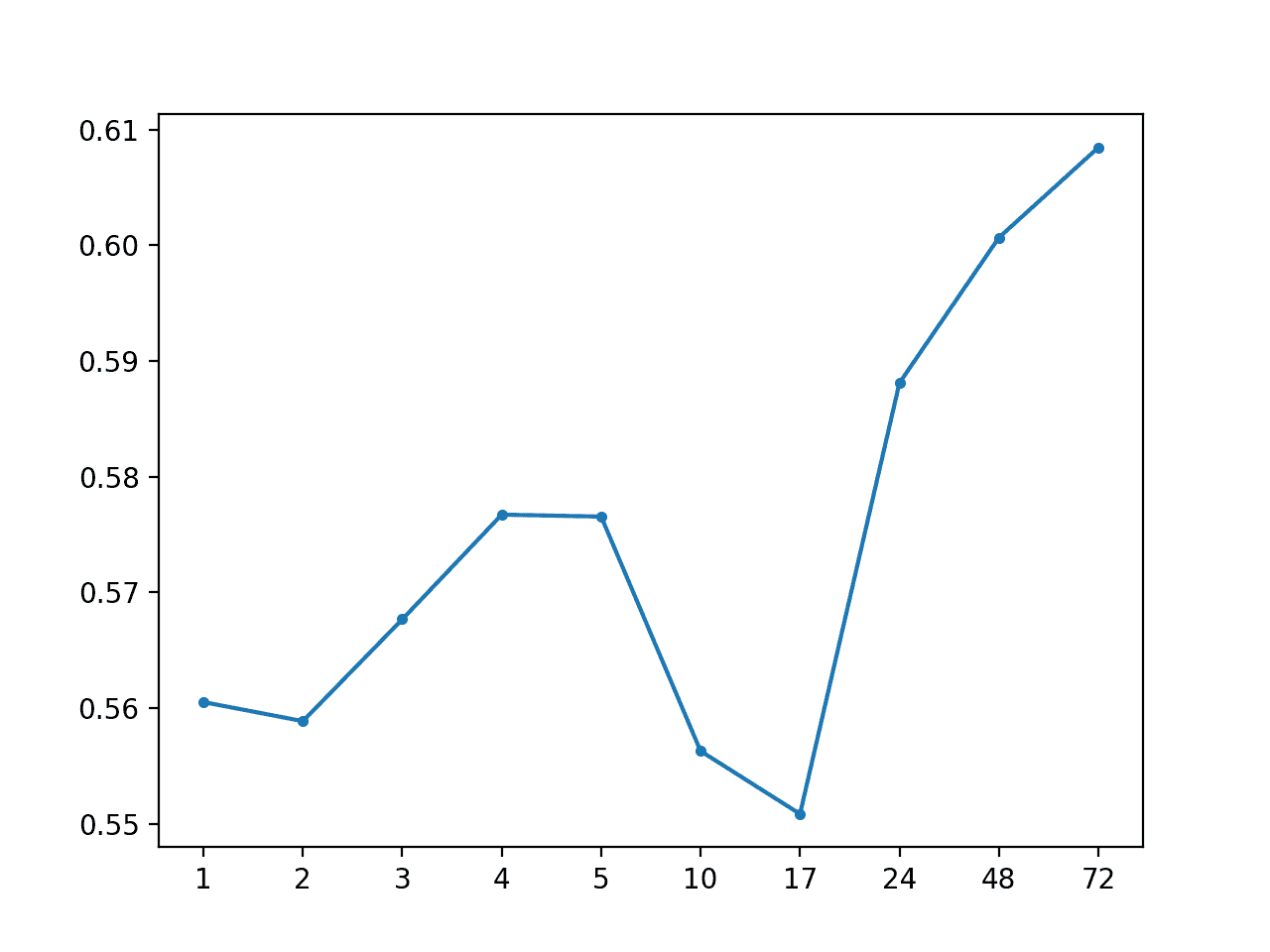

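Running the example prints the overall MAE and the MAE for each forecast lead time.

We can see that the persistence forecast appears to outperform all of the global strategies evaluated in the previous section.

This lends some support to the reasonable assumption that chunk-specific information is important when modeling this problem.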

```py

Persistence: [0.520 MAE] +1 0.217, +2 0.330, +3 0.400, +4 0.471, +5 0.515, +10 0.648, +17 0.656, +24 0.589, +48 0.671, +72 0.708

```

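A line plot of MAE per forecast lead time is created.

Importantly, the plot shows the expected behavior of error broadly increasing with the forecast lead time. That is, the further into the future we predict, the more challenging the problem becomes and, in turn, the more error we expect to make.

MAE by Forecast Lead Time via Persistence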
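The NaN handling in the persistence strategy can be seen in isolation on a toy series. The sketch below is not part of the tutorial's test harness: the helper name `last_valid()` and the sample values are made up for illustration, and it uses boolean masking instead of the reverse loop shown above.

```python
# toy illustration of persistence with missing data (values are made up)
from numpy import array, isnan, nan

# hypothetical helper: return the last observed (non-NaN) value in a series
def last_valid(history):
    # keep only the observed values, preserving their order
    valid = history[~isnan(history)]
    # fall back to NaN when the series has no observations at all
    return valid[-1] if valid.size > 0 else nan

# a series that ends in missing values, and one with no data at all
series = array([0.2, 0.4, nan, 0.7, nan, nan])
empty = array([nan, nan, nan])
print(last_valid(series))
print(last_valid(empty))
```

The first call skips the trailing gaps and returns the most recent real observation; the second returns NaN, which the evaluation code above penalizes with the full absolute value of the actual observation.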

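### Forecast Average for Each Series

Instead of persisting the last observation for the series, we can persist the average value for the series using only the data in the chunk.

Specifically, we can calculate the median of the series, which we found in the previous section seems to lead to better performance than the mean.

The _forecast_variable()_ function below implements this local strategy.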

```py
# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    # convert target number into column number
    col_ix = 3 + target_ix
    # extract the history for the series
    history = chunk_train[:, col_ix]
    # calculate the central tendency
    value = nanmedian(history)
    # persist the same value for all lead times
    forecast = [value for _ in range(len(lead_times))]
    return forecast
```

The complete example is listed below.

```py
# forecast local median
from numpy import loadtxt
from numpy import isnan
from numpy import unique
from numpy import array
from numpy import nanmedian
from matplotlib import pyplot

# split the dataset by 'chunkID', return a list of chunks
def to_chunks(values, chunk_ix=0):
    chunks = list()
    # get the unique chunk ids
    chunk_ids = unique(values[:, chunk_ix])
    # group rows by chunk id
    for chunk_id in chunk_ids:
        selection = values[:, chunk_ix] == chunk_id
        chunks.append(values[selection, :])
    return chunks

# return a list of relative forecast lead times
def get_lead_times():
    return [1, 2, 3, 4, 5, 10, 17, 24, 48, 72]

# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    # convert target number into column number
    col_ix = 3 + target_ix
    # extract the history for the series
    history = chunk_train[:, col_ix]
    # calculate the central tendency
    value = nanmedian(history)
    # persist the same value for all lead times
    forecast = [value for _ in range(len(lead_times))]
    return forecast

# forecast for each chunk, returns [chunk][variable][time]
def forecast_chunks(train_chunks, test_input):
    lead_times = get_lead_times()
    predictions = list()
    # enumerate chunks to forecast
    for i in range(len(train_chunks)):
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(39):
            yhat = forecast_variable(train_chunks, train_chunks[i], test_input[i], lead_times, j)
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# convert the test dataset in chunks to [chunk][variable][time] format
def prepare_test_forecasts(test_chunks):
    predictions = list()
    # enumerate chunks to forecast
    for rows in test_chunks:
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(3, rows.shape[1]):
            yhat = rows[:, j]
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# calculate the error between an actual and predicted value
def calculate_error(actual, predicted):
    # give the full actual value if predicted is nan
    if isnan(predicted):
        return abs(actual)
    # calculate abs difference
    return abs(actual - predicted)

# evaluate a forecast in the format [chunk][variable][time]
def evaluate_forecasts(predictions, testset):
    lead_times = get_lead_times()
    total_mae, times_mae = 0.0, [0.0 for _ in range(len(lead_times))]
    total_c, times_c = 0, [0 for _ in range(len(lead_times))]
    # enumerate test chunks (use the argument, not the global test_chunks)
    for i in range(len(testset)):
        # convert to forecasts
        actual = testset[i]
        predicted = predictions[i]
        # enumerate target variables
        for j in range(predicted.shape[0]):
            # enumerate lead times
            for k in range(len(lead_times)):
                # skip if actual is nan
                if isnan(actual[j, k]):
                    continue
                # calculate error
                error = calculate_error(actual[j, k], predicted[j, k])
                # update statistics
                total_mae += error
                times_mae[k] += error
                total_c += 1
                times_c[k] += 1
    # normalize summed absolute errors
    total_mae /= total_c
    times_mae = [times_mae[i]/times_c[i] for i in range(len(times_mae))]
    return total_mae, times_mae

# summarize scores
def summarize_error(name, total_mae, times_mae):
    # print summary
    lead_times = get_lead_times()
    formatted = ['+%d %.3f' % (lead_times[i], times_mae[i]) for i in range(len(lead_times))]
    s_scores = ', '.join(formatted)
    print('%s: [%.3f MAE] %s' % (name, total_mae, s_scores))
    # plot summary
    pyplot.plot([str(x) for x in lead_times], times_mae, marker='.')
    pyplot.show()

# load dataset
train = loadtxt('AirQualityPrediction/naive_train.csv', delimiter=',')
test = loadtxt('AirQualityPrediction/naive_test.csv', delimiter=',')
# group data by chunks
train_chunks = to_chunks(train)
test_chunks = to_chunks(test)
# forecast
test_input = [rows[:, :3] for rows in test_chunks]
forecast = forecast_chunks(train_chunks, test_input)
# evaluate forecast
actual = prepare_test_forecasts(test_chunks)
total_mae, times_mae = evaluate_forecasts(forecast, actual)
# summarize forecast
summarize_error('Local Median', total_mae, times_mae)
```

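Running the example summarizes the performance of this naive strategy, showing an MAE of about 0.568, which is worse than the persistence strategy above (0.520).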

```py

Local Median: [0.568 MAE] +1 0.535, +2 0.542, +3 0.550, +4 0.568, +5 0.568, +10 0.562, +17 0.567, +24 0.605, +48 0.590, +72 0.593

```

A line plot of MAE per forecast lead time is also created, showing the familiar curve of error broadly increasing with lead time.

MAE by Forecast Lead Time via Local Median

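### Forecast Average by Hour-of-Day for Each Series

Finally, we can refine the local average strategy by using the average of each series for the specific hour of day at each forecast lead time.

This approach was found to be effective for the global strategy. It may also be effective using only the data from the chunk, although at the risk of relying on a much smaller data sample.

The _forecast_variable()_ function below implements this strategy, first finding all rows with the hour of the forecast lead time, then calculating the median of those rows for the given target variable.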

```py
# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    forecast = list()
    # convert target number into column number
    col_ix = 3 + target_ix
    # enumerate lead times
    for i in range(len(lead_times)):
        # get the hour for this forecast lead time
        hour = chunk_test[i, 2]
        # check for no test data
        if isnan(hour):
            forecast.append(nan)
            continue
        # select rows in chunk with this hour
        selected = chunk_train[chunk_train[:,2]==hour]
        # calculate the central tendency for target
        value = nanmedian(selected[:, col_ix])
        forecast.append(value)
    return forecast
```

The complete example is listed below.

```py
# forecast local median per hour of day
from numpy import loadtxt
from numpy import nan
from numpy import isnan
from numpy import unique
from numpy import array
from numpy import nanmedian
from matplotlib import pyplot

# split the dataset by 'chunkID', return a list of chunks
def to_chunks(values, chunk_ix=0):
    chunks = list()
    # get the unique chunk ids
    chunk_ids = unique(values[:, chunk_ix])
    # group rows by chunk id
    for chunk_id in chunk_ids:
        selection = values[:, chunk_ix] == chunk_id
        chunks.append(values[selection, :])
    return chunks

# return a list of relative forecast lead times
def get_lead_times():
    return [1, 2, 3, 4, 5, 10, 17, 24, 48, 72]

# forecast all lead times for one variable
def forecast_variable(train_chunks, chunk_train, chunk_test, lead_times, target_ix):
    forecast = list()
    # convert target number into column number
    col_ix = 3 + target_ix
    # enumerate lead times
    for i in range(len(lead_times)):
        # get the hour for this forecast lead time
        hour = chunk_test[i, 2]
        # check for no test data
        if isnan(hour):
            forecast.append(nan)
            continue
        # select rows in chunk with this hour
        selected = chunk_train[chunk_train[:,2]==hour]
        # calculate the central tendency for target
        value = nanmedian(selected[:, col_ix])
        forecast.append(value)
    return forecast

# forecast for each chunk, returns [chunk][variable][time]
def forecast_chunks(train_chunks, test_input):
    lead_times = get_lead_times()
    predictions = list()
    # enumerate chunks to forecast
    for i in range(len(train_chunks)):
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(39):
            yhat = forecast_variable(train_chunks, train_chunks[i], test_input[i], lead_times, j)
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# convert the test dataset in chunks to [chunk][variable][time] format
def prepare_test_forecasts(test_chunks):
    predictions = list()
    # enumerate chunks to forecast
    for rows in test_chunks:
        # enumerate targets for chunk
        chunk_predictions = list()
        for j in range(3, rows.shape[1]):
            yhat = rows[:, j]
            chunk_predictions.append(yhat)
        chunk_predictions = array(chunk_predictions)
        predictions.append(chunk_predictions)
    return array(predictions)

# calculate the error between an actual and predicted value
def calculate_error(actual, predicted):
    # give the full actual value if predicted is nan
    if isnan(predicted):
        return abs(actual)
    # calculate abs difference
    return abs(actual - predicted)

# evaluate a forecast in the format [chunk][variable][time]
def evaluate_forecasts(predictions, testset):
    lead_times = get_lead_times()
    total_mae, times_mae = 0.0, [0.0 for _ in range(len(lead_times))]
    total_c, times_c = 0, [0 for _ in range(len(lead_times))]
    # enumerate test chunks (use the argument, not the global test_chunks)
    for i in range(len(testset)):
        # convert to forecasts
        actual = testset[i]
        predicted = predictions[i]
        # enumerate target variables
        for j in range(predicted.shape[0]):
            # enumerate lead times
            for k in range(len(lead_times)):
                # skip if actual is nan
                if isnan(actual[j, k]):
                    continue
                # calculate error
                error = calculate_error(actual[j, k], predicted[j, k])
                # update statistics
                total_mae += error
                times_mae[k] += error
                total_c += 1
                times_c[k] += 1
    # normalize summed absolute errors
    total_mae /= total_c
    times_mae = [times_mae[i]/times_c[i] for i in range(len(times_mae))]
    return total_mae, times_mae

# summarize scores
def summarize_error(name, total_mae, times_mae):
    # print summary
    lead_times = get_lead_times()
    formatted = ['+%d %.3f' % (lead_times[i], times_mae[i]) for i in range(len(lead_times))]
    s_scores = ', '.join(formatted)
    print('%s: [%.3f MAE] %s' % (name, total_mae, s_scores))
    # plot summary
    pyplot.plot([str(x) for x in lead_times], times_mae, marker='.')
    pyplot.show()

# load dataset
train = loadtxt('AirQualityPrediction/naive_train.csv', delimiter=',')
test = loadtxt('AirQualityPrediction/naive_test.csv', delimiter=',')
# group data by chunks
train_chunks = to_chunks(train)
test_chunks = to_chunks(test)
# forecast
test_input = [rows[:, :3] for rows in test_chunks]
forecast = forecast_chunks(train_chunks, test_input)
# evaluate forecast
actual = prepare_test_forecasts(test_chunks)
total_mae, times_mae = evaluate_forecasts(forecast, actual)
# summarize forecast
summarize_error('Local Median by Hour', total_mae, times_mae)
```

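Running the example prints an overall MAE of about 0.574, which is worse than the global variation of the same strategy (0.567).

As suspected, this is likely due to the small sample size, with at most five rows of training data contributing to each forecast.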

```py

Local Median by Hour: [0.574 MAE] +1 0.561, +2 0.559, +3 0.568, +4 0.577, +5 0.577, +10 0.556, +17 0.551, +24 0.588, +48 0.601, +72 0.608

```

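A line plot of MAE per forecast lead time is also created, showing the familiar curve of error broadly increasing with lead time.

MAE by Forecast Lead Time via Local Median by Hour of Day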
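The small-sample concern can be checked directly by counting how many observed values each hour of day contributes within a single chunk. The sketch below is illustrative only: `count_obs_per_hour()` is a hypothetical helper, and the chunk is synthetic, built on the assumption that the hour of day sits in column 2 and targets start at column 3, as in the code above.

```python
# count observed (non-NaN) training rows per hour of day in one chunk
from numpy import arange, isnan, nan, zeros

# hypothetical helper: map each hour of day to its number of observations
def count_obs_per_hour(chunk, col_ix, hour_ix=2):
    counts = dict()
    for hour in range(24):
        # select the rows recorded at this hour of day
        rows = chunk[chunk[:, hour_ix] == hour]
        # count only the rows where the target was actually observed
        counts[hour] = int((~isnan(rows[:, col_ix])).sum())
    return counts

# synthetic chunk: 5 days of hourly rows as [chunk id, position, hour, target]
n_rows = 5 * 24
chunk = zeros((n_rows, 4))
chunk[:, 1] = arange(n_rows)
chunk[:, 2] = arange(n_rows) % 24
chunk[:, 3] = 1.0
# pretend the first 30 hours of the target are missing
chunk[0:30, 3] = nan

counts = count_obs_per_hour(chunk, col_ix=3)
print(min(counts.values()), max(counts.values()))
```

With five days of hourly data, no hour can contribute more than five values to a median, and any missing data reduces that further, so each per-hour median is estimated from very few points.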

## Summary of Results

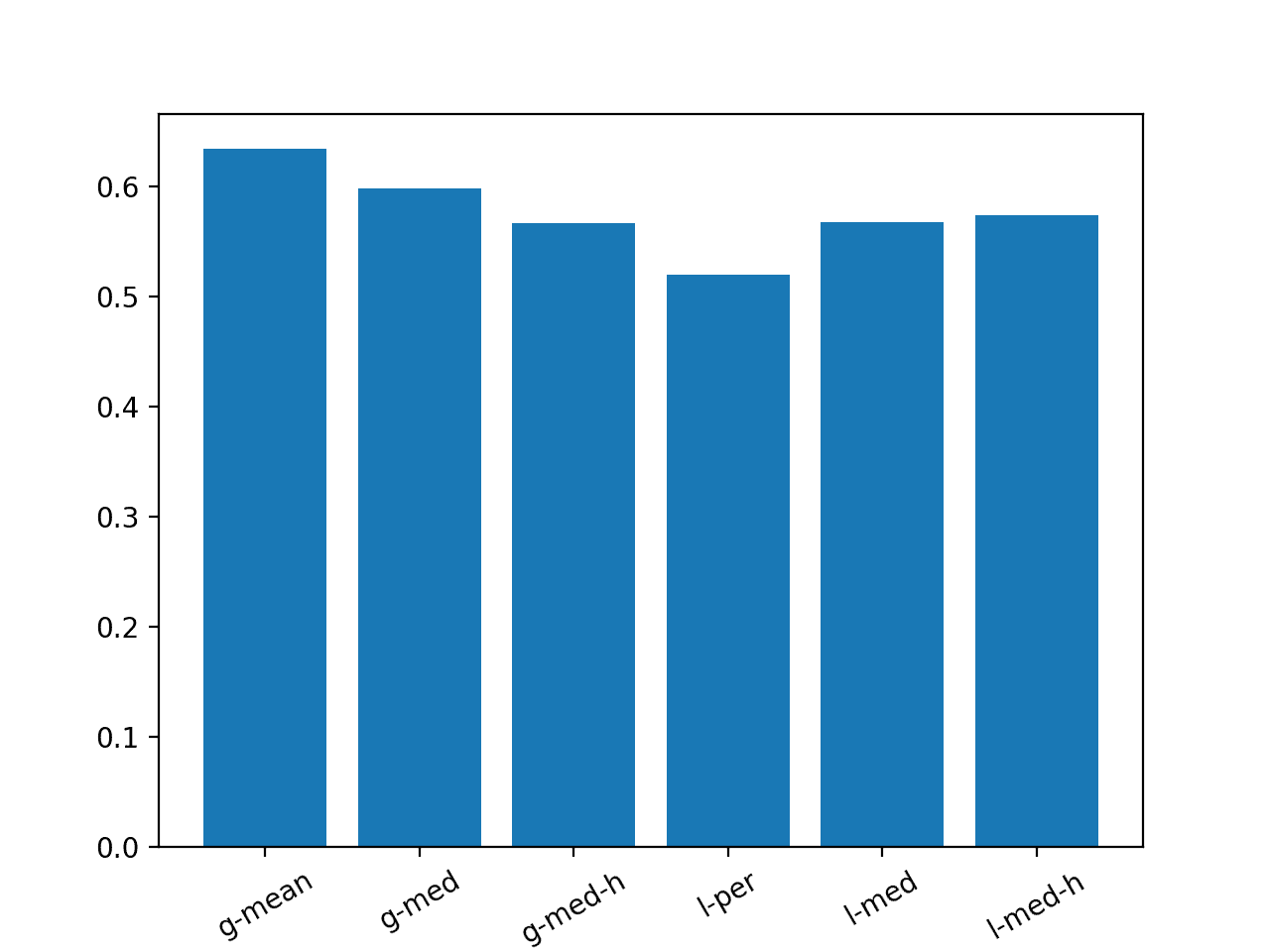

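We can summarize the performance of all of the naive forecast methods reviewed in this tutorial.

The example below lists each method using the shorthand '_g_' and '_l_' for global and local, and '_h_' for the hour-of-day variations. It creates a bar chart so that we can compare the naive strategies by their relative performance.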

```py
# summary of results
from matplotlib import pyplot
# results
results = {
    'g-mean':0.634,
    'g-med':0.598,
    'g-med-h':0.567,
    'l-per':0.520,
    'l-med':0.568,
    'l-med-h':0.574}
# plot
pyplot.bar(results.keys(), results.values())
locs, labels = pyplot.xticks()
pyplot.setp(labels, rotation=30)
pyplot.show()
```

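Running the example creates a bar chart comparing the MAE for each of the six strategies.

We can see that the persistence strategy outperforms all of the other methods, and that the second-best strategy is the global median for each series by hour of day.

Models evaluated on this train/test split of the dataset must achieve an overall MAE lower than 0.520 in order to be considered skillful.

Bar Chart with Summary of Naive Forecast Methods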
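One common way to express this threshold is a skill score relative to the baseline, where a positive value means the model beats persistence. This is a general convention rather than something the tutorial defines; the function name `skill_score()` and the candidate MAE of 0.450 are made up for illustration.

```python
# express a model's MAE as skill relative to the persistence baseline
def skill_score(model_mae, baseline_mae=0.520):
    # 0.0 matches the baseline, positive is better, negative is worse
    return 1.0 - model_mae / baseline_mae

# MAE values reported in this tutorial, plus one hypothetical candidate
scores = {
    'g-mean': 0.634,
    'g-med-h': 0.567,
    'l-per': 0.520,
    'candidate (hypothetical)': 0.450}
for name, mae in scores.items():
    print('%s: MAE=%.3f skill=%+.3f' % (name, mae, skill_score(mae)))
```

Under this measure, every global strategy has negative skill against persistence, and only a model with an MAE below 0.520 scores above zero.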

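## Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

* **Cross-Site Naive Forecast**. Develop a naive forecast strategy that uses information about each variable across sites, e.g. the different target variables that measure the same variable at different sites.

* **Hybrid Approach**. Develop a hybrid forecast strategy that combines elements of two or more of the naive forecast strategies described in this tutorial.

* **Ensemble of Naive Methods**. Develop an ensemble forecast strategy that creates a linear combination of two or more of the forecast strategies described in this tutorial.

If you explore any of these extensions, I'd love to know.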
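As a starting point for the ensemble idea, the sketch below blends a persistence forecast and a local median forecast with fixed weights on a toy series. It is only one possible reading of "linear combination": the function names, the equal weights, and the sample values are all assumptions, and the blend has not been evaluated on the dataset.

```python
# blend two naive forecasts with a weighted average (illustrative only)
from numpy import array, isnan, nan, nanmedian

# persist the last observed value for all lead times
def persistence_forecast(history, n_steps):
    valid = history[~isnan(history)]
    value = valid[-1] if valid.size > 0 else nan
    return array([value] * n_steps)

# persist the median of the observed values for all lead times
def median_forecast(history, n_steps):
    valid = history[~isnan(history)]
    value = nanmedian(valid) if valid.size > 0 else nan
    return array([value] * n_steps)

# weighted average of several forecasts of equal length
def blend(forecasts, weights):
    total = sum(weights)
    return sum(w * f for w, f in zip(weights, forecasts)) / total

# toy series with a gap; 10 lead times as in the tutorial
history = array([1.0, 2.0, nan, 4.0])
n_steps = 10
members = [persistence_forecast(history, n_steps), median_forecast(history, n_steps)]
blended = blend(members, weights=[0.5, 0.5])
print(blended)
```

The weights could instead be tuned per lead time, for example favoring persistence at short lead times where it performed best in the results above.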

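## Further Reading

This section provides more resources on the topic if you are looking to go deeper.

### Posts

* [A Standard Multivariate, Multi-Step, and Multi-Site Time Series Forecasting Problem](https://machinelearningmastery.com/standard-multivariate-multi-step-multi-site-time-series-forecasting-problem/)

* [How to Make Baseline Predictions for Time Series Forecasting with Python](https://machinelearningmastery.com/persistence-time-series-forecasting-with-python/)

### Articles

* [EMC Data Science Global Hackathon (Air Quality Prediction)](https://www.kaggle.com/c/dsg-hackathon/data)

* [Chucking Everything into a Random Forest: Ben Hamner on Winning the Air Quality Prediction Hackathon](http://blog.kaggle.com/2012/05/01/chucking-everything-into-a-random-forest-ben-hamner-on-winning-the-air-quality-prediction-hackathon/)

* [Winning Code for the EMC Data Science Global Hackathon (Air Quality Prediction)](https://github.com/benhamner/Air-Quality-Prediction-Hackathon-Winning-Model)

* [General Approaches to Partitioning the Models?](https://www.kaggle.com/c/dsg-hackathon/discussion/1821)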

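## Summary

In this tutorial, you discovered how to develop naive forecasting methods for the multi-step multivariate air pollution time series forecasting problem.

Specifically, you learned:

* How to develop a test harness for evaluating forecasting strategies for the air pollution dataset.

* How to develop global naive forecast strategies that use data from the entire training dataset.

* How to develop local naive forecast strategies that use data from the specific interval that is being forecasted.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.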
