# 十四、生成對抗網絡

生成模型被訓練以生成與他們訓練的數據類似的更多數據,并且訓練對抗模型以通過提供對抗性示例來區分真實數據和假數據。

**生成對抗網絡**(**GAN**)結合了兩種模型的特征。 GAN 有兩個組成部分:

* 生成模型,用于學習如何生成類似數據的

* 判別模型,用于學習如何區分真實數據和生成數據(來自生成模型)

GAN 已成功應用于各種復雜問題,例如:

* 從低分辨率圖像生成照片般逼真的高分辨率圖像

* 在文本中合成圖像

* 風格遷移

* 補全不完整的圖像和視頻

在本章中,我們將學習以下主題,以學習如何在 TensorFlow 和 Keras 中實現 GAN:

* 生成對抗網絡

* TensorFlow 中的簡單 GAN

* Keras 中的簡單 GAN

* TensorFlow 和 Keras 中的深度卷積 GAN

# 生成對抗網絡 101

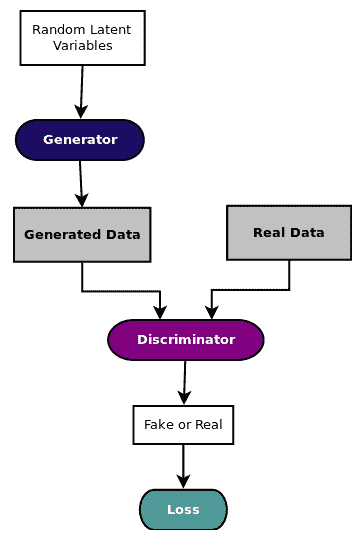

如下圖所示,生成對抗網絡(通常稱為 GAN)有兩個同步工作模型,用于學習和訓練復雜數據,如圖像,視頻或音頻文件:

直觀地,生成器模型從隨機噪聲開始生成數據,但是慢慢地學習如何生成更真實的數據。生成器輸出和實際數據被饋送到判別器,該判別器學習如何區分假數據和真實數據。

因此,生成器和判別器都發揮對抗性游戲,其中生成器試圖通過生成盡可能真實的數據來欺騙判別器,并且判別器試圖不通過從真實數據中識別偽數據而被欺騙,因此判別器試圖最小化分類損失。兩個模型都以鎖步方式進行訓練。

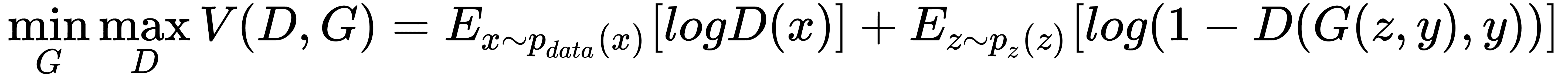

在數學上,生成模型`G(z)`學習概率分布`p(z)`,使得判別器`D(G(z), x)`無法在概率分布`p(z)`和`p(x)`之間進行識別。 GAN 的目標函數可以通過下面描述值函數`V`的等式來描述,(來自[此鏈接](https://papers.nips.cc/paper/5423-generative-adversarial-nets.pdf)):

[可以在此鏈接中找到 IAN Goodfellow 在 NIPS 2016 上關于 GAN 的開創性教程](https://arxiv.org/pdf/1701.00160.pdf)。

這個描述代表了一個簡單的 GAN(在文獻中也稱為香草 GAN),[由 Goodfellow 在此鏈接提供的開創性論文中首次介紹](https://arxiv.org/abs/1406.2661)。從那時起,在基于 GAN 推導不同架構并將其應用于不同應用領域方面進行了大量研究。

例如,在條件 GAN 中,為生成器和判別器網絡提供標簽,使得條件 GAN 的目標函數可以通過以下描述值函數`V`的等式來描述:

[描述條件 GAN 的原始論文位于此鏈接](https://arxiv.org/abs/1411.1784)。

應用中使用的其他幾種衍生產品及其原始論文,如文本到圖像,圖像合成,圖像標記,樣式轉移和圖像轉移等,如下表所示:

| **GAN 衍生物** | **原始文件** | **示例應用** |

| --- | --- | --- |

| StackGAN | <https://arxiv.org/abs/1710.10916> | 文字到圖像 |

| StackGAN++ | <https://arxiv.org/abs/1612.03242> | 逼真的圖像合成 |

| DCGAN | <https://arxiv.org/abs/1511.06434> | 圖像合成 |

| HR-DCGAN | <https://arxiv.org/abs/1711.06491> | 高分辨率圖像合成 |

| 條件 GAN | <https://arxiv.org/abs/1411.1784> | 圖像標記 |

| InfoGAN | <https://arxiv.org/abs/1606.03657> | 風格識別 |

| Wasserstein GAN | <https://arxiv.org/abs/1701.07875> <https://arxiv.org/abs/1704.00028> | 圖像生成 |

| 耦合 GAN | <https://arxiv.org/abs/1606.07536> | 圖像轉換,域適應 |

| BEGAN | <https://arxiv.org/abs/1703.10717> | 圖像生成 |

| DiscoGAN | <https://arxiv.org/abs/1703.05192> | 風格遷移 |

| CycleGAN | <https://arxiv.org/abs/1703.10593> | 風格遷移 |

讓我們練習使用 MNIST 數據集創建一個簡單的 GAN。在本練習中,我們將使用以下函數將 MNIST 數據集標準化為介于`[-1, +1]`之間:

```py

def norm(x):

return (x-0.5)/0.5

```

我們還定義了 256 維的隨機噪聲,用于測試生成器模型:

```py

n_z = 256

z_test = np.random.uniform(-1.0,1.0,size=[8,n_z])

```

顯示將在本章所有示例中使用的生成圖像的函數:

```py

def display_images(images):

for i in range(images.shape[0]): plt.subplot(1, 8, i + 1)

plt.imshow(images[i])

plt.axis('off')

plt.tight_layout()

plt.show()

```

# 建立和訓練 GAN 的最佳實踐

對于我們為此演示選擇的數據集,判別器在對真實和假圖像進行分類方面變得非常擅長,因此沒有為生成器提供梯度方面的大量反饋。因此,我們必須通過以下最佳實踐使判別器變弱:

* 判別器的學習率保持遠高于生成器的學習率。

* 判別器的優化器是`GradientDescent`,生成器的優化器是`Adam`。

* 判別器具有丟棄正則化,而生成器則沒有。

* 與生成器相比,判別器具有更少的層和更少的神經元。

* 生成器的輸出是`tanh`,而判別器的輸出是 sigmoid。

* 在 Keras 模型中,對于實際數據的標簽,我們使用 0.9 而不是 1.0 的值,對于偽數據的標簽,我們使用 0.1 而不是 0.0,以便在標簽中引入一點噪聲

歡迎您探索并嘗試其他最佳實踐。

# TensorFlow 中的簡單的 GAN

您可以按照 Jupyter 筆記本中的代碼`ch-14a_SimpleGAN`。

為了使用 TensorFlow 構建 GAN,我們使用以下步驟構建三個網絡,兩個判別器模型和一個生成器模型:

1. 首先添加用于定義網絡的超參數:

```py

# graph hyperparameters

g_learning_rate = 0.00001

d_learning_rate = 0.01

n_x = 784 # number of pixels in the MNIST image

# number of hidden layers for generator and discriminator

g_n_layers = 3

d_n_layers = 1

# neurons in each hidden layer

g_n_neurons = [256, 512, 1024]

d_n_neurons = [256]

# define parameter ditionary

d_params = {}

g_params = {}

activation = tf.nn.leaky_relu

w_initializer = tf.glorot_uniform_initializer

b_initializer = tf.zeros_initializer

```

1. 接下來,定義生成器網絡:

```py

z_p = tf.placeholder(dtype=tf.float32, name='z_p',

shape=[None, n_z])

layer = z_p

# add generator network weights, biases and layers

with tf.variable_scope('g'):

for i in range(0, g_n_layers): w_name = 'w_{0:04d}'.format(i)

g_params[w_name] = tf.get_variable(

name=w_name,

shape=[n_z if i == 0 else g_n_neurons[i - 1],

g_n_neurons[i]],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

g_params[b_name] = tf.get_variable(

name=b_name, shape=[g_n_neurons[i]],

initializer=b_initializer())

layer = activation(

tf.matmul(layer, g_params[w_name]) + g_params[b_name])

# output (logit) layer

i = g_n_layers

w_name = 'w_{0:04d}'.format(i)

g_params[w_name] = tf.get_variable(

name=w_name,

shape=[g_n_neurons[i - 1], n_x],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

g_params[b_name] = tf.get_variable(

name=b_name, shape=[n_x], initializer=b_initializer())

g_logit = tf.matmul(layer, g_params[w_name]) + g_params[b_name]

g_model = tf.nn.tanh(g_logit)

```

1. 接下來,定義我們將構建的兩個判別器網絡的權重和偏差:

```py

with tf.variable_scope('d'):

for i in range(0, d_n_layers): w_name = 'w_{0:04d}'.format(i)

d_params[w_name] = tf.get_variable(

name=w_name,

shape=[n_x if i == 0 else d_n_neurons[i - 1],

d_n_neurons[i]],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

d_params[b_name] = tf.get_variable(

name=b_name, shape=[d_n_neurons[i]],

initializer=b_initializer())

#output (logit) layer

i = d_n_layers

w_name = 'w_{0:04d}'.format(i)

d_params[w_name] = tf.get_variable(

name=w_name, shape=[d_n_neurons[i - 1], 1],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

d_params[b_name] = tf.get_variable(

name=b_name, shape=[1], initializer=b_initializer())

```

1. 現在使用這些參數,構建將真實圖像作為輸入并輸出分類的判別器:

```py

# define discriminator_real

# input real images

x_p = tf.placeholder(dtype=tf.float32, name='x_p',

shape=[None, n_x])

layer = x_p

with tf.variable_scope('d'):

for i in range(0, d_n_layers): w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

layer = activation(

tf.matmul(layer, d_params[w_name]) + d_params[b_name])

layer = tf.nn.dropout(layer,0.7)

#output (logit) layer

i = d_n_layers

w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

d_logit_real = tf.matmul(layer,

d_params[w_name]) + d_params[b_name]

d_model_real = tf.nn.sigmoid(d_logit_real)

```

1. 接下來,使用相同的參數構建另一個判別器網絡,但提供生成器的輸出作為輸入:

```py

# define discriminator_fake

# input generated fake images

z = g_model

layer = z

with tf.variable_scope('d'):

for i in range(0, d_n_layers): w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

layer = activation(

tf.matmul(layer, d_params[w_name]) + d_params[b_name])

layer = tf.nn.dropout(layer,0.7)

#output (logit) layer

i = d_n_layers

w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

d_logit_fake = tf.matmul(layer,

d_params[w_name]) + d_params[b_name]

d_model_fake = tf.nn.sigmoid(d_logit_fake)

```

1. 現在我們已經建立了三個網絡,它們之間的連接是使用損失,優化器和訓練函數完成的。在訓練生成器時,我們只訓練生成器的參數,在訓練判別器時,我們只訓練判別器的參數。我們使用`var_list`參數將此指定給優化器的`minimize()`函數。以下是為兩種網絡定義損失,優化器和訓練函數的完整代碼:

```py

g_loss = -tf.reduce_mean(tf.log(d_model_fake))

d_loss = -tf.reduce_mean(tf.log(d_model_real) + tf.log(1 - d_model_fake))

g_optimizer = tf.train.AdamOptimizer(g_learning_rate)

d_optimizer = tf.train.GradientDescentOptimizer(d_learning_rate)

g_train_op = g_optimizer.minimize(g_loss,

var_list=list(g_params.values()))

d_train_op = d_optimizer.minimize(d_loss,

var_list=list(d_params.values()))

```

1. 現在我們已經定義了模型,我們必須訓練模型。訓練按照以下算法完成:

```py

For each epoch:

For each batch: get real images x_batch

generate noise z_batch

train discriminator using z_batch and x_batch

generate noise z_batch

train generator using z_batch

```

筆記本電腦的完整訓練代碼如下:

```py

n_epochs = 400

batch_size = 100

n_batches = int(mnist.train.num_examples / batch_size)

n_epochs_print = 50

with tf.Session() as tfs:

tfs.run(tf.global_variables_initializer())

for epoch in range(n_epochs):

epoch_d_loss = 0.0

epoch_g_loss = 0.0

for batch in range(n_batches):

x_batch, _ = mnist.train.next_batch(batch_size)

x_batch = norm(x_batch)

z_batch = np.random.uniform(-1.0,1.0,size=[batch_size,n_z])

feed_dict = {x_p: x_batch,z_p: z_batch}

_,batch_d_loss = tfs.run([d_train_op,d_loss],

feed_dict=feed_dict)

z_batch = np.random.uniform(-1.0,1.0,size=[batch_size,n_z])

feed_dict={z_p: z_batch}

_,batch_g_loss = tfs.run([g_train_op,g_loss],

feed_dict=feed_dict)

epoch_d_loss += batch_d_loss

epoch_g_loss += batch_g_loss

if epoch%n_epochs_print == 0:

average_d_loss = epoch_d_loss / n_batches

average_g_loss = epoch_g_loss / n_batches

print('epoch: {0:04d} d_loss = {1:0.6f} g_loss = {2:0.6f}'

.format(epoch,average_d_loss,average_g_loss))

# predict images using generator model trained

x_pred = tfs.run(g_model,feed_dict={z_p:z_test})

display_images(x_pred.reshape(-1,pixel_size,pixel_size))

```

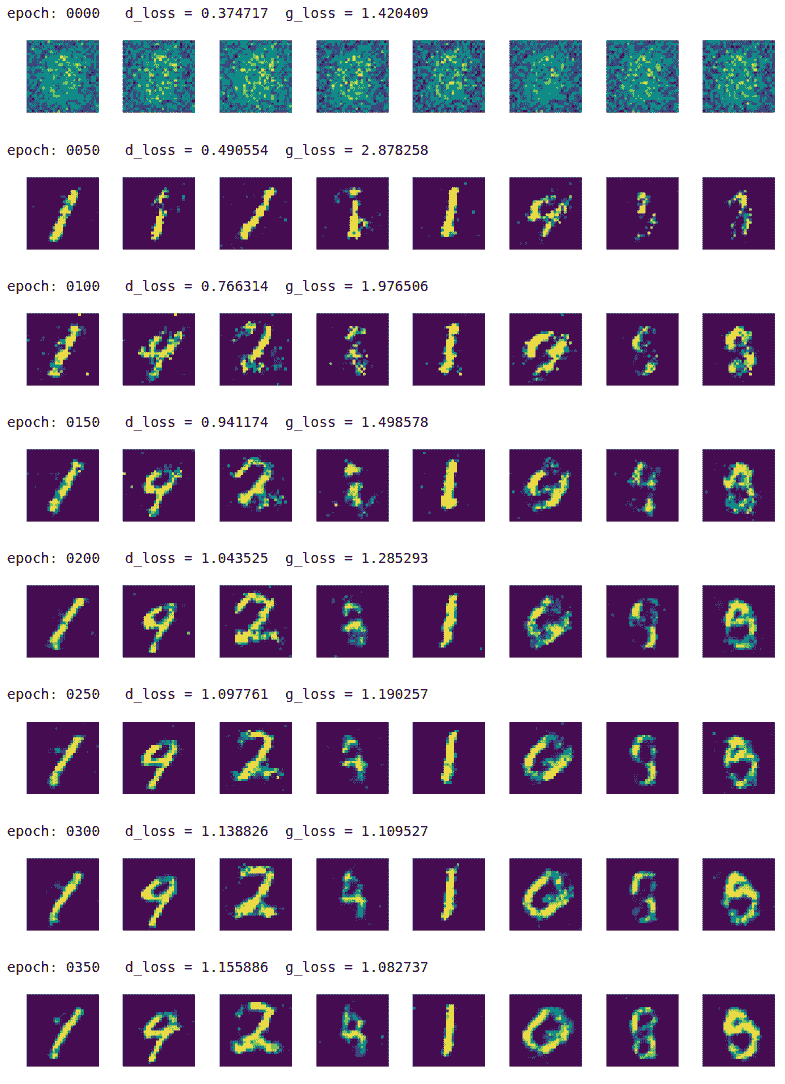

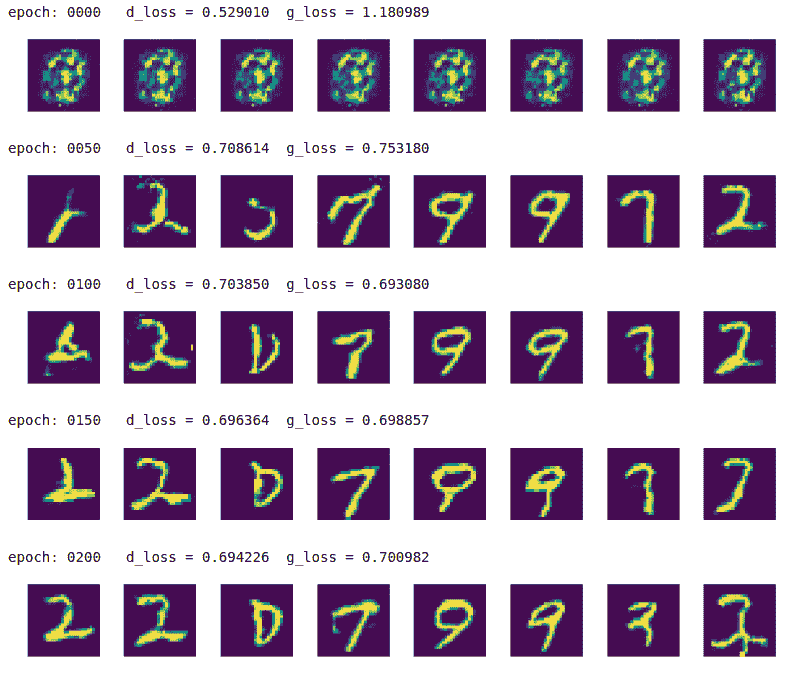

我們每 50 個周期印刷生成的圖像:

正如我們所看到的那樣,生成器在周期 0 中只產生噪聲,但是在周期 350 中,它經過訓練可以產生更好的手寫數字形狀。您可以嘗試使用周期,正則化,網絡架構和其他超參數進行試驗,看看是否可以產生更快更好的結果。

# Keras 中的簡單的 GAN

您可以按照 Jupyter 筆記本中的代碼`ch-14a_SimpleGAN`。

現在讓我們在 Keras 實現相同的模型:

1. 超參數定義與上一節保持一致:

```py

# graph hyperparameters

g_learning_rate = 0.00001

d_learning_rate = 0.01

n_x = 784 # number of pixels in the MNIST image

# number of hidden layers for generator and discriminator

g_n_layers = 3

d_n_layers = 1

# neurons in each hidden layer

g_n_neurons = [256, 512, 1024]

d_n_neurons = [256]

```

1. 接下來,定義生成器網絡:

```py

# define generator

g_model = Sequential()

g_model.add(Dense(units=g_n_neurons[0],

input_shape=(n_z,),

name='g_0'))

g_model.add(LeakyReLU())

for i in range(1,g_n_layers):

g_model.add(Dense(units=g_n_neurons[i],

name='g_{}'.format(i)

))

g_model.add(LeakyReLU())

g_model.add(Dense(units=n_x, activation='tanh',name='g_out'))

print('Generator:')

g_model.summary()

g_model.compile(loss='binary_crossentropy',

optimizer=keras.optimizers.Adam(lr=g_learning_rate)

)

```

這就是生成器模型的樣子:

```py

Generator:

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

g_0 (Dense) (None, 256) 65792

_________________________________________________________________

leaky_re_lu_1 (LeakyReLU) (None, 256) 0

_________________________________________________________________

g_1 (Dense) (None, 512) 131584

_________________________________________________________________

leaky_re_lu_2 (LeakyReLU) (None, 512) 0

_________________________________________________________________

g_2 (Dense) (None, 1024) 525312

_________________________________________________________________

leaky_re_lu_3 (LeakyReLU) (None, 1024) 0

_________________________________________________________________

g_out (Dense) (None, 784) 803600

=================================================================

Total params: 1,526,288

Trainable params: 1,526,288

Non-trainable params: 0

_________________________________________________________________

```

1. 在 Keras 示例中,我們沒有定義兩個判別器網絡,就像我們在 TensorFlow 示例中定義的那樣。相反,我們定義一個判別器網絡,然后將生成器和判別器網絡縫合到 GAN 網絡中。然后,GAN 網絡僅用于訓練生成器參數,判別器網絡用于訓練判別器參數:

```py

# define discriminator

d_model = Sequential()

d_model.add(Dense(units=d_n_neurons[0],

input_shape=(n_x,),

name='d_0'

))

d_model.add(LeakyReLU())

d_model.add(Dropout(0.3))

for i in range(1,d_n_layers):

d_model.add(Dense(units=d_n_neurons[i],

name='d_{}'.format(i)

))

d_model.add(LeakyReLU())

d_model.add(Dropout(0.3))

d_model.add(Dense(units=1, activation='sigmoid',name='d_out'))

print('Discriminator:')

d_model.summary()

d_model.compile(loss='binary_crossentropy',

optimizer=keras.optimizers.SGD(lr=d_learning_rate)

)

```

這是判別器模型的外觀:

```py

Discriminator:

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

d_0 (Dense) (None, 256) 200960

_________________________________________________________________

leaky_re_lu_4 (LeakyReLU) (None, 256) 0

_________________________________________________________________

dropout_1 (Dropout) (None, 256) 0

_________________________________________________________________

d_out (Dense) (None, 1) 257

=================================================================

Total params: 201,217

Trainable params: 201,217

Non-trainable params: 0

_________________________________________________________________

```

1. 接下來,定義 GAN 網絡,并將判別器模型的可訓練屬性轉換為`false`,因為 GAN 僅用于訓練生成器:

```py

# define GAN network

d_model.trainable=False

z_in = Input(shape=(n_z,),name='z_in')

x_in = g_model(z_in)

gan_out = d_model(x_in)

gan_model = Model(inputs=z_in,outputs=gan_out,name='gan')

print('GAN:')

gan_model.summary()

```

```py

gan_model.compile(loss='binary_crossentropy',

optimizer=keras.optimizers.Adam(lr=g_learning_rate)

)

```

這就是 GAN 模型的樣子:

```py

GAN:

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

z_in (InputLayer) (None, 256) 0

_________________________________________________________________

sequential_1 (Sequential) (None, 784) 1526288

_________________________________________________________________

sequential_2 (Sequential) (None, 1) 201217

=================================================================

Total params: 1,727,505

Trainable params: 1,526,288

Non-trainable params: 201,217

_________________________________________________________________

```

1. 太好了,現在我們已經定義了三個模型,我們必須訓練模型。訓練按照以下算法進行:

```py

For each epoch:

For each batch: get real images x_batch

generate noise z_batch

generate images g_batch using generator model

combine g_batch and x_batch into x_in and create labels y_out

set discriminator model as trainable

train discriminator using x_in and y_out

generate noise z_batch

set x_in = z_batch and labels y_out = 1

set discriminator model as non-trainable

train gan model using x_in and y_out,

(effectively training generator model)

```

為了設置標簽,我們分別對真實和假圖像應用標簽 0.9 和 0.1。通常,建議您使用標簽平滑,通過為假數據選擇 0.0 到 0.3 的隨機值,為實際數據選擇 0.8 到 1.0。

以下是筆記本電腦訓練的完整代碼:

```py

n_epochs = 400

batch_size = 100

n_batches = int(mnist.train.num_examples / batch_size)

n_epochs_print = 50

for epoch in range(n_epochs+1):

epoch_d_loss = 0.0

epoch_g_loss = 0.0

for batch in range(n_batches):

x_batch, _ = mnist.train.next_batch(batch_size)

x_batch = norm(x_batch)

z_batch = np.random.uniform(-1.0,1.0,size=[batch_size,n_z])

g_batch = g_model.predict(z_batch)

x_in = np.concatenate([x_batch,g_batch])

y_out = np.ones(batch_size*2)

y_out[:batch_size]=0.9

y_out[batch_size:]=0.1

d_model.trainable=True

batch_d_loss = d_model.train_on_batch(x_in,y_out)

z_batch = np.random.uniform(-1.0,1.0,size=[batch_size,n_z])

x_in=z_batch

y_out = np.ones(batch_size)

d_model.trainable=False

batch_g_loss = gan_model.train_on_batch(x_in,y_out)

epoch_d_loss += batch_d_loss

epoch_g_loss += batch_g_loss

if epoch%n_epochs_print == 0:

average_d_loss = epoch_d_loss / n_batches

average_g_loss = epoch_g_loss / n_batches

print('epoch: {0:04d} d_loss = {1:0.6f} g_loss = {2:0.6f}'

.format(epoch,average_d_loss,average_g_loss))

# predict images using generator model trained

x_pred = g_model.predict(z_test)

display_images(x_pred.reshape(-1,pixel_size,pixel_size))

```

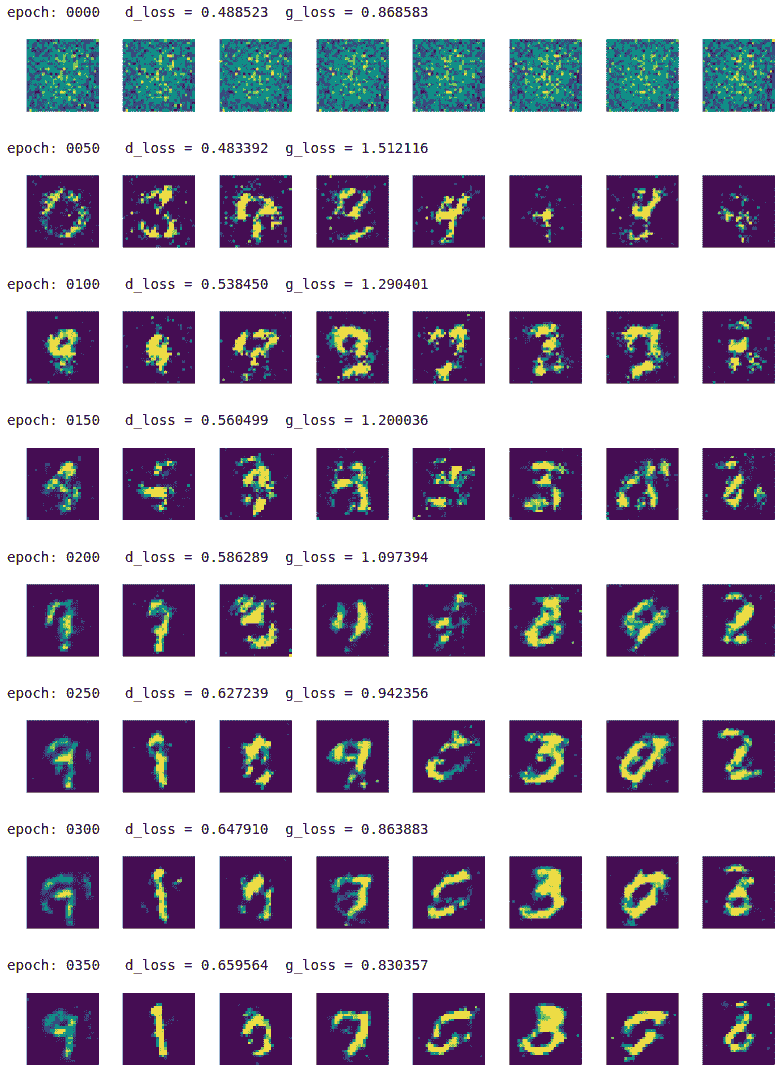

我們每 50 個周期印刷結果,最多 350 個周期:

該模型慢慢地學習從隨機噪聲中生成高質量的手寫數字圖像。

GAN 有如此多的變化,它將需要另一本書來涵蓋所有不同類型的 GAN。但是,實現技術幾乎與我們在此處所示的相似。

# TensorFlow 和 Keras 中的深度卷積 GAN

您可以按照 Jupyter 筆記本中的代碼`ch-14b_DCGAN`。

在 DCGAN 中,判別器和生成器都是使用深度卷積網絡實現的:

1. 在此示例中,我們決定將生成器實現為以下網絡:

```py

Generator:

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

g_in (Dense) (None, 3200) 822400

_________________________________________________________________

g_in_act (Activation) (None, 3200) 0

_________________________________________________________________

g_in_reshape (Reshape) (None, 5, 5, 128) 0

_________________________________________________________________

g_0_up2d (UpSampling2D) (None, 10, 10, 128) 0

_________________________________________________________________

g_0_conv2d (Conv2D) (None, 10, 10, 64) 204864

_________________________________________________________________

g_0_act (Activation) (None, 10, 10, 64) 0

_________________________________________________________________

g_1_up2d (UpSampling2D) (None, 20, 20, 64) 0

_________________________________________________________________

g_1_conv2d (Conv2D) (None, 20, 20, 32) 51232

_________________________________________________________________

g_1_act (Activation) (None, 20, 20, 32) 0

_________________________________________________________________

g_2_up2d (UpSampling2D) (None, 40, 40, 32) 0

_________________________________________________________________

g_2_conv2d (Conv2D) (None, 40, 40, 16) 12816

_________________________________________________________________

g_2_act (Activation) (None, 40, 40, 16) 0

_________________________________________________________________

g_out_flatten (Flatten) (None, 25600) 0

_________________________________________________________________

g_out (Dense) (None, 784) 20071184

=================================================================

Total params: 21,162,496

Trainable params: 21,162,496

Non-trainable params: 0

```

1. 生成器是一個更強大的網絡,有三個卷積層,然后是 tanh 激活。我們將判別器網絡定義如下:

```py

Discriminator:

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

d_0_reshape (Reshape) (None, 28, 28, 1) 0

_________________________________________________________________

d_0_conv2d (Conv2D) (None, 28, 28, 64) 1664

_________________________________________________________________

d_0_act (Activation) (None, 28, 28, 64) 0

_________________________________________________________________

d_0_maxpool (MaxPooling2D) (None, 14, 14, 64) 0

_________________________________________________________________

d_out_flatten (Flatten) (None, 12544) 0

_________________________________________________________________

d_out (Dense) (None, 1) 12545

=================================================================

Total params: 14,209

Trainable params: 14,209

Non-trainable params: 0

_________________________________________________________________

```

1. GAN 網絡由判別器和生成器組成,如前所述:

```py

GAN:

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

z_in (InputLayer) (None, 256) 0

_________________________________________________________________

g (Sequential) (None, 784) 21162496

_________________________________________________________________

d (Sequential) (None, 1) 14209

=================================================================

Total params: 21,176,705

Trainable params: 21,162,496

Non-trainable params: 14,209

_________________________________________________________________

```

當我們運行這個模型 400 個周期時,我們得到以下輸出:

如您所見,DCGAN 能夠從周期 100 本身開始生成高質量的數字。 DGCAN 已被用于樣式轉移,圖像和標題的生成以及圖像代數,即拍攝一個圖像的一部分并將其添加到另一個圖像的部分。 MNIST DCGAN 的完整代碼在筆記本`ch-14b_DCGAN`中提供。

# 總結

在本章中,我們了解了生成對抗網絡。我們在 TensorFlow 和 Keras 中構建了一個簡單的 GAN,并將其應用于從 MNIST 數據集生成圖像。我們還了解到,許多不同的 GAN 衍生產品正在不斷推出,例如 DCGAN,SRGAN,StackGAN 和 CycleGAN 等等。我們還建立了一個 DCGAN,其中生成器和判別器由卷積網絡組成。我們鼓勵您閱讀并嘗試不同的衍生工具,以了解哪些模型適合他們試圖解決的問題。

在下一章中,我們將學習如何使用 TensorFlow 集群和多個計算設備(如多個 GPU)在分布式集群中構建和部署模型。

- TensorFlow 1.x 深度學習秘籍

- 零、前言

- 一、TensorFlow 簡介

- 二、回歸

- 三、神經網絡:感知器

- 四、卷積神經網絡

- 五、高級卷積神經網絡

- 六、循環神經網絡

- 七、無監督學習

- 八、自編碼器

- 九、強化學習

- 十、移動計算

- 十一、生成模型和 CapsNet

- 十二、分布式 TensorFlow 和云深度學習

- 十三、AutoML 和學習如何學習(元學習)

- 十四、TensorFlow 處理單元

- 使用 TensorFlow 構建機器學習項目中文版

- 一、探索和轉換數據

- 二、聚類

- 三、線性回歸

- 四、邏輯回歸

- 五、簡單的前饋神經網絡

- 六、卷積神經網絡

- 七、循環神經網絡和 LSTM

- 八、深度神經網絡

- 九、大規模運行模型 -- GPU 和服務

- 十、庫安裝和其他提示

- TensorFlow 深度學習中文第二版

- 一、人工神經網絡

- 二、TensorFlow v1.6 的新功能是什么?

- 三、實現前饋神經網絡

- 四、CNN 實戰

- 五、使用 TensorFlow 實現自編碼器

- 六、RNN 和梯度消失或爆炸問題

- 七、TensorFlow GPU 配置

- 八、TFLearn

- 九、使用協同過濾的電影推薦

- 十、OpenAI Gym

- TensorFlow 深度學習實戰指南中文版

- 一、入門

- 二、深度神經網絡

- 三、卷積神經網絡

- 四、循環神經網絡介紹

- 五、總結

- 精通 TensorFlow 1.x

- 一、TensorFlow 101

- 二、TensorFlow 的高級庫

- 三、Keras 101

- 四、TensorFlow 中的經典機器學習

- 五、TensorFlow 和 Keras 中的神經網絡和 MLP

- 六、TensorFlow 和 Keras 中的 RNN

- 七、TensorFlow 和 Keras 中的用于時間序列數據的 RNN

- 八、TensorFlow 和 Keras 中的用于文本數據的 RNN

- 九、TensorFlow 和 Keras 中的 CNN

- 十、TensorFlow 和 Keras 中的自編碼器

- 十一、TF 服務:生產中的 TensorFlow 模型

- 十二、遷移學習和預訓練模型

- 十三、深度強化學習

- 十四、生成對抗網絡

- 十五、TensorFlow 集群的分布式模型

- 十六、移動和嵌入式平臺上的 TensorFlow 模型

- 十七、R 中的 TensorFlow 和 Keras

- 十八、調試 TensorFlow 模型

- 十九、張量處理單元

- TensorFlow 機器學習秘籍中文第二版

- 一、TensorFlow 入門

- 二、TensorFlow 的方式

- 三、線性回歸

- 四、支持向量機

- 五、最近鄰方法

- 六、神經網絡

- 七、自然語言處理

- 八、卷積神經網絡

- 九、循環神經網絡

- 十、將 TensorFlow 投入生產

- 十一、更多 TensorFlow

- 與 TensorFlow 的初次接觸

- 前言

- 1.?TensorFlow 基礎知識

- 2. TensorFlow 中的線性回歸

- 3. TensorFlow 中的聚類

- 4. TensorFlow 中的單層神經網絡

- 5. TensorFlow 中的多層神經網絡

- 6. 并行

- 后記

- TensorFlow 學習指南

- 一、基礎

- 二、線性模型

- 三、學習

- 四、分布式

- TensorFlow Rager 教程

- 一、如何使用 TensorFlow Eager 構建簡單的神經網絡

- 二、在 Eager 模式中使用指標

- 三、如何保存和恢復訓練模型

- 四、文本序列到 TFRecords

- 五、如何將原始圖片數據轉換為 TFRecords

- 六、如何使用 TensorFlow Eager 從 TFRecords 批量讀取數據

- 七、使用 TensorFlow Eager 構建用于情感識別的卷積神經網絡(CNN)

- 八、用于 TensorFlow Eager 序列分類的動態循壞神經網絡

- 九、用于 TensorFlow Eager 時間序列回歸的遞歸神經網絡

- TensorFlow 高效編程

- 圖嵌入綜述:問題,技術與應用

- 一、引言

- 三、圖嵌入的問題設定

- 四、圖嵌入技術

- 基于邊重構的優化問題

- 應用

- 基于深度學習的推薦系統:綜述和新視角

- 引言

- 基于深度學習的推薦:最先進的技術

- 基于卷積神經網絡的推薦

- 關于卷積神經網絡我們理解了什么

- 第1章概論

- 第2章多層網絡

- 2.1.4生成對抗網絡

- 2.2.1最近ConvNets演變中的關鍵架構

- 2.2.2走向ConvNet不變性

- 2.3時空卷積網絡

- 第3章了解ConvNets構建塊

- 3.2整改

- 3.3規范化

- 3.4匯集

- 第四章現狀

- 4.2打開問題

- 參考

- 機器學習超級復習筆記

- Python 遷移學習實用指南

- 零、前言

- 一、機器學習基礎

- 二、深度學習基礎

- 三、了解深度學習架構

- 四、遷移學習基礎

- 五、釋放遷移學習的力量

- 六、圖像識別與分類

- 七、文本文件分類

- 八、音頻事件識別與分類

- 九、DeepDream

- 十、自動圖像字幕生成器

- 十一、圖像著色

- 面向計算機視覺的深度學習

- 零、前言

- 一、入門

- 二、圖像分類

- 三、圖像檢索

- 四、對象檢測

- 五、語義分割

- 六、相似性學習

- 七、圖像字幕

- 八、生成模型

- 九、視頻分類

- 十、部署

- 深度學習快速參考

- 零、前言

- 一、深度學習的基礎

- 二、使用深度學習解決回歸問題

- 三、使用 TensorBoard 監控網絡訓練

- 四、使用深度學習解決二分類問題

- 五、使用 Keras 解決多分類問題

- 六、超參數優化

- 七、從頭開始訓練 CNN

- 八、將預訓練的 CNN 用于遷移學習

- 九、從頭開始訓練 RNN

- 十、使用詞嵌入從頭開始訓練 LSTM

- 十一、訓練 Seq2Seq 模型

- 十二、深度強化學習

- 十三、生成對抗網絡

- TensorFlow 2.0 快速入門指南

- 零、前言

- 第 1 部分:TensorFlow 2.00 Alpha 簡介

- 一、TensorFlow 2 簡介

- 二、Keras:TensorFlow 2 的高級 API

- 三、TensorFlow 2 和 ANN 技術

- 第 2 部分:TensorFlow 2.00 Alpha 中的監督和無監督學習

- 四、TensorFlow 2 和監督機器學習

- 五、TensorFlow 2 和無監督學習

- 第 3 部分:TensorFlow 2.00 Alpha 的神經網絡應用

- 六、使用 TensorFlow 2 識別圖像

- 七、TensorFlow 2 和神經風格遷移

- 八、TensorFlow 2 和循環神經網絡

- 九、TensorFlow 估計器和 TensorFlow HUB

- 十、從 tf1.12 轉換為 tf2

- TensorFlow 入門

- 零、前言

- 一、TensorFlow 基本概念

- 二、TensorFlow 數學運算

- 三、機器學習入門

- 四、神經網絡簡介

- 五、深度學習

- 六、TensorFlow GPU 編程和服務

- TensorFlow 卷積神經網絡實用指南

- 零、前言

- 一、TensorFlow 的設置和介紹

- 二、深度學習和卷積神經網絡

- 三、TensorFlow 中的圖像分類

- 四、目標檢測與分割

- 五、VGG,Inception,ResNet 和 MobileNets

- 六、自編碼器,變分自編碼器和生成對抗網絡

- 七、遷移學習

- 八、機器學習最佳實踐和故障排除

- 九、大規模訓練

- 十、參考文獻