# 3. How to Save and Restore a Trained Model

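After scrolling through posts on `reddit.com/r/learnmachinelearning`, I realized that the main bottlenecks in machine learning projects show up in the data input pipeline and in the final stage of modeling, when you have to save the model and make predictions on new data. So I thought it would be useful to put together a simple, straightforward tutorial showing how to save and restore a model built with TensorFlow Eager.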

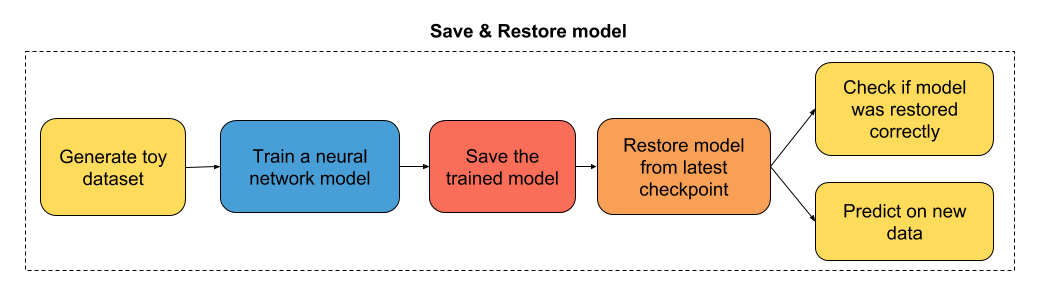

Flowchart of the tutorial

## Import useful libraries

```py
# Import TensorFlow and TensorFlow Eager
import tensorflow as tf
import tensorflow.contrib.eager as tfe

# Import a function to generate a toy classification problem
from sklearn.datasets import make_moons

# Enable eager mode. Once enabled, it cannot be undone! Run only once.
tfe.enable_eager_execution()
```

## Part 1: Build a simple neural network for binary classification

```py
class simple_nn(tf.keras.Model):
    def __init__(self):
        super(simple_nn, self).__init__()
        """ Define here the layers used
            during the forward pass.
        """
        # Hidden layer
        self.dense_layer = tf.layers.Dense(10, activation=tf.nn.relu)
        # Output layer, no activation
        self.output_layer = tf.layers.Dense(2, activation=None)

    def predict(self, input_data):
        """ Run a forward pass through the network.
            Args:
                input_data: 2D tensor of shape (n_samples, n_features).
            Returns:
                logits: unnormalized predictions.
        """
        hidden_activations = self.dense_layer(input_data)
        logits = self.output_layer(hidden_activations)
        return logits

    def loss_fn(self, input_data, target):
        """ Define the loss function used during training.
        """
        logits = self.predict(input_data)
        loss = tf.losses.sparse_softmax_cross_entropy(labels=target, logits=logits)
        return loss

    def grads_fn(self, input_data, target):
        """ Dynamically compute the gradients of the loss with respect
            to the model parameters at each forward step.
        """
        with tfe.GradientTape() as tape:
            loss = self.loss_fn(input_data, target)
        return tape.gradient(loss, self.variables)

    def fit(self, input_data, target, optimizer, num_epochs=500, verbose=50):
        """ Train the model with the chosen optimizer
            for the requested number of epochs.
        """
        for i in range(num_epochs):
            grads = self.grads_fn(input_data, target)
            optimizer.apply_gradients(zip(grads, self.variables))
            # Report the loss on the first epoch and every `verbose` epochs
            if (i == 0) or ((i + 1) % verbose == 0):
                print('Loss at epoch %d: %f' % (i + 1, self.loss_fn(input_data, target).numpy()))
```
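
The `loss_fn` method above relies on `tf.losses.sparse_softmax_cross_entropy`, which takes integer class labels and unnormalized logits. As a rough illustration of the quantity being minimized, here is a minimal pure-Python sketch of the mean sparse softmax cross-entropy. The helper name and the sample values are assumptions made for this example. It is not TensorFlow's implementation, and it ignores options such as sample weights and reduction modes.

```python
import math

def sparse_softmax_cross_entropy(labels, logits):
    """Mean of -log(softmax(logits)[label]) over all samples."""
    total = 0.0
    for label, row in zip(labels, logits):
        # Log-sum-exp with the max subtracted for numerical stability
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        # -log(softmax(row)[label]) equals log_sum_exp - row[label]
        total += log_sum_exp - row[label]
    return total / len(labels)

# Two classes with equal logits: the model is maximally unsure, loss = ln(2)
print(sparse_softmax_cross_entropy([0], [[0.0, 0.0]]))

# A confident, correct prediction gives a loss close to zero
print(sparse_softmax_cross_entropy([1], [[-3.0, 3.0]]))
```

The first value is ln(2), roughly 0.693, which is the loss of a two-class model that has learned nothing yet.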

## Part 2: Train the model

```py
# Generate a toy dataset for classification
# X is an n_samples x n_features matrix holding the input features
# y is a vector of length n_samples holding our labels
X, y = make_moons(n_samples=100, noise=0.1, random_state=2018)
X_train, y_train = tf.constant(X[:80,:]), tf.constant(y[:80])
X_test, y_test = tf.constant(X[80:,:]), tf.constant(y[80:])

optimizer = tf.train.GradientDescentOptimizer(5e-1)
model = simple_nn()
model.fit(X_train, y_train, optimizer, num_epochs=500, verbose=50)

'''
Loss at epoch 1: 0.658276
Loss at epoch 50: 0.302146
Loss at epoch 100: 0.268594
Loss at epoch 150: 0.247425
Loss at epoch 200: 0.229143
Loss at epoch 250: 0.197839
Loss at epoch 300: 0.143365
Loss at epoch 350: 0.098039
Loss at epoch 400: 0.070781
Loss at epoch 450: 0.053753
Loss at epoch 500: 0.042401
'''
```

## Part 3: Save the trained model

```py
# Specify the checkpoint directory
checkpoint_directory = 'models_checkpoints/SimpleNN/'

# Create the model checkpoint
checkpoint = tfe.Checkpoint(optimizer=optimizer,
                            model=model,
                            optimizer_step=tf.train.get_or_create_global_step())

# Save the trained model
checkpoint.save(file_prefix=checkpoint_directory)
# 'models_checkpoints/SimpleNN/-1'
```
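
Note the saved path reported above: because `file_prefix` ends with a slash, the checkpoint's base name is just `-1`. The sketch below mimics that naming pattern in plain Python to show how adding a file-name stem changes the result. `checkpoint_name` is a hypothetical helper written for this illustration, and the `ckpt` stem is an arbitrary choice. The pattern is inferred from the output above, not taken from TensorFlow's source.

```python
import os

def checkpoint_name(file_prefix, save_counter):
    # Hypothetical helper: mirrors the "<file_prefix>-<counter>" pattern
    # seen in the path returned by checkpoint.save() above.
    return '%s-%d' % (file_prefix, save_counter)

checkpoint_directory = 'models_checkpoints/SimpleNN/'

# Prefix is the bare directory, as in the tutorial
print(checkpoint_name(checkpoint_directory, 1))
# models_checkpoints/SimpleNN/-1

# Prefix with a file-name stem ("ckpt" is an assumed name)
checkpoint_prefix = os.path.join(checkpoint_directory, 'ckpt')
print(checkpoint_name(checkpoint_prefix, 1))
# models_checkpoints/SimpleNN/ckpt-1
```

Passing a prefix such as `checkpoint_prefix` to `checkpoint.save(file_prefix=...)` should give more recognizable file names, while `tf.train.latest_checkpoint(checkpoint_directory)` still takes the directory.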

## Part 4: Restore the trained model

```py
# Re-initialize the model instance
model = simple_nn()
optimizer = tf.train.GradientDescentOptimizer(5e-1)

# Specify the checkpoint directory
checkpoint_directory = 'models_checkpoints/SimpleNN/'

# Create the model checkpoint
checkpoint = tfe.Checkpoint(optimizer=optimizer,
                            model=model,
                            optimizer_step=tf.train.get_or_create_global_step())

# Restore the model from the most recent checkpoint
checkpoint.restore(tf.train.latest_checkpoint(checkpoint_directory))
# <tensorflow.contrib.eager.python.checkpointable_utils.CheckpointLoadStatus at 0x7fcfd47d2048>
```

## Part 5: Check that the model was restored correctly

```py
model.fit(X_train, y_train, optimizer, num_epochs=1)
# Loss at epoch 1: 0.042220
```

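The loss is consistent with the one we obtained in the last epoch of the previous training run! Note that `fit` prints the loss after applying a gradient update, so the value here (0.042220) reflects one more step on top of the restored weights, which is why it sits slightly below the 0.042401 reported at epoch 500.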
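
To make the idea behind this check concrete without depending on TensorFlow, here is a minimal pure-Python sketch of the same save, restore, and compare workflow. It swaps in a one-feature logistic regression and a JSON file for the tutorial's network and checkpoint. `TinyLogistic`, the toy data, and the `params.json` file name are all assumptions made for this illustration. Unlike the check above, it evaluates the loss without taking a further training step, so the two values should match exactly.

```python
import json
import math
import os
import tempfile

class TinyLogistic:
    """One-feature logistic regression trained by gradient descent."""
    def __init__(self):
        self.w, self.b = 0.0, 0.0

    def predict(self, x):
        return 1.0 / (1.0 + math.exp(-(self.w * x + self.b)))

    def loss(self, xs, ys):
        # Mean binary cross-entropy
        return -sum(y * math.log(self.predict(x)) +
                    (1 - y) * math.log(1.0 - self.predict(x))
                    for x, y in zip(xs, ys)) / len(xs)

    def fit(self, xs, ys, lr=0.5, num_epochs=200):
        for _ in range(num_epochs):
            errors = [self.predict(x) - y for x, y in zip(xs, ys)]
            self.w -= lr * sum(e * x for e, x in zip(errors, xs)) / len(xs)
            self.b -= lr * sum(errors) / len(xs)

xs, ys = [-2.0, -1.0, 1.0, 2.0], [0, 0, 1, 1]
model = TinyLogistic()
model.fit(xs, ys)
loss_before = model.loss(xs, ys)

# Save the parameters, then restore them into a fresh instance
path = os.path.join(tempfile.mkdtemp(), 'params.json')
with open(path, 'w') as f:
    json.dump({'w': model.w, 'b': model.b}, f)

restored = TinyLogistic()
with open(path) as f:
    params = json.load(f)
restored.w, restored.b = params['w'], params['b']

# Evaluate the restored model without any further training step
loss_after = restored.loss(xs, ys)
print(loss_before, loss_after)
```

In the tutorial's setting, the analogous step-free check would be to call `model.loss_fn(X_train, y_train)` on the restored model and compare it with the last loss printed during training.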

## Part 6: Make predictions on new data

```py
logits_test = model.predict(X_test)
print(logits_test)

'''
tf.Tensor(
[[ 1.54352813 -0.83117302]
 [-1.60523365  2.82397487]
 [ 2.87589525 -1.36463485]
 [-1.39461001  2.62404279]
 [ 0.82305161 -0.55651397]
 [ 3.53674391 -2.55593046]
 [-2.97344627  3.46589599]
 [-1.69372442  2.95660466]
 [-1.43226137  2.65357974]
 [ 3.11479995 -1.31765645]
 [-0.65841567  1.60468631]
 [-2.27454367  3.60553595]
 [-1.50170912  2.74410115]
 [ 0.76261479 -0.44574208]
 [ 2.34516959 -1.6859307 ]
 [ 1.92181942 -1.63766352]
 [ 4.06047684 -3.03988941]
 [ 1.00252324 -0.78900484]
 [ 2.79802993 -2.2139734 ]
 [-1.43933035  2.68037059]], shape=(20, 2), dtype=float64)
'''
```
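
The values printed above are unnormalized logits, not probabilities or class labels. As a small sketch of how they could be turned into predictions, the pure-Python snippet below applies a softmax to the first three rows and picks the highest-scoring class. The `softmax` helper and the choice of three rows are assumptions for illustration only.

```python
import math

def softmax(row):
    # Subtract the max before exponentiating for numerical stability
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

# First three rows of the logits printed above
logits_test = [[1.54352813, -0.83117302],
               [-1.60523365, 2.82397487],
               [2.87589525, -1.36463485]]

# Class probabilities: each row sums to 1
probabilities = [softmax(row) for row in logits_test]

# Predicted label: index of the largest logit in each row
predicted_labels = [row.index(max(row)) for row in logits_test]
print(predicted_labels)  # [0, 1, 0]
```

On the actual tensors, `tf.nn.softmax(logits_test)` and `tf.argmax(logits_test, axis=1)` perform the same two steps.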
