# 四、文本序列到 TFRecords

大家好! 在本教程中,我將向你展示如何將原始文本數據解析為 TFRecords。 我知道很多人都卡在輸入處理流水線,尤其是當你開始著手自己的個人項目時。 所以我真的希望它對你們任何人都有用!

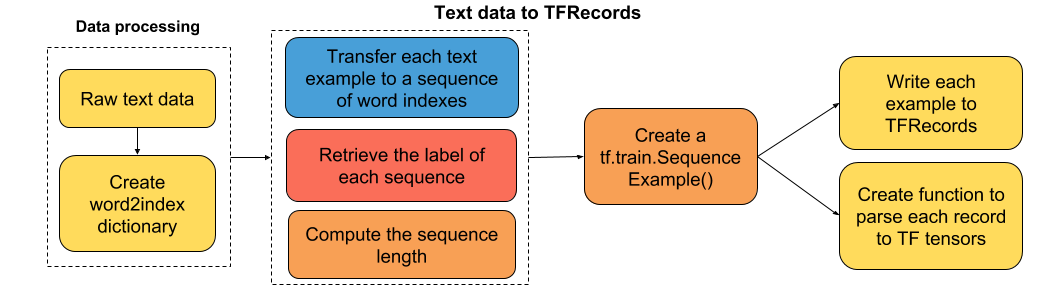

教程的流程圖

### 虛擬的IMDB文本數據

在實踐中,我從斯坦福大學提供的大型電影評論數據集中選擇了一些數據樣本。

### 在這里導入有用的庫

```py

from nltk.tokenize import word_tokenize

import tensorflow as tf

import pandas as pd

import pickle

import random

import glob

import nltk

import re

try:

nltk.data.find('tokenizers/punkt')

except LookupError:

nltk.download('punkt')

```

### 將數據解析為 TFRecords

```py

def imdb2tfrecords(path_data='datasets/dummy_text/', min_word_frequency=5,

max_words_review=700):

'''

這個腳本處理數據

并將其保存為默認的 TensorFlow 文件格式:tfrecords。

Args:

path_data: the path where the imdb data is stored.

min_word_frequency: the minimum frequency of a word, to keep it

in the vocabulary.

max_words_review: the maximum number of words allowed in a review.

'''

# 獲取正面/負面評論的文件名

pos_files = glob.glob(path_data + 'pos/*')

neg_files = glob.glob(path_data + 'neg/*')

# 連接正負評論的文件名

filenames = pos_files + neg_files

# 列出數據集中的所有評論

reviews = [open(filenames[i],'r').read() for i in range(len(filenames))]

# 移除 HTML 標簽

reviews = [re.sub(r'<[^>]+>', ' ', review) for review in reviews]

# 將每個評論分詞

reviews = [word_tokenize(review) for review in reviews]

# 計算每個評論的的長度

len_reviews = [len(review) for review in reviews]

# 展開嵌套列表

reviews = [word for review in reviews for word in review]

# 計算每個單詞的頻率

word_frequency = pd.value_counts(reviews)

# 僅僅保留頻率高于最小值的單詞

vocabulary = word_frequency[word_frequency>=min_word_frequency].index.tolist()

# 添加未知,起始和終止記號

extra_tokens = ['Unknown_token', 'End_token']

vocabulary += extra_tokens

# 創建 word2idx 詞典

word2idx = {vocabulary[i]: i for i in range(len(vocabulary))}

# 將單詞的詞匯表寫到磁盤

pickle.dump(word2idx, open(path_data + 'word2idx.pkl', 'wb'))

def text2tfrecords(filenames, writer, vocabulary, word2idx,

max_words_review):

'''

用于將每個評論解析為部分,并作為 tfrecord 寫入磁盤的函數。

Args:

filenames: the paths of the review files.

writer: the writer object for tfrecords.

vocabulary: list with all the words included in the vocabulary.

word2idx: dictionary of words and their corresponding indexes.

'''

# 打亂 filenames

random.shuffle(filenames)

for filename in filenames:

review = open(filename, 'r').read()

review = re.sub(r'<[^>]+>', ' ', review)

review = word_tokenize(review)

# 將 review 歸約為最大單詞

review = review[-max_words_review:]

# 將單詞替換為來自 word2idx 的等效索引

review = [word2idx[word] if word in vocabulary else

word2idx['Unknown_token'] for word in review]

indexed_review = review + [word2idx['End_token']]

sequence_length = len(indexed_review)

target = 1 if filename.split('/')[-2]=='pos' else 0

# Create a Sequence Example to store our data in

ex = tf.train.SequenceExample()

# 向我們的示例添加非順序特性

ex.context.feature['sequence_length'].int64_list.value.append(sequence_length)

ex.context.feature['target'].int64_list.value.append(target)

# 添加順序特征

token_indexes = ex.feature_lists.feature_list['token_indexes']

for token_index in indexed_review:

token_indexes.feature.add().int64_list.value.append(token_index)

writer.write(ex.SerializeToString())

##########################################################################

# Write data to tfrecords.This might take a while.

##########################################################################

writer = tf.python_io.TFRecordWriter(path_data + 'dummy.tfrecords')

text2tfrecords(filenames, writer, vocabulary, word2idx,

max_words_review)

imdb2tfrecords(path_data='datasets/dummy_text/')

```

### 將 TFRecords 解析為 TF 張量

```py

def parse_imdb_sequence(record):

'''

解析 imdb tfrecords 的腳本

Returns:

token_indexes: sequence of token indexes present in the review.

target: the target of the movie review.

sequence_length: the length of the sequence.

'''

context_features = {

'sequence_length': tf.FixedLenFeature([], dtype=tf.int64),

'target': tf.FixedLenFeature([], dtype=tf.int64),

}

sequence_features = {

'token_indexes': tf.FixedLenSequenceFeature([], dtype=tf.int64),

}

context_parsed, sequence_parsed = tf.parse_single_sequence_example(record,

context_features=context_features, sequence_features=sequence_features)

return (sequence_parsed['token_indexes'], context_parsed['target'],

context_parsed['sequence_length'])

```

如果你希望我在本教程中添加任何內容,請告訴我,我將很樂意進一步改善它。

- TensorFlow 1.x 深度學習秘籍

- 零、前言

- 一、TensorFlow 簡介

- 二、回歸

- 三、神經網絡:感知器

- 四、卷積神經網絡

- 五、高級卷積神經網絡

- 六、循環神經網絡

- 七、無監督學習

- 八、自編碼器

- 九、強化學習

- 十、移動計算

- 十一、生成模型和 CapsNet

- 十二、分布式 TensorFlow 和云深度學習

- 十三、AutoML 和學習如何學習(元學習)

- 十四、TensorFlow 處理單元

- 使用 TensorFlow 構建機器學習項目中文版

- 一、探索和轉換數據

- 二、聚類

- 三、線性回歸

- 四、邏輯回歸

- 五、簡單的前饋神經網絡

- 六、卷積神經網絡

- 七、循環神經網絡和 LSTM

- 八、深度神經網絡

- 九、大規模運行模型 -- GPU 和服務

- 十、庫安裝和其他提示

- TensorFlow 深度學習中文第二版

- 一、人工神經網絡

- 二、TensorFlow v1.6 的新功能是什么?

- 三、實現前饋神經網絡

- 四、CNN 實戰

- 五、使用 TensorFlow 實現自編碼器

- 六、RNN 和梯度消失或爆炸問題

- 七、TensorFlow GPU 配置

- 八、TFLearn

- 九、使用協同過濾的電影推薦

- 十、OpenAI Gym

- TensorFlow 深度學習實戰指南中文版

- 一、入門

- 二、深度神經網絡

- 三、卷積神經網絡

- 四、循環神經網絡介紹

- 五、總結

- 精通 TensorFlow 1.x

- 一、TensorFlow 101

- 二、TensorFlow 的高級庫

- 三、Keras 101

- 四、TensorFlow 中的經典機器學習

- 五、TensorFlow 和 Keras 中的神經網絡和 MLP

- 六、TensorFlow 和 Keras 中的 RNN

- 七、TensorFlow 和 Keras 中的用于時間序列數據的 RNN

- 八、TensorFlow 和 Keras 中的用于文本數據的 RNN

- 九、TensorFlow 和 Keras 中的 CNN

- 十、TensorFlow 和 Keras 中的自編碼器

- 十一、TF 服務:生產中的 TensorFlow 模型

- 十二、遷移學習和預訓練模型

- 十三、深度強化學習

- 十四、生成對抗網絡

- 十五、TensorFlow 集群的分布式模型

- 十六、移動和嵌入式平臺上的 TensorFlow 模型

- 十七、R 中的 TensorFlow 和 Keras

- 十八、調試 TensorFlow 模型

- 十九、張量處理單元

- TensorFlow 機器學習秘籍中文第二版

- 一、TensorFlow 入門

- 二、TensorFlow 的方式

- 三、線性回歸

- 四、支持向量機

- 五、最近鄰方法

- 六、神經網絡

- 七、自然語言處理

- 八、卷積神經網絡

- 九、循環神經網絡

- 十、將 TensorFlow 投入生產

- 十一、更多 TensorFlow

- 與 TensorFlow 的初次接觸

- 前言

- 1.?TensorFlow 基礎知識

- 2. TensorFlow 中的線性回歸

- 3. TensorFlow 中的聚類

- 4. TensorFlow 中的單層神經網絡

- 5. TensorFlow 中的多層神經網絡

- 6. 并行

- 后記

- TensorFlow 學習指南

- 一、基礎

- 二、線性模型

- 三、學習

- 四、分布式

- TensorFlow Rager 教程

- 一、如何使用 TensorFlow Eager 構建簡單的神經網絡

- 二、在 Eager 模式中使用指標

- 三、如何保存和恢復訓練模型

- 四、文本序列到 TFRecords

- 五、如何將原始圖片數據轉換為 TFRecords

- 六、如何使用 TensorFlow Eager 從 TFRecords 批量讀取數據

- 七、使用 TensorFlow Eager 構建用于情感識別的卷積神經網絡(CNN)

- 八、用于 TensorFlow Eager 序列分類的動態循壞神經網絡

- 九、用于 TensorFlow Eager 時間序列回歸的遞歸神經網絡

- TensorFlow 高效編程

- 圖嵌入綜述:問題,技術與應用

- 一、引言

- 三、圖嵌入的問題設定

- 四、圖嵌入技術

- 基于邊重構的優化問題

- 應用

- 基于深度學習的推薦系統:綜述和新視角

- 引言

- 基于深度學習的推薦:最先進的技術

- 基于卷積神經網絡的推薦

- 關于卷積神經網絡我們理解了什么

- 第1章概論

- 第2章多層網絡

- 2.1.4生成對抗網絡

- 2.2.1最近ConvNets演變中的關鍵架構

- 2.2.2走向ConvNet不變性

- 2.3時空卷積網絡

- 第3章了解ConvNets構建塊

- 3.2整改

- 3.3規范化

- 3.4匯集

- 第四章現狀

- 4.2打開問題

- 參考

- 機器學習超級復習筆記

- Python 遷移學習實用指南

- 零、前言

- 一、機器學習基礎

- 二、深度學習基礎

- 三、了解深度學習架構

- 四、遷移學習基礎

- 五、釋放遷移學習的力量

- 六、圖像識別與分類

- 七、文本文件分類

- 八、音頻事件識別與分類

- 九、DeepDream

- 十、自動圖像字幕生成器

- 十一、圖像著色

- 面向計算機視覺的深度學習

- 零、前言

- 一、入門

- 二、圖像分類

- 三、圖像檢索

- 四、對象檢測

- 五、語義分割

- 六、相似性學習

- 七、圖像字幕

- 八、生成模型

- 九、視頻分類

- 十、部署

- 深度學習快速參考

- 零、前言

- 一、深度學習的基礎

- 二、使用深度學習解決回歸問題

- 三、使用 TensorBoard 監控網絡訓練

- 四、使用深度學習解決二分類問題

- 五、使用 Keras 解決多分類問題

- 六、超參數優化

- 七、從頭開始訓練 CNN

- 八、將預訓練的 CNN 用于遷移學習

- 九、從頭開始訓練 RNN

- 十、使用詞嵌入從頭開始訓練 LSTM

- 十一、訓練 Seq2Seq 模型

- 十二、深度強化學習

- 十三、生成對抗網絡

- TensorFlow 2.0 快速入門指南

- 零、前言

- 第 1 部分:TensorFlow 2.00 Alpha 簡介

- 一、TensorFlow 2 簡介

- 二、Keras:TensorFlow 2 的高級 API

- 三、TensorFlow 2 和 ANN 技術

- 第 2 部分:TensorFlow 2.00 Alpha 中的監督和無監督學習

- 四、TensorFlow 2 和監督機器學習

- 五、TensorFlow 2 和無監督學習

- 第 3 部分:TensorFlow 2.00 Alpha 的神經網絡應用

- 六、使用 TensorFlow 2 識別圖像

- 七、TensorFlow 2 和神經風格遷移

- 八、TensorFlow 2 和循環神經網絡

- 九、TensorFlow 估計器和 TensorFlow HUB

- 十、從 tf1.12 轉換為 tf2

- TensorFlow 入門

- 零、前言

- 一、TensorFlow 基本概念

- 二、TensorFlow 數學運算

- 三、機器學習入門

- 四、神經網絡簡介

- 五、深度學習

- 六、TensorFlow GPU 編程和服務

- TensorFlow 卷積神經網絡實用指南

- 零、前言

- 一、TensorFlow 的設置和介紹

- 二、深度學習和卷積神經網絡

- 三、TensorFlow 中的圖像分類

- 四、目標檢測與分割

- 五、VGG,Inception,ResNet 和 MobileNets

- 六、自編碼器,變分自編碼器和生成對抗網絡

- 七、遷移學習

- 八、機器學習最佳實踐和故障排除

- 九、大規模訓練

- 十、參考文獻